Learning Deep Interleaved Networks with Asymmetric Co-Attention for Image Restoration

2021-03-02 17:30

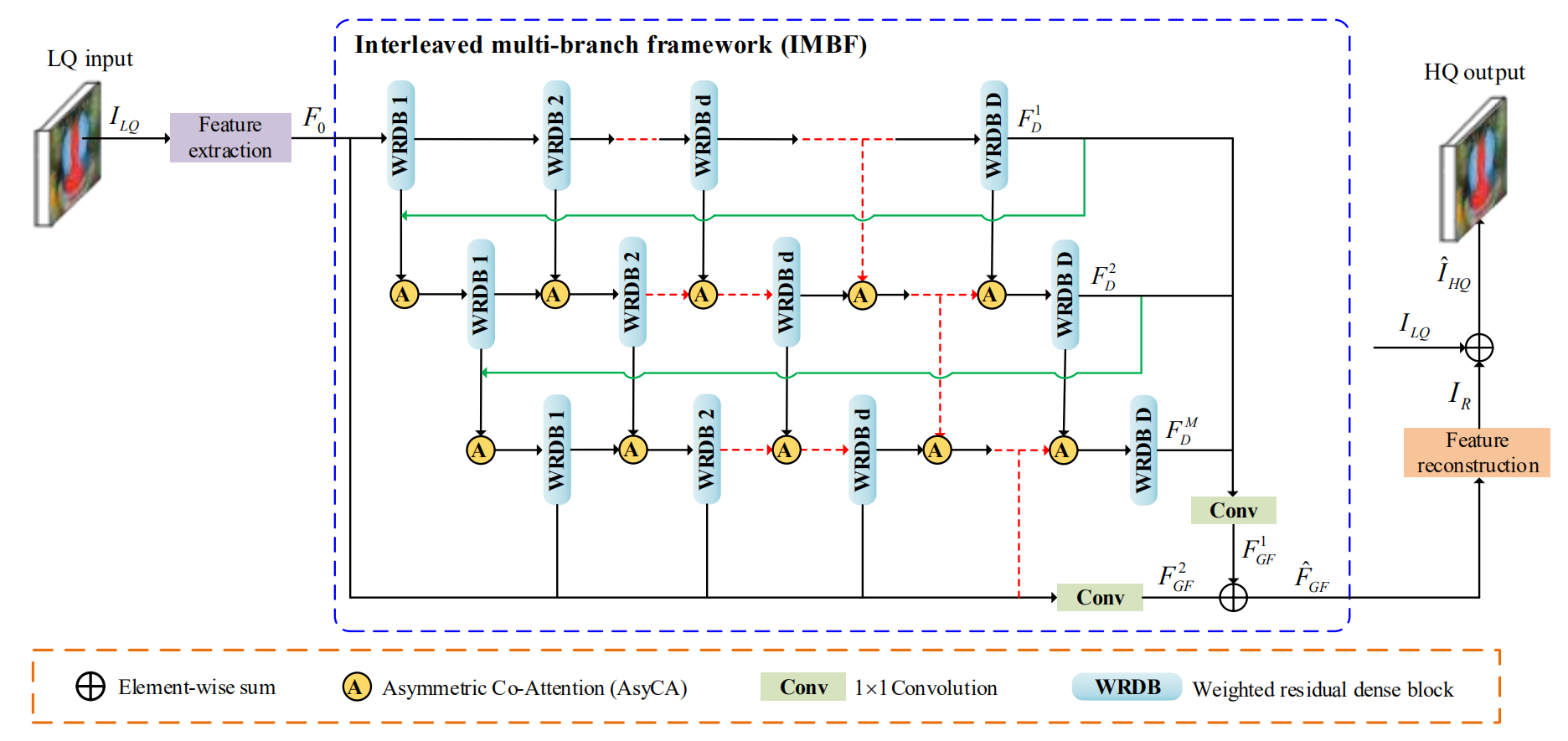

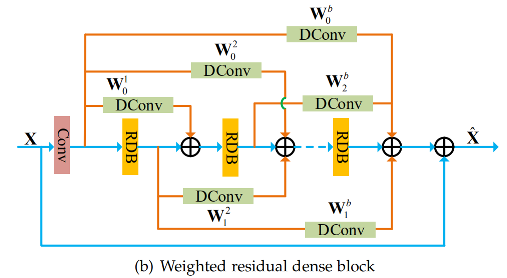

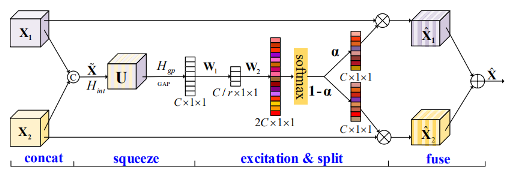

Paper: https://arxiv.org/abs/2010.15689
Code: https://github.com/lifengshiwo/DIN
Original post: https://www.cnblogs.com/gaopursuit/p/14323109.html

1. Introduction

The authors identify a key issue in current image restoration methods: hierarchical features under different receptive fields can provide abundant information, so it is crucial to incorporate features from multiple layers for image restoration tasks.

Existing methods mainly address this in one of two ways. The first is global dense connections. The problem with this approach is that the subsequent convolutional layers don't have access to receive information from preceding layers. The second is local dense connections with global fusion. These methods only use dense connections to fuse hierarchical features from the convolutional layers within each block, and the information from the blocks is combined only at the tail of the network. As a result, preceding low-level features lack full incorporation with later high-level features.

To make better use of features from different receptive fields, the authors propose a deep interleaved network (DIN). DIN aggregates multi-level features and learns how information at different states should be combined for image restoration tasks. It enriches the feature representation in two ways:

Within each block: a weighted residual dense block (WRDB) is composed of multiple residual dense blocks. Different weighted parameters are assigned to different inputs for more precise feature aggregation and propagation.

Across the branches of the multi-branch network: asymmetric co-attention (AsyCA) is attached to the interleaved nodes to adaptively emphasize informative features from different states. This effectively improves the network's ability to discriminate high-frequency details during recovery.

2. DIN Network Architecture

DIN consists of three parts: feature extraction, an interleaved multi-branch framework, and feature reconstruction. The implementation uses 4 branches. The network contains two key modules, WRDB and AsyCA.

WRDB is shown in the figure. The authors note that, as in DenseNet, dense connections can reuse the feature maps from preceding layers and improve the information flow through the network. They modify the DenseNet structure so that features are not connected directly; instead, each connection is weighted. In the figure, DConv denotes a depth-wise convolution with a 1x1 kernel, so its computational cost is low.

AsyCA is shown in the figure; it essentially follows the structure of SKNet. According to the authors, this module can adaptively emphasize important information from different states.
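The weighted dense connection described for WRDB can be illustrated with a small NumPy sketch. Read it as an illustration under stated assumptions, not as the DIN code. It assumes that each incoming feature map passes through a 1x1 depth-wise convolution, which amounts to a per-channel scale plus bias, like the DConv in the figure. The weighted maps are then concatenated and fused back to C channels by an ordinary 1x1 convolution. The concatenate-then-fuse step, the local residual, the stand-in `block_fn` for a residual dense block, and all function names are hypothetical.

```python
import numpy as np

def dconv_1x1(x, w, b):
    """1x1 depth-wise conv: channel c is multiplied by w[c] and shifted by b[c].
    x has shape (C, H, W); w and b have shape (C,)."""
    return x * w[:, None, None] + b[:, None, None]

def conv_1x1(x, weight):
    """Standard 1x1 conv without bias (mixes channels); weight has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

def weighted_dense_fusion(features, ws, bs, fuse_weight):
    """Weight every incoming map with its own DConv, concatenate along channels,
    and fuse back to C channels with a 1x1 conv (assumed design, not from the paper)."""
    weighted = [dconv_1x1(f, w, b) for f, w, b in zip(features, ws, bs)]
    return conv_1x1(np.concatenate(weighted, axis=0), fuse_weight)

def dense_chain(x, block_fn, num_blocks, rng):
    """Hypothetical WRDB-like chain: block i sees a weighted fusion of x and the
    outputs of all earlier blocks; an assumed local residual adds x at the end."""
    C = x.shape[0]
    states = [x]
    for _ in range(num_blocks):
        k = len(states)
        ws = [rng.standard_normal(C) for _ in range(k)]  # random stand-ins for learned weights
        bs = [np.zeros(C) for _ in range(k)]
        fuse = rng.standard_normal((C, k * C)) / np.sqrt(k * C)
        states.append(block_fn(weighted_dense_fusion(states, ws, bs, fuse)))
    return x + states[-1]

def param_counts(C):
    """Weights (ignoring bias) of a 1x1 depth-wise conv vs a standard 1x1 conv."""
    return C, C * C

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((8, 16, 16))
    y = dense_chain(x, block_fn=lambda t: np.maximum(t, 0.0), num_blocks=3, rng=rng)
    print(y.shape)           # (8, 16, 16)
    print(param_counts(64))  # (64, 4096)
```

With C = 64 channels, a 1x1 depth-wise convolution needs 64 weights per connection, while a standard 1x1 convolution needs 4,096. This is consistent with the remark above that DConv adds little computation.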
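Because AsyCA is described as following the SKNet structure, the NumPy sketch below shows the generic SKNet-style selective fusion of two feature maps. The two maps are fused by summation and squeezed by global average pooling. They are then weighted channel by channel with a softmax taken across the two inputs. It does not reproduce whatever makes AsyCA asymmetric, and it omits the batch normalization SKNet places after the squeeze FC layer. The weight shapes, the reduced dimension `d`, and the function names are assumptions for illustration.

```python
import numpy as np

def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def sk_fusion(a, b, w_reduce, w_a, w_b):
    """SKNet-style selective fusion of two feature maps a, b of shape (C, H, W).
    w_reduce: (d, C) squeeze FC; w_a, w_b: (C, d) per-input excitation FCs (hypothetical)."""
    u = a + b                                   # fuse: element-wise sum of the two states
    s = u.mean(axis=(1, 2))                     # squeeze: global average pooling -> (C,)
    z = np.maximum(w_reduce @ s, 0.0)           # compact descriptor via FC + ReLU -> (d,)
    att = softmax(np.stack([w_a @ z, w_b @ z]), axis=0)  # (2, C), sums to 1 per channel
    out = att[0][:, None, None] * a + att[1][:, None, None] * b  # select: channel-wise weighted sum
    return out, att

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, d = 16, 4
    a = rng.standard_normal((C, 8, 8))
    b = rng.standard_normal((C, 8, 8))
    out, att = sk_fusion(a, b,
                         rng.standard_normal((d, C)),
                         rng.standard_normal((C, d)),
                         rng.standard_normal((C, d)))
    print(out.shape, np.allclose(att.sum(axis=0), 1.0))  # (16, 8, 8) True
```

Since the two attention weights sum to 1 for every channel, the module can only redistribute emphasis between the two inputs; it cannot rescale both upward together. This is the sense in which it "selects" informative features from each state.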


Article link: http://soscw.com/essay/59141.html