Fetching Data with the Baidu Maps API

2021-06-06 02:05

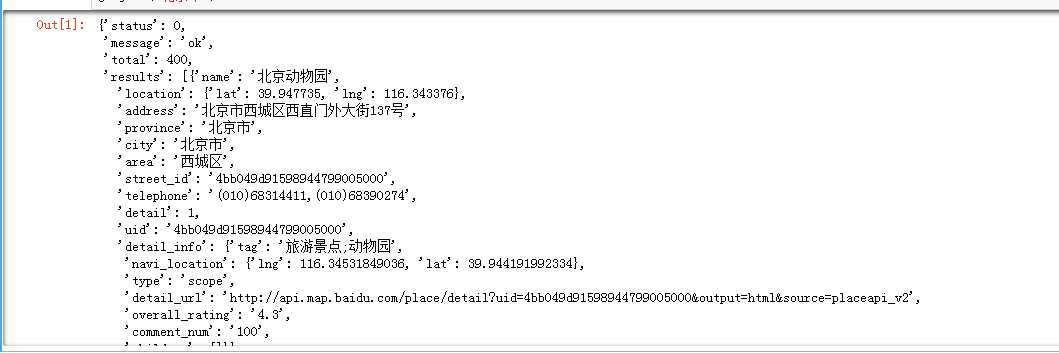

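Large tech companies now offer an ever wider range of services and fairly complete data, covering everyday services, office work, artificial intelligence, and more. Their user bases are large, so the amount of information they collect through users is large as well. Besides Baidu, many other providers in China offer free data APIs, including Sina, Douban Movies, Ele.me, Alibaba, and Tencent. This post uses the Baidu Maps API to request the data we want.

Step 1. Register a Baidu developer account

After registering, you can create an application and obtain its AK, which is the API key; this is the most important piece. The application name can be anything. An ordinary user has a quota of only 2,000 calls per day, while a verified user has 100,000 calls per day.

The Baidu Maps Web Service API documentation lists the available endpoints and their parameters, including the AK parameter we need to pass. Requests are sent with GET.

1. Use the API to fetch park data for Beijing. This requires setting up the request parameters.

2. Get every city that has parks and save the results to a file.

3. Get the park data for every city. Before fetching data city by city, create a baidumap database in MySQL to hold all the data.

4. Get the details of every park. The baidumap database now has a city table holding the park data for every city, but that data is fairly coarse, so next we use the Baidu Maps place search service to fetch the details of each park.

The code for these steps follows in the same order.

Original article: https://www.cnblogs.com/jzxs/p/10795246.html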

import requests
import json

def getjson(loc):
    """Search Baidu Maps for parks in the given region and return the parsed JSON."""
    headers = {'User-Agent': 'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6'}
    pa = {
        'q': '公园',          # search keyword: "park"
        'region': loc,
        'scope': '2',
        'page_size': 20,
        'page_num': 0,
        'output': 'json',
        'ak': 'YOUR_AK'       # replace with your own AK
    }
    r = requests.get("http://api.map.baidu.com/place/v2/search", params=pa, headers=headers)
    decodejson = json.loads(r.text)
    return decodejson

print(getjson('北京市'))
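Before using a response, it can help to confirm that the request succeeded. The sketch below assumes the parsed response carries a `status` field (with 0 meaning success) and a `results` list, which is how the code in this post reads it. `summarize_response` and the sample dictionary are illustrative and not part of the original post.

```python
def summarize_response(decodejson):
    """Return (ok, names) for a parsed place-search response.

    Assumes 'status' == 0 means success and that each entry of 'results'
    may carry a 'name' key; both are assumptions about the response shape.
    """
    ok = decodejson.get('status') == 0
    results = decodejson.get('results') or []
    names = [item.get('name') for item in results if isinstance(item, dict)]
    return ok, names


# Hand-written sample, not real API output
sample = {
    'status': 0,
    'message': 'ok',
    'results': [{'name': 'Park A'}, {'name': 'Park B'}],
}
ok, names = summarize_response(sample)
print(ok, names)
```

With the real function, `summarize_response(getjson('北京市'))` would give a quick check before any further processing.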

# Step 2: list the cities that have parks, province by province, and save them to a file.
# Reuses getjson() from the first script.
province_list = ['江苏省', '浙江省', '广东省', '福建省', '山东省', '河南省', '河北省', '四川省', '辽宁省', '云南省',
                 '湖南省', '湖北省', '江西省', '安徽省', '山西省', '广西壮族自治区', '陕西省', '黑龙江省', '内蒙古自治区',
                 '贵州省', '吉林省', '甘肃省', '新疆维吾尔自治区', '海南省', '宁夏回族自治区', '青海省', '西藏自治区']

with open('cities.txt', 'a+', encoding='utf-8') as f:
    for eachprovince in province_list:
        decodejson = getjson(eachprovince)
        # Each result is read as one city: its name and its park count
        for eachcity in decodejson.get('results', []):
            print(eachcity)
            city = eachcity['name']
            num = eachcity['num']
            output = '\t'.join([city, str(num)]) + '\r\n'
            f.write(output)

# The municipalities and special administrative regions come from a nationwide query.
# Reuses getjson() from the first script.
# Note: this writes to cities789.txt, while the later step reads only cities.txt.
decodejson = getjson('全国')
six_cities_list = ['北京市', '上海市', '重庆市', '天津市', '香港特别行政区', '澳门特别行政区']

with open('cities789.txt', 'a+', encoding='utf-8') as f:
    for eachprovince in decodejson['results']:
        city = eachprovince['name']
        num = eachprovince['num']
        if city in six_cities_list:
            output = '\t'.join([city, str(num)]) + '\r\n'
            f.write(output)

# coding=utf-8
# Step 3: create the city table in the baidumap database.
import pymysql

conn = pymysql.connect(host='localhost', user='root', passwd='*******', db='baidumap', charset="utf8")
cur = conn.cursor()

sql = """CREATE TABLE city (
    id INT NOT NULL AUTO_INCREMENT,
    city VARCHAR(200) NOT NULL,
    park VARCHAR(200) NOT NULL,
    location_lat FLOAT,
    location_lng FLOAT,
    address VARCHAR(200),
    street_id VARCHAR(200),
    uid VARCHAR(200),
    created_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
    PRIMARY KEY (id)
);"""

cur.execute(sql)
conn.commit()
cur.close()
conn.close()

# Load the city names (first tab-separated field) saved earlier
city_list = list()
with open("cities.txt", 'r', encoding='utf-8') as txt_file:
    for eachLine in txt_file:
        if eachLine != "" and eachLine != "\n":
            fields = eachLine.split("\t")
            city = fields[0]
            city_list.append(city)
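The municipalities were written to cities789.txt, while the loop above reads only cities.txt. If both sets should be crawled, the two files can be read together. A minimal sketch, assuming both files use the tab-separated name and count layout written earlier; `read_city_names` is a hypothetical helper, the counts in the demo are made up, and the demo writes temporary files rather than touching the real ones.

```python
import os
import tempfile


def read_city_names(paths):
    """Collect city names (first tab-separated field) from several files,
    skipping blank lines and duplicates while keeping first-seen order."""
    names = []
    seen = set()
    for path in paths:
        with open(path, 'r', encoding='utf-8') as txt_file:
            for line in txt_file:
                city = line.split('\t')[0].strip()
                if city and city not in seen:
                    seen.add(city)
                    names.append(city)
    return names


# Demo with temporary files standing in for cities.txt and cities789.txt
with tempfile.TemporaryDirectory() as tmp:
    first = os.path.join(tmp, 'cities.txt')
    second = os.path.join(tmp, 'cities789.txt')
    with open(first, 'w', encoding='utf-8') as f:
        f.write('南京市\t120\n\n苏州市\t95\n')  # made-up counts
    with open(second, 'w', encoding='utf-8') as f:
        f.write('北京市\t300\n南京市\t120\n')
    demo_city_list = read_city_names([first, second])

print(demo_city_list)
```

In the real workflow, `city_list = read_city_names(['cities.txt', 'cities789.txt'])` would replace the single-file loop.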

# Next, crawl the data for each city and insert it into the city table
import requests
import json
import pymysql

conn = pymysql.connect(host='localhost', user='root', passwd='********', db='baidumap', charset="utf8")
cur = conn.cursor()

def getjson(loc, page_num):
    """Fetch one page of park search results for the given region."""
    headers = {'User-Agent': 'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6'}
    pa = {
        'q': '公园',          # search keyword: "park"
        'region': loc,
        'scope': '2',
        'page_size': 20,
        'page_num': page_num,
        'output': 'json',
        'ak': 'YOUR_AK'       # replace with your own AK
    }
    r = requests.get("http://api.map.baidu.com/place/v2/search", params=pa, headers=headers)
    decodejson = json.loads(r.text)
    return decodejson

sql = """INSERT INTO baidumap.city
    (city, park, location_lat, location_lng, address, street_id, uid)
    VALUES
    (%s, %s, %s, %s, %s, %s, %s);"""

for eachcity in city_list:
    not_last_page = True
    page_num = 0
    while not_last_page:
        decodejson = getjson(eachcity, page_num)
        # An empty or missing 'results' list marks the last page
        if decodejson.get('results'):
            for eachone in decodejson['results']:
                # Missing fields become None and are stored as NULL
                location = eachone.get('location', {})
                park = eachone.get('name')
                location_lat = location.get('lat')
                location_lng = location.get('lng')
                address = eachone.get('address')
                street_id = eachone.get('street_id')
                uid = eachone.get('uid')
                cur.execute(sql, (eachcity, park, location_lat, location_lng, address, street_id, uid))
            conn.commit()
            page_num += 1
        else:
            not_last_page = False

cur.close()
conn.close()
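The page loop above stops when a page comes back empty. The same idea can be written as a generator that takes the fetch function as a parameter, which makes it testable without network access. This is a sketch under assumptions: `iter_results`, `max_pages`, and `fake_fetch` are hypothetical names, and the cap on pages is an added safeguard that is not in the original code.

```python
def iter_results(fetch_page, loc, max_pages=50):
    """Yield result items page by page until a page is empty or max_pages is reached.

    fetch_page(loc, page_num) must return a dict shaped like the parsed API
    response, i.e. with a 'results' list.
    """
    for page_num in range(max_pages):
        decodejson = fetch_page(loc, page_num)
        results = decodejson.get('results') or []
        if not results:
            break
        for item in results:
            yield item


def fake_fetch(loc, page_num):
    """Stand-in for getjson(loc, page_num): two pages of data, then an empty page."""
    pages = [
        {'results': [{'name': 'Park A'}, {'name': 'Park B'}]},
        {'results': [{'name': 'Park C'}]},
    ]
    return pages[page_num] if page_num < len(pages) else {'results': []}


park_names = [item['name'] for item in iter_results(fake_fetch, 'demo')]
print(park_names)
```

With the real function, `iter_results(getjson, eachcity)` would yield the same items the while loop processes, one city at a time.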

# coding=utf-8
# Step 4: create the park table that will hold the detailed information.
import pymysql

conn = pymysql.connect(host='localhost', user='root', passwd='*******', db='baidumap', charset="utf8")
cur = conn.cursor()

sql = """CREATE TABLE park (
    id INT NOT NULL AUTO_INCREMENT,
    park VARCHAR(200) NOT NULL,
    location_lat FLOAT,
    location_lng FLOAT,
    address VARCHAR(200),
    street_id VARCHAR(200),
    telephone VARCHAR(200),
    detail INT,
    uid VARCHAR(200),
    tag VARCHAR(200),
    type VARCHAR(200),
    detail_url VARCHAR(800),
    price INT,
    overall_rating FLOAT,
    image_num INT,
    comment_num INT,
    shop_hours VARCHAR(800),
    alias VARCHAR(800),
    keyword VARCHAR(800),
    scope_type VARCHAR(200),
    scope_grade VARCHAR(200),
    description VARCHAR(9000),
    created_time TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
    PRIMARY KEY (id)
);"""

cur.execute(sql)
conn.commit()
cur.close()
conn.close()

import requests
import json
import pymysql

conn = pymysql.connect(host='localhost', user='root', passwd='********', db='baidumap', charset="utf8")
cur = conn.cursor()

# Read the uid of every park stored in the city table
sql = "Select uid from baidumap.city where id > 0;"
cur.execute(sql)
results = cur.fetchall()

def getjson(uid):
    """Fetch the detail record for one park by its uid."""
    headers = {'User-Agent': 'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US; rv:1.9.1.6) Gecko/20091201 Firefox/3.5.6'}
    pa = {
        'uid': uid,
        'scope': '2',
        'output': 'json',
        'ak': 'YOUR_AK'       # replace with your own AK
    }
    # The original post requested /place/v2/search here. The loop below reads the
    # 'result' key, which matches the place detail endpoint, so /place/v2/detail is assumed.
    r = requests.get("http://api.map.baidu.com/place/v2/detail", params=pa, headers=headers)
    decodejson = json.loads(r.text)
    return decodejson

sql = """INSERT INTO baidumap.park
    (park, location_lat, location_lng, address, street_id, uid, telephone, detail, tag, detail_url, type, overall_rating, image_num,
    comment_num, keyword, shop_hours, alias, scope_type, scope_grade, description)
    VALUES
    (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s);"""

for row in results:
    print(row)
    uid = row[0]
    decodejson = getjson(uid)
    info = decodejson.get('result')
    if not isinstance(info, dict) or not info:
        continue  # no detail record returned for this uid

    # Missing fields become None and are stored as NULL
    location = info.get('location', {})
    detail_info = info.get('detail_info', {})

    # Join the review keywords into one '/'-terminated string, or None if absent
    try:
        key_words = ''.join(k['keyword'] + '/' for k in detail_info['di_review_keyword'])
    except (KeyError, TypeError):
        key_words = None

    cur.execute(sql, (
        info.get('name'), location.get('lat'), location.get('lng'),
        info.get('address'), info.get('street_id'), uid,
        info.get('telephone'), info.get('detail'),
        detail_info.get('tag'), detail_info.get('detail_url'), detail_info.get('type'),
        detail_info.get('overall_rating'), detail_info.get('image_num'),
        detail_info.get('comment_num'), key_words, detail_info.get('shop_hours'),
        detail_info.get('alias'), detail_info.get('scope_type'),
        detail_info.get('scope_grade'), detail_info.get('description'),
    ))
    conn.commit()

cur.close()
conn.close()
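The detail loop issues one request per uid, so a large city table can run into the daily quota mentioned at the start (2,000 calls for an ordinary account). One way to stop cleanly is to count calls and halt at a limit. A minimal sketch: `CallBudget` is a hypothetical helper, not part of any Baidu SDK, the uids are placeholders, and the limit is something you set yourself.

```python
class CallBudget:
    """Count calls and refuse further ones once a fixed limit is reached."""

    def __init__(self, limit):
        self.limit = limit
        self.used = 0

    def spend(self):
        """Record one call; return False if the budget is already exhausted."""
        if self.used >= self.limit:
            return False
        self.used += 1
        return True


budget = CallBudget(limit=3)
processed = []
for uid in ['uid-1', 'uid-2', 'uid-3', 'uid-4', 'uid-5']:  # placeholder uids
    if not budget.spend():
        break  # stop before exceeding the limit
    processed.append(uid)  # in the real loop, getjson(uid) would be called here

print(processed)
```

Because the uids come from the database, a run that stops early can resume later from the first uid that was not processed.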