[TimLinux] Installing Scrapy on Windows

2021-07-10 02:06

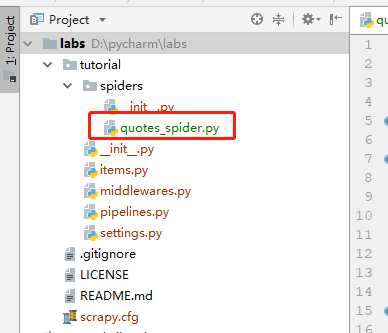

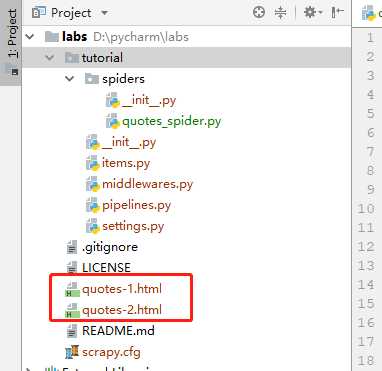

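Original article: https://www.cnblogs.com/timlinux/p/9692319.html

Step 1 (Python): I won't go into detail here. Download the installer from the official site and run it. I chose Python 3.6.5, the 64-bit (x86_64) build for Windows.

Step 2 (PATH): I am on Windows 10. Right-click "This PC" and choose "Properties". In the panel that opens, choose "Advanced system settings". In the new window, click the "Advanced" tab and then "Environment Variables". Under the user variables, select Path (create it if it does not exist) and add C:\Python365\Scripts;C:\Python365; to its value.

Step 3 (Scrapy): I installed with pip. In a newly opened cmd window, run pip install scrapy. You will probably hit the error shown in section 3. It occurs because Visual C++ 2015 (version 14.0) is not installed, so pip cannot compile Twisted from source. We do not actually need the compiler, and the link given in the error message is not a working one. Instead, download a prebuilt Twisted .whl file from this site and install it directly:

https://www.lfd.uci.edu/~gohlke/pythonlibs/
https://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

On that page, pick the package that matches your Python version and architecture (I chose Twisted-18.7.0-cp36-cp36m-win_amd64.whl). Once it has downloaded, run pip install Twisted-18.7.0-cp36-cp36m-win_amd64.whl. After that, run pip install scrapy again and the rest of the installation completes.

Step 4 (GitHub): It is best to keep a record of what you study and work on, so I created my own GitHub repository for this: https://github.com/timscm/myscrapy

Step 5 (example): The official documentation provides a simple example. I won't explain it here; the goal is only to check that it runs. https://docs.scrapy.org/en/latest/intro/tutorial.html

The file structure is shown in the figure. The spider is in tutorial/spiders/quotes_spider.py (section 5.2) and is run from a cmd window (section 5.3). The first run fails, reporting that win32api is missing; the fix is to install the pypiwin32 package and run the crawl again. Section 5.3 ends with a look at the saved file and its contents.

1. Installing Python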

2. Configuring PATH
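To confirm that the PATH change described in this post took effect, a small check like the following can be run from a newly opened cmd window. This is only a sketch: the helper name `missing_from_path` is made up for illustration, and the two directories are the ones used in this post.

```python
import os


def missing_from_path(required_dirs, path_value=None, sep=None):
    """Return the directories from required_dirs that are absent from PATH.

    path_value and sep default to the current process's PATH and the
    platform separator; they are parameters only so the check can be
    tried with sample values on any machine.
    """
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    if sep is None:
        sep = os.pathsep

    def normalize(entry):
        # Windows paths are case-insensitive; a trailing slash is harmless.
        return entry.strip().rstrip("\\/").lower()

    present = {normalize(entry) for entry in path_value.split(sep) if entry.strip()}
    return [d for d in required_dirs if normalize(d) not in present]


if __name__ == "__main__":
    # Directories taken from this post; adjust them to your own install location.
    wanted = [r"C:\Python365\Scripts", r"C:\Python365"]
    print(missing_from_path(wanted))
```

An empty list means both directories are visible to new processes. Windows that were already open before the change keep the old PATH, so open a fresh cmd window first.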

3. Installing Scrapy

building 'twisted.test.raiser' extension
error: Microsoft Visual C++ 14.0 is required. Get it with "Microsoft Visual C++ Build Tools":
http://landinghub.visualstudio.com/visual-cpp-build-tools
----------------------------------------
Command "C:\Python365\python.exe -u -c "import setuptools, tokenize;__file__='C:\\Users\\admin\\AppData\\Local\\Temp\\pip-install-fkvobf_0\\Twisted\\setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" install --record C:\Users\admin\AppData\Local\Temp\pip-record-6z5m4wfj\install-record.txt --single-version-externally-managed --compile" failed with error code 1 in C:\Users\admin\AppData\Local\Temp\pip-install-fkvobf_0\Twisted\

Twisted, an event-driven networking engine.

Twisted-18.7.0-cp27-cp27m-win32.whl
Twisted-18.7.0-cp27-cp27m-win_amd64.whl
Twisted-18.7.0-cp34-cp34m-win32.whl
Twisted-18.7.0-cp34-cp34m-win_amd64.whl
Twisted-18.7.0-cp35-cp35m-win32.whl
Twisted-18.7.0-cp35-cp35m-win_amd64.whl
Twisted-18.7.0-cp36-cp36m-win32.whl
Twisted-18.7.0-cp36-cp36m-win_amd64.whl
Twisted-18.7.0-cp37-cp37m-win32.whl
Twisted-18.7.0-cp37-cp37m-win_amd64.whl
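Choosing the right file from the list above comes down to matching the Python version and whether the interpreter is 32-bit or 64-bit. The sketch below builds the expected file name for the running interpreter. The function names are hypothetical, and the naming pattern is simply the one visible in the list, so it should only be relied on for the CPython versions shown there.

```python
import struct
import sys


def expected_wheel_name(package, version, py_major, py_minor, bits):
    """Build a wheel file name in the pattern used by the list above.

    The pattern is cpXY-cpXYm-<platform>, as seen for CPython 2.7 and
    3.4 to 3.7 in the listing; other versions may be named differently.
    """
    py_tag = "cp{}{}".format(py_major, py_minor)
    abi_tag = py_tag + "m"
    platform_tag = "win_amd64" if bits == 64 else "win32"
    return "{}-{}-{}-{}-{}.whl".format(package, version, py_tag, abi_tag, platform_tag)


def interpreter_bits():
    # Pointer size in bytes times 8 gives 32 or 64 for the running interpreter.
    return struct.calcsize("P") * 8


if __name__ == "__main__":
    name = expected_wheel_name(
        "Twisted", "18.7.0", sys.version_info[0], sys.version_info[1], interpreter_bits()
    )
    print(name)
```

For the setup in this post (Python 3.6, 64-bit) the function returns Twisted-18.7.0-cp36-cp36m-win_amd64.whl, which is the file the author picked.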

Installing collected packages: scrapy

Successfully installed scrapy-1.5.1

4. GitHub repository

5. Example

PS D:\pycharm\labs> scrapy

Scrapy 1.5.1 - no active project

Usage:

scrapy

5.1. Creating the project

PS D:\pycharm\labs> scrapy startproject tutorial .

New Scrapy project 'tutorial', using template directory 'c:\\python365\\lib\\site-packages\\scrapy\\templates\\project', created in:

D:\pycharm\labs

You can start your first spider with:

cd .

scrapy genspider example example.com

PS D:\pycharm\labs> dir

    Directory: D:\pycharm\labs

Mode                LastWriteTime         Length Name
----                -------------         ------ ----
d-----        2018/9/23     10:58                .idea
d-----        2018/9/23     11:46                tutorial
-a----        2018/9/23     11:05           1307 .gitignore
-a----        2018/9/23     11:05          11558 LICENSE
-a----        2018/9/23     11:05             24 README.md
-a----        2018/9/23     11:46            259 scrapy.cfg

5.2. Creating the spider

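# tutorial/spiders/quotes_spider.py
import scrapy


class QuotesSpider(scrapy.Spider):
    # Name used on the command line: scrapy crawl quotes
    name = "quotes"

    def start_requests(self):
        urls = [
            'http://quotes.toscrape.com/page/1/',
            'http://quotes.toscrape.com/page/2/',
        ]
        for url in urls:
            yield scrapy.Request(url=url, callback=self.parse)

    def parse(self, response):
        # The URL ends with ".../page/<n>/", so the second-to-last piece is the page number.
        page = response.url.split("/")[-2]
        filename = 'quotes-%s.html' % page
        # response.body is bytes, hence binary mode.
        with open(filename, 'wb') as f:
            f.write(response.body)
        self.log('Saved file %s' % filename)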
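The file name in parse() depends on how the URL splits on "/". The following stand-alone sketch repeats those two lines outside Scrapy so the behavior can be tried directly; `filename_for` is a name invented here for illustration.

```python
def filename_for(url):
    """Mirror the first two lines of parse(): page number, then file name."""
    # "http://quotes.toscrape.com/page/1/".split("/") ends with
    # [..., "page", "1", ""], so index -2 is the page number only
    # because the URL has a trailing slash.
    page = url.split("/")[-2]
    return "quotes-%s.html" % page


if __name__ == "__main__":
    for url in (
        "http://quotes.toscrape.com/page/1/",
        "http://quotes.toscrape.com/page/2/",
    ):
        print(url, "->", filename_for(url))
```

The two start URLs map to quotes-1.html and quotes-2.html. A URL without the trailing slash would instead yield quotes-page.html, so the indexing is tied to this site's URL layout.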

5.3. Running

PS D:\pycharm\labs> scrapy crawl quotes

2018-09-23 11:51:41 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: tutorial)
2018-09-23 11:51:41 [scrapy.utils.log] INFO: Versions: lxml 4.2.5.0, libxml2 2.9.5, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.5 (v3.6.5:f59c0932b4, Mar 28 2018, 17:00:18) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 18.0.0 (OpenSSL 1.1.0i 14 Aug 2018), cryptography 2.3.1, Platform Windows-10-10.0.17134-SP0
2018-09-23 11:51:41 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'tutorial', 'NEWSPIDER_MODULE': 'tutorial.spiders', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['tutorial.spiders']}
2018-09-23 11:51:41 [scrapy.middleware] INFO: Enabled extensions:
['scrapy.extensions.corestats.CoreStats',
 'scrapy.extensions.telnet.TelnetConsole',
 'scrapy.extensions.logstats.LogStats']
Unhandled error in Deferred:
2018-09-23 11:51:41 [twisted] CRITICAL: Unhandled error in Deferred:

2018-09-23 11:51:41 [twisted] CRITICAL:
Traceback (most recent call last):
  File "c:\python365\lib\site-packages\twisted\internet\defer.py", line 1418, in _inlineCallbacks
    result = g.send(result)
  File "c:\python365\lib\site-packages\scrapy\crawler.py", line 80, in crawl
    self.engine = self._create_engine()
  File "c:\python365\lib\site-packages\scrapy\crawler.py", line 105, in _create_engine
    return ExecutionEngine(self, lambda _: self.stop())
  File "c:\python365\lib\site-packages\scrapy\core\engine.py", line 69, in __init__
    self.downloader = downloader_cls(crawler)
  File "c:\python365\lib\site-packages\scrapy\core\downloader\__init__.py", line 88, in __init__
    self.middleware = DownloaderMiddlewareManager.from_crawler(crawler)
  File "c:\python365\lib\site-packages\scrapy\middleware.py", line 58, in from_crawler
    return cls.from_settings(crawler.settings, crawler)
  File "c:\python365\lib\site-packages\scrapy\middleware.py", line 34, in from_settings
    mwcls = load_object(clspath)
  File "c:\python365\lib\site-packages\scrapy\utils\misc.py", line 44, in load_object
    mod = import_module(module)
  File "c:\python365\lib\importlib\__init__.py", line 126, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)

[the rest of the traceback is missing from the source]

PS D:\pycharm\labs> pip install pypiwin32
Collecting pypiwin32
  Downloading https://files.pythonhosted.org/packages/d0/1b/2f292bbd742e369a100c91faa0483172cd91a1a422a6692055ac920946c5/pypiwin32-223-py3-none-any.whl
Collecting pywin32>=223 (from pypiwin32)
  Downloading https://files.pythonhosted.org/packages/9f/9d/f4b2170e8ff5d825cd4398856fee88f6c70c60bce0aa8411ed17c1e1b21f/pywin32-223-cp36-cp36m-win_amd64.whl (9.0MB)
    100% |████████████████████████████████| 9.0MB 1.1MB/s
Installing collected packages: pywin32, pypiwin32
Successfully installed pypiwin32-223 pywin32-223
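Before launching the crawl again, it can save time to check that the modules involved are importable. The sketch below is one possible way to do that with the standard library; `missing_modules` is a hypothetical helper, and the module list simply reflects the packages mentioned in this post.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    # find_spec returns None when a top-level module is not installed.
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # win32api is the module the failed run complained about;
    # twisted and scrapy were installed in section 3.
    print(missing_modules(["twisted", "scrapy", "win32api"]))
```

An empty list suggests the imports will succeed. The check only looks for top-level modules and does not import them, so it cannot catch errors that occur while a module loads.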

PS D:\pycharm\labs> scrapy crawl quotes

2018-09-23 11:53:05 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: tutorial)
2018-09-23 11:53:05 [scrapy.utils.log] INFO: Versions: lxml 4.2.5.0, libxml2 2.9.5, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.5 (v3.6.5:f59c0932b4, Mar 28 2018, 17:00:18) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 18.0.0 (OpenSSL 1.1.0i 14 Aug 2018), cryptography 2.3.1, Platform Windows-10-10.0.17134-SP0
2018-09-23 11:53:05 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'tutorial', 'NEWSPIDER_MODULE': 'tutorial.spiders', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['tutorial.spiders']}
2018-09-23 11:53:06 [scrapy.middleware] INFO: Enabled extensions:
['scrapy.extensions.corestats.CoreStats',
 'scrapy.extensions.telnet.TelnetConsole',
 'scrapy.extensions.logstats.LogStats']
2018-09-23 11:53:06 [scrapy.middleware] INFO: Enabled downloader middlewares:
['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',
 'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',
 'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',
 'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',
 'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',
 'scrapy.downloadermiddlewares.retry.RetryMiddleware',
 'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',
 'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',
 'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',
 'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',
 'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',
 'scrapy.downloadermiddlewares.stats.DownloaderStats']
2018-09-23 11:53:06 [scrapy.middleware] INFO: Enabled spider middlewares:
['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',
 'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',
 'scrapy.spidermiddlewares.referer.RefererMiddleware',
 'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',
 'scrapy.spidermiddlewares.depth.DepthMiddleware']
2018-09-23 11:53:06 [scrapy.middleware] INFO: Enabled item pipelines:
[]
2018-09-23 11:53:06 [scrapy.core.engine] INFO: Spider opened
2018-09-23 11:53:06 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
2018-09-23 11:53:06 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-09-23 11:53:07 [scrapy.core.engine] DEBUG: Crawled (404)

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <title>Quotes to Scrape</title>
    <link rel="stylesheet" href="/static/bootstrap.min.css">
    <link rel="stylesheet" href="/static/main.css">
</head>
<body>
    <div class="container">
        <div class="row header-box">

The file is long, so only this short excerpt is shown.
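Rather than reading the whole saved file, a quick sanity check is to pull out just the page title. The sketch below does this with the standard library's html.parser; the class and function names are made up for illustration, and the sample string is only a shortened stand-in for a saved page.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        # Only the first <title> is recorded; later ones are ignored.
        if tag == "title" and self.title is None:
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def page_title(html_text):
    """Return the stripped title text, or None if there is no <title>."""
    parser = TitleParser()
    parser.feed(html_text)
    return parser.title.strip() if parser.title is not None else None


if __name__ == "__main__":
    # Shortened stand-in for the beginning of a saved page.
    sample = (
        '<!DOCTYPE html><html lang="en"><head><meta charset="UTF-8">'
        "<title>Quotes to Scrape</title></head>"
    )
    print(page_title(sample))
```

To run it against a real download, read one of the files written by the spider, for example quotes-1.html, as text and pass the contents to page_title.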

5.4. Uploading the example code

$ git commit -m "init scrapy tutorial."

[master b1d6e1d] init scrapy tutorial.

9 files changed, 259 insertions(+)

create mode 100644 .idea/vcs.xml

create mode 100644 scrapy.cfg

create mode 100644 tutorial/__init__.py

create mode 100644 tutorial/items.py

create mode 100644 tutorial/middlewares.py

create mode 100644 tutorial/pipelines.py

create mode 100644 tutorial/settings.py

create mode 100644 tutorial/spiders/__init__.py

create mode 100644 tutorial/spiders/quotes_spider.py

$ git push

Counting objects: 14, done.

Delta compression using up to 4 threads.

Compressing objects: 100% (12/12), done.

Writing objects: 100% (14/14), 4.02 KiB | 293.00 KiB/s, done.

Total 14 (delta 0), reused 0 (delta 0)

To https://github.com/timscm/myscrapy.git

c7e93fc..b1d6e1d master -> master
