Building Flink from Source (Windows Environment)

2021-07-10 11:10

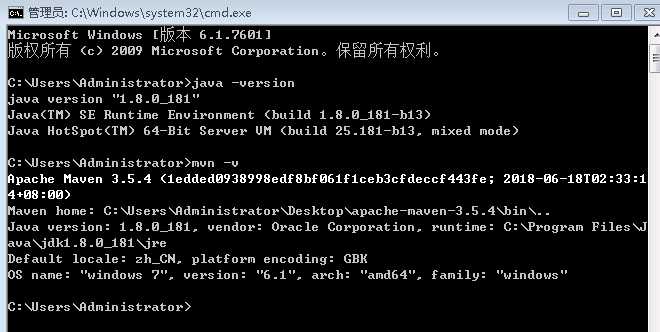

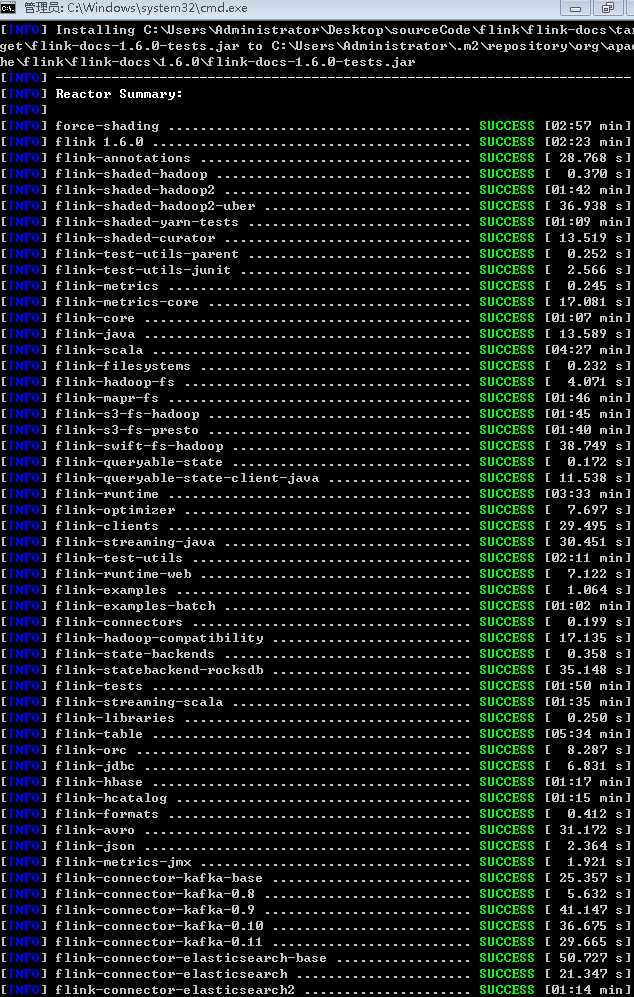

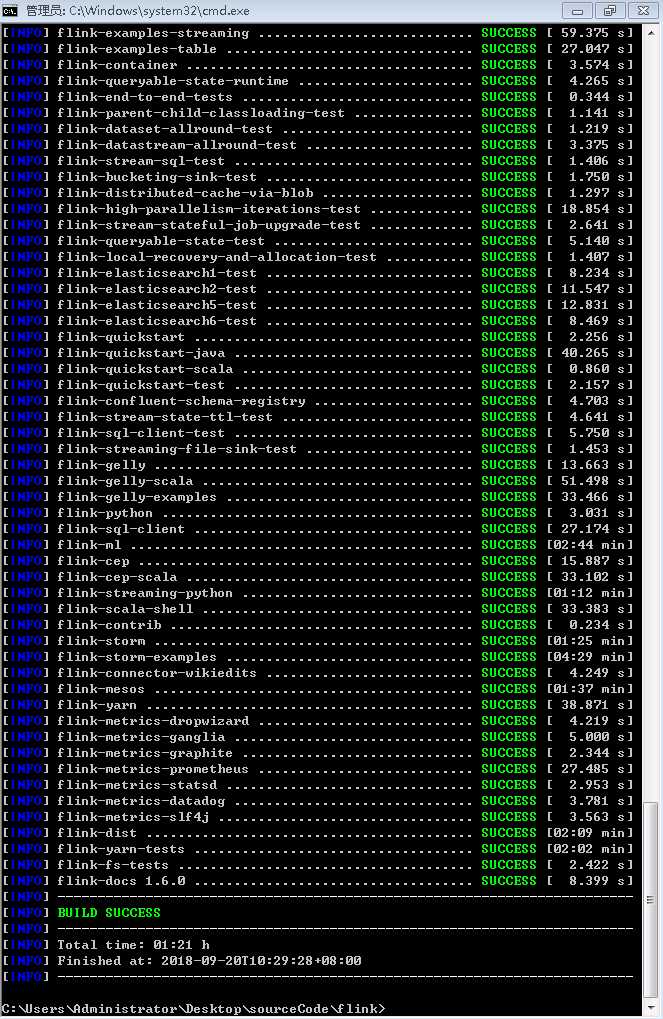

Original article: https://www.cnblogs.com/felixzh/p/9685529.html

Preface

I recently started tinkering with Flink. Before digging into the source code, the first step is to build it.

Build Environment

Windows 7, Java, Maven

Build Steps

https://ci.apache.org/projects/flink/flink-docs-release-1.6/start/building.html

I followed the official documentation, reproduced below.

Building Flink from Source

This page covers how to build Flink 1.6.1 from sources.

Build Flink

In order to build Flink you need the source code. Either download the source of a release or clone the git repository.

In addition you need Maven 3 and a JDK (Java Development Kit). Flink requires at least Java 8 to build.

NOTE: Maven 3.3.x can build Flink, but will not properly shade away certain dependencies. Maven 3.2.5 creates the libraries properly. To build unit tests use Java 8u51 or above to prevent failures in unit tests that use the PowerMock runner.

To clone from git, enter:

    git clone https://github.com/apache/flink

The simplest way of building Flink is by running:

    mvn clean install -DskipTests

This instructs Maven (mvn) to first remove all existing builds (clean) and then create a new Flink binary (install).

To speed up the build you can skip tests, QA plugins, and JavaDocs:

    mvn clean install -DskipTests -Dfast

The default build adds a Flink-specific JAR for Hadoop 2, to allow using Flink with HDFS and YARN.

Dependency Shading

Flink shades away some of the libraries it uses, in order to avoid version clashes with user programs that use different versions of these libraries. Among the shaded libraries are Google Guava, Asm, Apache Curator, Apache HTTP Components, Netty, and others.

The dependency shading mechanism was recently changed in Maven and requires users to build Flink slightly differently, depending on their Maven version:

Maven 3.0.x, 3.1.x, and 3.2.x: It is sufficient to call mvn clean install -DskipTests in the root directory of Flink code base.

Maven 3.3.x: The build has to be done in two steps: first in the base directory, then in the distribution project:

    mvn clean install -DskipTests
    cd flink-dist
    mvn clean install

Note: To check your Maven version, run mvn --version.

Hadoop Versions

Info: Most users do not need to do this manually. The download page contains binary packages for common Hadoop versions.

Flink has dependencies to HDFS and YARN which are both dependencies from Apache Hadoop. There exist many different versions of Hadoop (from both the upstream project and the different Hadoop distributions). If you are using a wrong combination of versions, exceptions can occur.

Hadoop is only supported from version 2.4.0 upwards. You can also specify a specific Hadoop version to build against:

    mvn clean install -DskipTests -Dhadoop.version=2.6.1

Vendor-specific Versions (specifying a Hadoop vendor)

To build Flink against a vendor-specific Hadoop version, issue the following command:

    mvn clean install -DskipTests -Pvendor-repos -Dhadoop.version=2.6.1-cdh5.0.0

The -Pvendor-repos option activates a Maven build profile that includes the repositories of popular Hadoop vendors such as Cloudera, Hortonworks, or MapR.

Scala Versions

Info: Users that purely use the Java APIs and libraries can ignore this section.

Flink has APIs, libraries, and runtime modules written in Scala. Users of the Scala API and libraries may have to match the Scala version of Flink with the Scala version of their projects (because Scala is not strictly backwards compatible).

Flink 1.4 currently builds only with Scala version 2.11.

We are working on supporting Scala 2.12, but certain breaking changes in Scala 2.12 make this a more involved effort. Please check out this JIRA issue for updates.

Encrypted File Systems

If your home directory is encrypted you might encounter a java.io.IOException: File name too long exception. Some encrypted file systems, like encfs used by Ubuntu, do not allow long filenames, which is the cause of this error.

The workaround is to add:

    <args>
        <arg>-Xmax-classfile-name</arg>
        <arg>128</arg>
    </args>

in the compiler configuration of the pom.xml file of the module causing the error. For example, if the error appears in the flink-yarn module, the above code should be added under the <configuration> tag of scala-maven-plugin. See this issue for more information.
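The Maven-version rule from the Dependency Shading section can be sketched as a small shell helper. This is only an illustration under stated assumptions: `flink_build_steps` is a hypothetical name (not part of Flink or Maven), it assumes a POSIX shell such as Git Bash on Windows, and it only prints the commands quoted in the documentation rather than running them.

```shell
#!/bin/sh
# Sketch only: print (do not run) the build commands that the quoted
# documentation prescribes for a given Maven version.
# flink_build_steps is a hypothetical helper, not part of Flink or Maven.
flink_build_steps() {
    case "$1" in
        3.3.*)
            # Maven 3.3.x: build in the base directory first,
            # then once more in the distribution project.
            printf '%s\n' \
                'mvn clean install -DskipTests' \
                'cd flink-dist' \
                'mvn clean install'
            ;;
        3.0.*|3.1.*|3.2.*)
            # Older Maven 3 releases: a single call in the root directory.
            printf '%s\n' 'mvn clean install -DskipTests'
            ;;
        *)
            # Versions the quoted documentation does not mention.
            echo "Maven $1 is not covered by the quoted documentation" >&2
            return 1
            ;;
    esac
}

# Example: show the steps for two Maven versions.
flink_build_steps 3.2.5
flink_build_steps 3.3.9
```

The version argument would typically be read off the first line of `mvn --version`. Printing the steps instead of executing them keeps the sketch safe to try before committing to a long build.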
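The Hadoop version rules (nothing older than 2.4.0, and -Pvendor-repos for vendor builds) can be put together in a similar way. Again this is a hedged sketch: `flink_hadoop_build_cmd` is a hypothetical helper, the input is assumed to start with a numeric major.minor, and treating any version that contains a hyphen (such as 2.6.1-cdh5.0.0) as vendor-specific is my own assumption, not something the documentation states.

```shell
#!/bin/sh
# Sketch only: compose (do not run) the Maven command for a Hadoop version.
# flink_hadoop_build_cmd is a hypothetical helper, not part of Flink.
flink_hadoop_build_cmd() {
    version="$1"
    major=${version%%.*}   # text before the first dot, e.g. "2"
    rest=${version#*.}     # text after the first dot, e.g. "6.1-cdh5.0.0"
    minor=${rest%%.*}      # e.g. "6"

    # The quoted documentation supports Hadoop 2.4.0 and newer only.
    if [ "$major" -lt 2 ] || { [ "$major" -eq 2 ] && [ "$minor" -lt 4 ]; }; then
        echo "Hadoop $version is older than 2.4.0" >&2
        return 1
    fi

    case "$version" in
        *-*)
            # Assumption: a hyphenated suffix marks a vendor build,
            # so the vendor repository profile is enabled.
            echo "mvn clean install -DskipTests -Pvendor-repos -Dhadoop.version=$version"
            ;;
        *)
            echo "mvn clean install -DskipTests -Dhadoop.version=$version"
            ;;
    esac
}

# Example: an upstream version and a vendor version.
flink_hadoop_build_cmd 2.6.1
flink_hadoop_build_cmd 2.6.1-cdh5.0.0
```

The two example calls reproduce the commands shown in the Hadoop Versions and Vendor-specific Versions sections.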

Article link: http://soscw.com/index.php/essay/103211.html