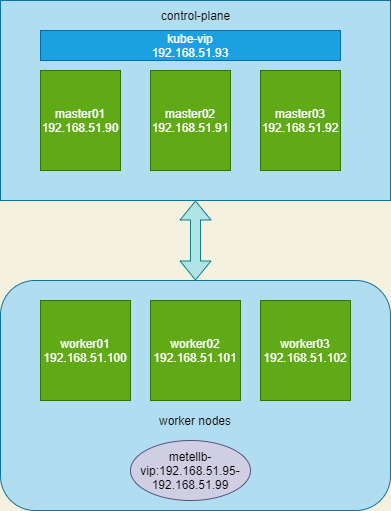

Building a highly available Kubernetes cluster with kube-vip, with MetalLB as the load balancer for worker nodes

2021-07-23 16:55

Reference for this article (CNCF): https://mp.weixin.qq.com/s?__biz=MzI5ODk5ODI4Nw==&mid=2247502809&idx=4&sn=df581ea3008dffdd2cc3a035934a4ffd&chksm=ec9fc4b9dbe84daf224aa558315690df554fe87d60f482f8d318417b069a1a51c704ca576fc2&mpshare=1&scene=1&srcid=06176PXuhkB6GEAI9yteZNWt&sharer_sharetime=1623904467161&sharer_shareid=65c2b33f0edcd044f978b8c04e432df8&version=3.1.8.3108&platform=win#rd

Original post: https://www.cnblogs.com/lzj-blog/p/14966342.html

kube-vip gives control-plane nodes a Kubernetes-native HA load balancer and replaces the usual haproxy plus keepalived pair.

OS version: Ubuntu 18.04

Reference: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

Docker is used as the container runtime. Install the dependencies, add the GPG key and the Aliyun repository, then install a pinned docker-ce version:

sudo apt-get update
sudo apt-get install -y apt-transport-https ca-certificates curl gnupg lsb-release
curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
sudo apt update
apt-cache showpkg docker-ce
apt install -y docker-ce=5:19.03.15~3-0~ubuntu-bionic docker-ce-cli=5:19.03.15~3-0~ubuntu-bionic
docker version
sudo systemctl enable docker --now

Disable the firewall and swap:

sudo ufw disable
sudo swapoff -a

On Linux, nftables can now replace the kernel's iptables subsystem. The iptables tool can act as a compatibility layer that behaves like iptables but actually configures nftables. The nftables backend is not compatible with the current kubeadm packages: it produces duplicate firewall rules and breaks kube-proxy. If the iptables tool on your system uses the nftables backend, switch it to "legacy" mode to avoid these problems. This is the default on at least Debian 10 (Buster), Ubuntu 19.04, Fedora 29, and newer releases. RHEL 8 cannot switch to legacy mode and is therefore incompatible with the current kubeadm packages.

Set the hostname on each node (master01 here, master02 and master03 on the other nodes):

sudo hostnamectl set-hostname master01

Install kubelet, kubeadm, and kubectl, then check the versions:

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
sudo apt install kubelet=1.20.0-00 kubeadm=1.20.0-00 kubectl=1.20.0-00 -y
kubectl version
kubeadm version
kubelet --version

Enable kubectl completion and add an alias:

apt install -y bash-completion
source /usr/share/bash-completion/bash_completion
echo 'source <(kubectl completion bash)' >>~/.bashrc
kubectl completion bash >/etc/bash_completion.d/kubectl
echo 'alias k=kubectl' >>~/.bashrc

That completes the base system configuration. The next step is to deploy the Kubernetes cluster with kube-vip.

References: https://kube-vip.io/hybrid/static/ and https://kube-vip.io/hybrid/

kubeadm init flag reference: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-init/

kubeadm init --apiserver-advertise-address 192.168.51.90 --apiserver-bind-port 6443 --pod-network-cidr 172.16.0.0/16 --upload-certs --image-repository registry.aliyuncs.com/google_containers

Plan the --pod-network-cidr value up front and set it at init time. Otherwise /etc/kubernetes/manifests/kube-controller-manager.yaml has to be edited later to add the CIDR flags.

Installation is complete once the success message from kubeadm init appears. Check that the pod network setting took effect:

kubectl cluster-info dump | grep -m 1 cluster-cidr

After the base system setup on master02 and master03, run the control-plane join command that kubeadm init printed on master01:

kubeadm join 192.168.51.93:6443 --token 7fjfc3.fgxtoz2mxbe87nbg

When it finishes, both nodes have joined the cluster.

Because kube-vip is installed as a static pod, master02 and master03 each need their own kube-vip.yaml in /etc/kubernetes/manifests/.

Once the file is in place, kubelet should notice the change in the directory and create the pod automatically. If it does not, run:

kubectl apply -f /etc/kubernetes/manifests/kube-vip.yaml

The master nodes are now installed, but the cluster will still report NotReady until a CNI plugin such as flannel, calico, or weave is installed. This step is simple: apply the official manifest.

https://raw.githubusercontent.com/coreos/flannel/

See the latest releases at https://github.com/flannel-io/flannel/tags

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.14.0/Documentation/kube-flannel.yml

After flannel is installed, the nodes become Ready. Remove the master taint so that workloads can be scheduled on these nodes:

kubectl taint node --all node-role.kubernetes.io/master-

MetalLB installation reference: https://metallb.universe.tf/installation/

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests/namespace.yaml
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests/metallb.yaml

MetalLB configuration reference: https://metallb.universe.tf/configuration/

This experiment uses the layer 2 configuration; only the addresses range needs to be changed.

kubectl create deploy nginx --image nginx
kubectl expose deploy --port 80 nginx --type LoadBalancer

The service/nginx entry in the service list now shows the external IP 192.168.51.95.


kube-vip has two modes:

1. ARP (layer 2)

2. BGP

Here kube-vip runs as a static pod, so every master node needs a kube-vip.yaml file in /etc/kubernetes/manifests.

In testing, latency was 0.2-0.4 ms in ARP mode and 0.02-0.06 ms in BGP mode, so this setup uses BGP.

Simple topology

Kubernetes installation: system preparation

Infrastructure

kube-vip version: docker.io/plndr/kube-vip:0.3.1

Docker version: 19.03.15

CNI version: flannel v0.14.0

MetalLB version: v0.10.2

| Node | Address |
| --- | --- |
| kube-vip | 192.168.51.93 |
| master01 | 192.168.51.90 |
| master02 | 192.168.51.91 |
| master03 | 192.168.51.92 |
| metallb-vip | 192.168.51.95-192.168.51.99 |

Base system configuration

Install the container runtime

Remove old versions

sudo apt-get remove docker docker-engine docker.io containerd runc -y

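Install the dependency packages

Add the official GPG key

Add the Aliyun stable repository

List the available versions and install a pinned docker-ce version

Check that the installation succeeded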

root@master01:~# docker version
Client: Docker Engine - Community
 Version: 19.03.15
 API version: 1.40
 Go version: go1.13.15
 Git commit: 99e3ed8919
 Built: Sat Jan 30 03:16:51 2021
 OS/Arch: linux/amd64
 Experimental: false

Server: Docker Engine - Community
 Engine:
  Version: 19.03.15
  API version: 1.40 (minimum version 1.12)
  Go version: go1.13.15
  Git commit: 99e3ed8919
  Built: Sat Jan 30 03:15:20 2021
  OS/Arch: linux/amd64
  Experimental: false
 containerd:
  Version: 1.4.6
  GitCommit: d71fcd7d8303cbf684402823e425e9dd2e99285d
 runc:
  Version: 1.0.0-rc95
  GitCommit: b9ee9c6314599f1b4a7f497e1f1f856fe433d3b7
 docker-init:
  Version: 0.18.0
  GitCommit: fec3683

Configure Docker

# Kubernetes recommends the systemd cgroup driver for Docker. Leaving it unchanged also works, but kubeadm init will then print a warning.

# Configure the Docker daemon

cat
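The heredoc that followed `cat` did not survive extraction. As a minimal sketch of what this step usually looks like, the block below writes a `daemon.json` that selects the systemd cgroup driver. The log and storage options are assumptions taken from common Kubernetes setups, not from the original post, and `DOCKER_CONF_DIR` and `write_docker_daemon_json` are hypothetical names. By default it writes to a scratch directory so it can be tried safely.

```shell
#!/usr/bin/env bash
# Sketch only: write a Docker daemon.json that selects the systemd cgroup driver.
# DOCKER_CONF_DIR is a hypothetical knob; set it to /etc/docker on a real node.

write_docker_daemon_json() {
  local dir="$1"
  mkdir -p "$dir"
  # The log and storage settings below are assumed defaults, not from the article.
  cat >"$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": { "max-size": "100m" },
  "storage-driver": "overlay2"
}
EOF
}

DOCKER_CONF_DIR="${DOCKER_CONF_DIR:-$(mktemp -d)}"
write_docker_daemon_json "$DOCKER_CONF_DIR"
echo "wrote $DOCKER_CONF_DIR/daemon.json"

# On a real node, apply the change with:
#   sudo systemctl daemon-reload && sudo systemctl restart docker
```

After a restart, `docker info` should report the cgroup driver in use.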

Base configuration

Disable the firewall and swap

Comment out the swap line:

cat /etc/fstab

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a

# device; this may be used with UUID= as a more robust way to name devices

# that works even if disks are added and removed. See fstab(5).

#

#
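The fstab listing above is cut off before the swap entry. One way to comment that entry out without opening an editor is sketched below. It assumes GNU sed, and `comment_out_swap` is a hypothetical helper name. The demo runs against a scratch file rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# Sketch only: comment out active swap entries in an fstab-style file (GNU sed assumed).

comment_out_swap() {
  # Skip lines that are already comments; prefix '#' to lines with a "swap" field.
  sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Dry run against a scratch file with one root entry and one swap entry.
demo="$(mktemp)"
printf '%s\n' \
  'UUID=aaaa / ext4 defaults 0 0' \
  '/swap.img none swap sw 0 0' >"$demo"
comment_out_swap "$demo"
cat "$demo"

# On a real node (after taking a backup):
#   sudo swapoff -a && sudo cp /etc/fstab /etc/fstab.bak
#   then run comment_out_swap as root against /etc/fstab
```

Commenting the entry keeps swap disabled across reboots, which `swapoff -a` alone does not.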

Let iptables see bridged traffic
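The command body for this step was lost in extraction. A minimal sketch, assuming the settings from the Kubernetes install-kubeadm guide (load `br_netfilter` and enable the two bridge sysctls), is given here. `TARGET_ROOT` and `write_bridge_sysctl` are hypothetical names; the default target is a scratch directory so nothing on the host changes.

```shell
#!/usr/bin/env bash
# Sketch only: write the module and sysctl files that let iptables see bridged traffic.
# TARGET_ROOT is a hypothetical knob; use TARGET_ROOT=/ as root on a real node.

write_bridge_sysctl() {
  local root="$1"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  echo br_netfilter >"$root/etc/modules-load.d/k8s.conf"
  cat >"$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

TARGET_ROOT="${TARGET_ROOT:-$(mktemp -d)}"
write_bridge_sysctl "$TARGET_ROOT"
cat "$TARGET_ROOT/etc/sysctl.d/k8s.conf"

# On a real node, load the module and apply the settings:
#   sudo modprobe br_netfilter && sudo sysctl --system
```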

cat

Make sure the iptables tooling does not use the nftables backend

update-alternatives --set iptables /usr/sbin/iptables-legacy

update-alternatives --set ip6tables /usr/sbin/ip6tables-legacy

update-alternatives --set arptables /usr/sbin/arptables-legacy

update-alternatives --set ebtables /usr/sbin/ebtables-legacy
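Before or after switching alternatives, it can help to confirm which backend the iptables binary reports. The sketch below classifies the string printed by `iptables --version`. `iptables_backend` is a hypothetical helper, and the sample strings are illustrative inputs rather than output captured from the article's hosts.

```shell
#!/usr/bin/env bash
# Sketch only: classify an `iptables --version` string by backend.

iptables_backend() {
  case "$1" in
    *nf_tables*) echo nft ;;
    *legacy*)    echo legacy ;;
    *)           echo unknown ;;   # older releases print no backend suffix
  esac
}

# Illustrative inputs.
iptables_backend "iptables v1.8.4 (nf_tables)"
iptables_backend "iptables v1.8.4 (legacy)"

# On a real node:
#   iptables_backend "$(iptables --version)"
```

A result of `nft` means the update-alternatives commands above still need to be applied.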

Set the hostname and update hosts

sudo vi /etc/hosts

192.168.51.90 master01

192.168.51.91 master02

192.168.51.92 master03

192.168.51.93 kube-vip
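Editing /etc/hosts by hand on three nodes is easy to get wrong, so the same entries can be appended with a small idempotent helper. This is a sketch: `add_host_entry` is a hypothetical function, and the demo writes to a scratch file instead of /etc/hosts.

```shell
#!/usr/bin/env bash
# Sketch only: append "ip name" to a hosts-style file unless that exact line exists.

add_host_entry() {
  local file="$1" ip="$2" name="$3"
  grep -qxF "$ip $name" "$file" 2>/dev/null || printf '%s %s\n' "$ip" "$name" >>"$file"
}

hosts_file="$(mktemp)"
while read -r ip name; do
  add_host_entry "$hosts_file" "$ip" "$name"
done <<'EOF'
192.168.51.90 master01
192.168.51.91 master02
192.168.51.92 master03
192.168.51.93 kube-vip
EOF

# A second call with an existing entry is a no-op.
add_host_entry "$hosts_file" 192.168.51.90 master01
cat "$hosts_file"
```

On a real node the first argument would be /etc/hosts and the script would run as root.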

Install kubeadm, kubectl, and kubelet

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

Verify the installation

Enable command completion

Alias kubectl

echo 'complete -F __start_kubectl k' >>~/.bashrc

Initialize the Kubernetes master nodes and deploy kube-vip

Initialize the master01 node

1. Add the kube-vip manifest on master01

export VIP=192.168.51.93

export INTERFACE=lo

docker pull docker.io/plndr/kube-vip:0.3.1

alias kube-vip="docker run --network host --rm plndr/kube-vip:0.3.1"

kube-vip manifest pod --interface $INTERFACE --vip $VIP --controlplane --services --bgp --localAS 65000 --bgpRouterID 192.168.51.90 --bgppeers 192.168.51.91:65000::false,192.168.51.92:65000::false |tee /etc/kubernetes/manifests/kube-vip.yaml
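Each master runs the same manifest command with its own router ID and with the other two masters as BGP peers. The `--bgppeers` value can be derived instead of typed by hand, as sketched below. `bgp_peers` is a hypothetical helper, and the `ip:as::false` peer format is copied from the command above.

```shell
#!/usr/bin/env bash
# Sketch only: build the --bgppeers value for one master from the full master list.

# bgp_peers SELF_IP AS IP... prints every IP except SELF_IP as ip:AS::false, comma-separated.
bgp_peers() {
  local self="$1" as="$2" peers="" ip
  shift 2
  for ip in "$@"; do
    if [ "$ip" != "$self" ]; then
      peers="${peers:+$peers,}${ip}:${as}::false"
    fi
  done
  printf '%s\n' "$peers"
}

MASTERS=(192.168.51.90 192.168.51.91 192.168.51.92)

# Value for master01; pass 192.168.51.91 or 192.168.51.92 on the other nodes.
bgp_peers 192.168.51.90 65000 "${MASTERS[@]}"
```

The same IP passed as `SELF_IP` is also the value used for `--bgpRouterID` on that node.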

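2. Initialize master01 with kubeadm

If --pod-network-cidr is not set at init time, the flags --allocate-node-cidrs=true --cluster-cidr=10.244.0.0/16 have to be added to /etc/kubernetes/manifests/kube-controller-manager.yaml afterwards.

[addons] Applied essential addon: CoreDNS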

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.51.93:6443 --token 7fjfc3.fgxtoz2mxbe87nbg --discovery-token-ca-cert-hash sha256:2f98bba56738c501d4229a11226119da8b8e568a5ef7679091cdf614128c855e --control-plane --certificate-key b8bf4f98b5403555eff663acb3d6b7374703fc37b4d199ccadde08aabe36ba6f

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.51.93:6443 --token 7fjfc3.fgxtoz2mxbe87nbg --discovery-token-ca-cert-hash sha256:2f98bba56738c501d4229a11226119da8b8e568a5ef7679091cdf614128c855e
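The join commands in this output point at 192.168.51.93:6443, the kube-vip address, which suggests the cluster was initialized with the VIP as its control-plane endpoint. The sketch below assembles an init command line on that basis. `--control-plane-endpoint` is a real kubeadm flag, but its use here is an assumption inferred from the output, not something the article shows, and `kubeadm_init_args` is a hypothetical helper. The block only prints the command and does not run kubeadm.

```shell
#!/usr/bin/env bash
# Sketch only: print a kubeadm init command whose API endpoint is the kube-vip address.
# Assumption: --control-plane-endpoint was used; the article's own command omits it.

VIP=192.168.51.93
NODE_IP=192.168.51.90
POD_CIDR=172.16.0.0/16

kubeadm_init_args() {
  printf '%s ' \
    init \
    --control-plane-endpoint "${VIP}:6443" \
    --apiserver-advertise-address "$NODE_IP" \
    --apiserver-bind-port 6443 \
    --pod-network-cidr "$POD_CIDR" \
    --upload-certs \
    --image-repository registry.aliyuncs.com/google_containers
}

echo "kubeadm $(kubeadm_init_args)"
```

If the token or certificate key has expired by the time another node joins, `kubeadm token create --print-join-command` and the `kubeadm init phase upload-certs --upload-certs` command mentioned in the output can regenerate them.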

Add the master02 and master03 nodes

kubeadm join 192.168.51.93:6443 --token 7fjfc3.fgxtoz2mxbe87nbg \
--discovery-token-ca-cert-hash sha256:2f98bba56738c501d4229a11226119da8b8e568a5ef7679091cdf614128c855e \
--control-plane --certificate-key b8bf4f98b5403555eff663acb3d6b7374703fc37b4d199ccadde08aabe36ba6f

master02:

export VIP=192.168.51.93

export INTERFACE=lo

docker pull docker.io/plndr/kube-vip:0.3.1

alias kube-vip="docker run --network host --rm plndr/kube-vip:0.3.1"

kube-vip manifest pod --interface $INTERFACE --vip $VIP --controlplane --services --bgp --localAS 65000 --bgpRouterID 192.168.51.91 --bgppeers 192.168.51.90:65000::false,192.168.51.92:65000::false |tee /etc/kubernetes/manifests/kube-vip.yaml

master03:

export VIP=192.168.51.93

export INTERFACE=lo

docker pull docker.io/plndr/kube-vip:0.3.1

alias kube-vip="docker run --network host --rm plndr/kube-vip:0.3.1"

kube-vip manifest pod --interface $INTERFACE --vip $VIP --controlplane --services --bgp --localAS 65000 --bgpRouterID 192.168.51.92 --bgppeers 192.168.51.90:65000::false,192.168.51.91:65000::false |tee /etc/kubernetes/manifests/kube-vip.yaml

Add the CNI plugin

This walkthrough uses v0.14.0, the latest release at the time of writing.
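One detail worth checking here: the stock kube-flannel.yml sets its "Network" to 10.244.0.0/16, while the cluster in this walkthrough was initialized with --pod-network-cidr 172.16.0.0/16. The two values normally need to agree. The sketch below rewrites the network in the manifest stream before applying it. `patch_flannel_network` is a hypothetical helper, the sample line mimics the manifest's net-conf.json entry, and the download itself is left in a comment.

```shell
#!/usr/bin/env bash
# Sketch only: rewrite the "Network" CIDR in a kube-flannel.yml stream read from stdin.

patch_flannel_network() {
  # $1 is the pod CIDR to substitute; '#' is the sed delimiter because CIDRs contain '/'.
  sed -E "s#(\"Network\":[[:space:]]*\")[^\"]+(\")#\1$1\2#"
}

# Illustrative input line in the shape used by the manifest's net-conf.json.
sample='      "Network": "10.244.0.0/16",'
printf '%s\n' "$sample" | patch_flannel_network 172.16.0.0/16

# Real use (network access and kubectl assumed):
#   curl -fsSL https://raw.githubusercontent.com/coreos/flannel/v0.14.0/Documentation/kube-flannel.yml \
#     | patch_flannel_network 172.16.0.0/16 | kubectl apply -f -
```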

MetalLB deployment

Deployment

1. Create the metallb-system namespace

apiVersion: v1
kind: Namespace
metadata:
  name: metallb-system
  labels:
    app: metallb

2. Add the DaemonSet and permissions

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  labels:
    app: metallb
  name: controller
  namespace: metallb-system
spec:
  allowPrivilegeEscalation: false
  allowedCapabilities: []
  allowedHostPaths: []
  defaultAddCapabilities: []
  defaultAllowPrivilegeEscalation: false
  fsGroup:
    ranges:
    - max: 65535
      min: 1
    rule: MustRunAs
  hostIPC: false
  hostNetwork: false
  hostPID: false
  privileged: false
  readOnlyRootFilesystem: true
  requiredDropCapabilities:
  - ALL
  runAsUser:
    ranges:
    - max: 65535
      min: 1
    rule: MustRunAs
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    ranges:
    - max: 65535
      min: 1
    rule: MustRunAs
  volumes:
  - configMap
  - secret
  - emptyDir
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  labels:
    app: metallb
  name: speaker
  namespace: metallb-system
spec:
  allowPrivilegeEscalation: false
  allowedCapabilities:
  - NET_RAW
  allowedHostPaths: []
  defaultAddCapabilities: []
  defaultAllowPrivilegeEscalation: false
  fsGroup:
    rule: RunAsAny
  hostIPC: false
  hostNetwork: true
  hostPID: false
  hostPorts:
  - max: 7472
    min: 7472
  - max: 7946
    min: 7946
  privileged: true
  readOnlyRootFilesystem: true
  requiredDropCapabilities:
  - ALL
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - configMap
  - secret
  - emptyDir

---

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: metallb
  name: controller
  namespace: metallb-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: metallb
  name: speaker
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: metallb
  name: metallb-system:controller
rules:
- apiGroups:
  - ''
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - services/status
  verbs:
  - update
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - policy
  resourceNames:
  - controller
  resources:
  - podsecuritypolicies
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: metallb
  name: metallb-system:speaker
rules:
- apiGroups:
  - ''
  resources:
  - services
  - endpoints
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups: ["discovery.k8s.io"]
  resources:
  - endpointslices
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - policy
  resourceNames:
  - speaker
  resources:
  - podsecuritypolicies
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app: metallb
  name: config-watcher
  namespace: metallb-system
rules:
- apiGroups:
  - ''
  resources:
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app: metallb
  name: pod-lister
  namespace: metallb-system
rules:
- apiGroups:
  - ''
  resources:
  - pods
  verbs:
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app: metallb
  name: controller
  namespace: metallb-system
rules:
- apiGroups:
  - ''
  resources:
  - secrets
  verbs:
  - create
- apiGroups:
  - ''
  resources:
  - secrets
  resourceNames:
  - memberlist
  verbs:
  - list
- apiGroups:
  - apps
  resources:
  - deployments
  resourceNames:
  - controller
  verbs:
  - get

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: metallb
  name: metallb-system:controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metallb-system:controller
subjects:
- kind: ServiceAccount
  name: controller
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: metallb
  name: metallb-system:speaker
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metallb-system:speaker
subjects:
- kind: ServiceAccount
  name: speaker
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app: metallb
  name: config-watcher
  namespace: metallb-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: config-watcher
subjects:
- kind: ServiceAccount
  name: controller
- kind: ServiceAccount
  name: speaker
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app: metallb
  name: pod-lister
  namespace: metallb-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-lister
subjects:
- kind: ServiceAccount
  name: speaker
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app: metallb
  name: controller
  namespace: metallb-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: controller
subjects:
- kind: ServiceAccount
  name: controller

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: metallb
    component: speaker
  name: speaker
  namespace: metallb-system
spec:
  selector:
    matchLabels:
      app: metallb
      component: speaker
  template:
    metadata:
      annotations:
        prometheus.io/port: '7472'
        prometheus.io/scrape: 'true'
      labels:
        app: metallb
        component: speaker
    spec:
      containers:
      - args:
        - --port=7472
        - --config=config
        env:
        - name: METALLB_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: METALLB_HOST
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP
        - name: METALLB_ML_BIND_ADDR
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        # needed when another software is also using memberlist / port 7946
        # when changing this default you also need to update the container ports definition
        # and the PodSecurityPolicy hostPorts definition
        #- name: METALLB_ML_BIND_PORT
        #  value: "7946"
        - name: METALLB_ML_LABELS
          value: "app=metallb,component=speaker"
        - name: METALLB_ML_SECRET_KEY
          valueFrom:
            secretKeyRef:
              name: memberlist
              key: secretkey
        image: quay.io/metallb/speaker:v0.10.2
        name: speaker
        ports:
        - containerPort: 7472
          name: monitoring
        - containerPort: 7946
          name: memberlist-tcp
        - containerPort: 7946
          name: memberlist-udp
          protocol: UDP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_RAW
            drop:
            - ALL
          readOnlyRootFilesystem: true
      hostNetwork: true
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: speaker
      terminationGracePeriodSeconds: 2
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
        operator: Exists
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: metallb
    component: controller
  name: controller
  namespace: metallb-system
spec:
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: metallb
      component: controller
  template:
    metadata:
      annotations:
        prometheus.io/port: '7472'
        prometheus.io/scrape: 'true'
      labels:
        app: metallb
        component: controller
    spec:
      containers:
      - args:
        - --port=7472
        - --config=config
        env:
        - name: METALLB_ML_SECRET_NAME
          value: memberlist
        - name: METALLB_DEPLOYMENT
          value: controller
        image: quay.io/metallb/controller:v0.10.2
        name: controller
        ports:
        - containerPort: 7472
          name: monitoring
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - all
          readOnlyRootFilesystem: true
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534
      serviceAccountName: controller
      terminationGracePeriodSeconds: 0

3. Configure configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.51.95-192.168.51.99
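Since only the address range changes between environments, the ConfigMap can be generated from a single parameter. The sketch below prints the same layer 2 configuration for a given range. `metallb_l2_config` is a hypothetical helper, and piping the result to kubectl is left as a comment.

```shell
#!/usr/bin/env bash
# Sketch only: print the MetalLB layer 2 ConfigMap for a given address range.

metallb_l2_config() {
  # $1 is the pool range, for example 192.168.51.95-192.168.51.99
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - $1
EOF
}

metallb_l2_config 192.168.51.95-192.168.51.99

# Real use (kubectl assumed):
#   metallb_l2_config 192.168.51.95-192.168.51.99 | kubectl apply -f -
```

The range has to sit in the same layer 2 segment as the nodes and must not overlap the kube-vip address or the node addresses.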

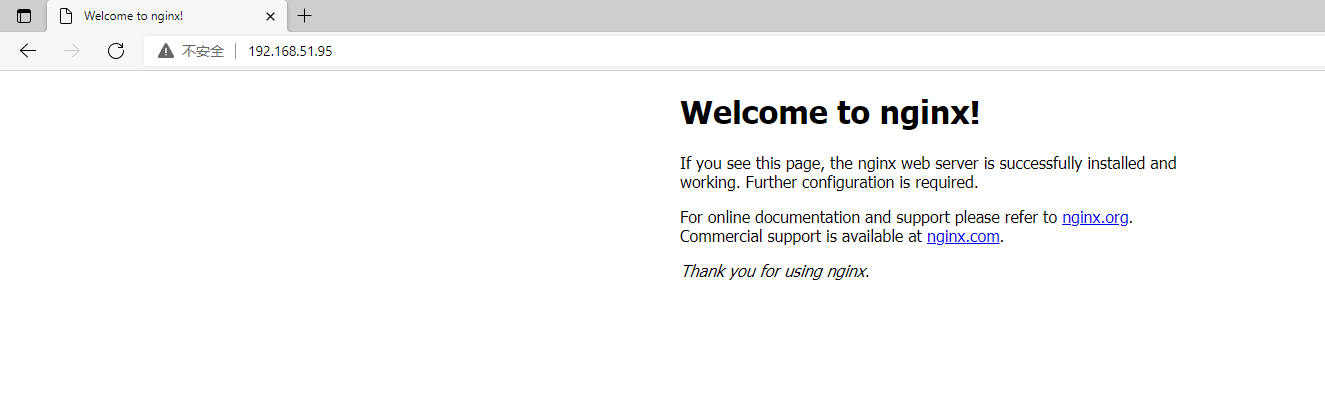

4. Test that a service gets an IP and is reachable

Deploy nginx

root@master01:~# kubectl create deploy nginx --image nginx

deployment.apps/nginx created

root@master01:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-6799fc88d8-22ppr 0/1 ContainerCreating 0 19s

root@master01:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-6799fc88d8-22ppr 1/1 Running 0 27s

Expose the deployment as a LoadBalancer service

root@master01:~# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-22ppr 1/1 Running 0 2m11s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1
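The listing above is cut off before the nginx service line. According to the article, the service received the external IP 192.168.51.95. A quick way to confirm that an assigned address really comes from the MetalLB pool is sketched below. `ip_to_int` and `ip_in_range` are hypothetical helpers that handle IPv4 only.

```shell
#!/usr/bin/env bash
# Sketch only: check whether an IPv4 address falls inside a "start-end" pool range.

ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

ip_in_range() {
  # $1 is the address, $2 is the range written as start-end
  local ip start end
  ip="$(ip_to_int "$1")"
  start="$(ip_to_int "${2%-*}")"
  end="$(ip_to_int "${2#*-}")"
  [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}

POOL=192.168.51.95-192.168.51.99
if ip_in_range 192.168.51.95 "$POOL"; then
  echo "in pool"
else
  echo "outside pool"
fi

# On a real cluster the address could come from:
#   kubectl get svc nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```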
