Training Your Own Dataset with TensorFlow.NET in C#

2021-01-19 02:40

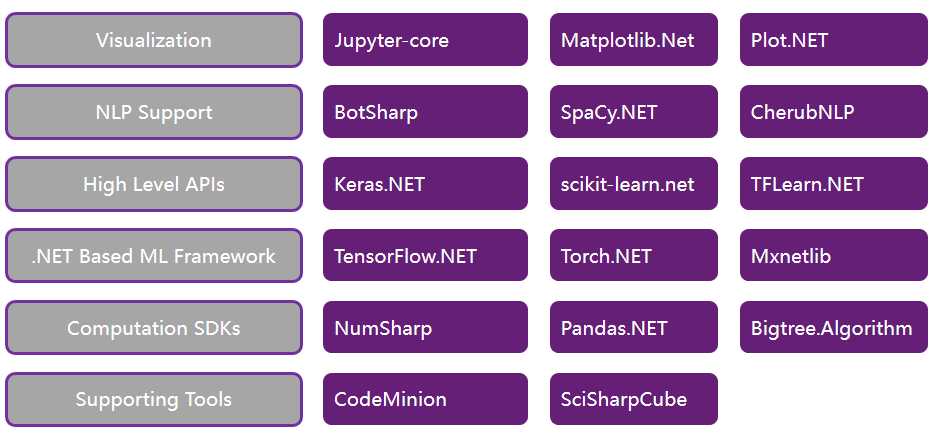

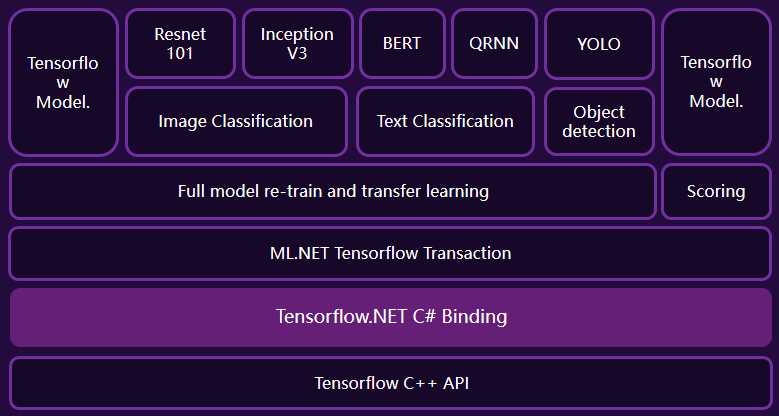

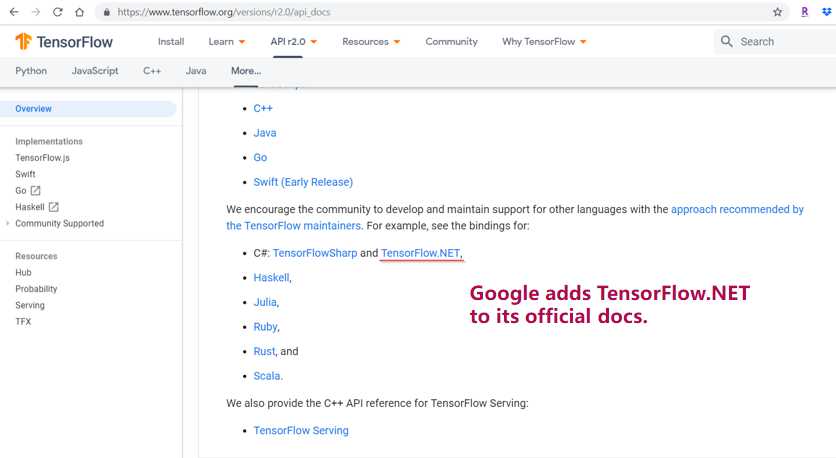

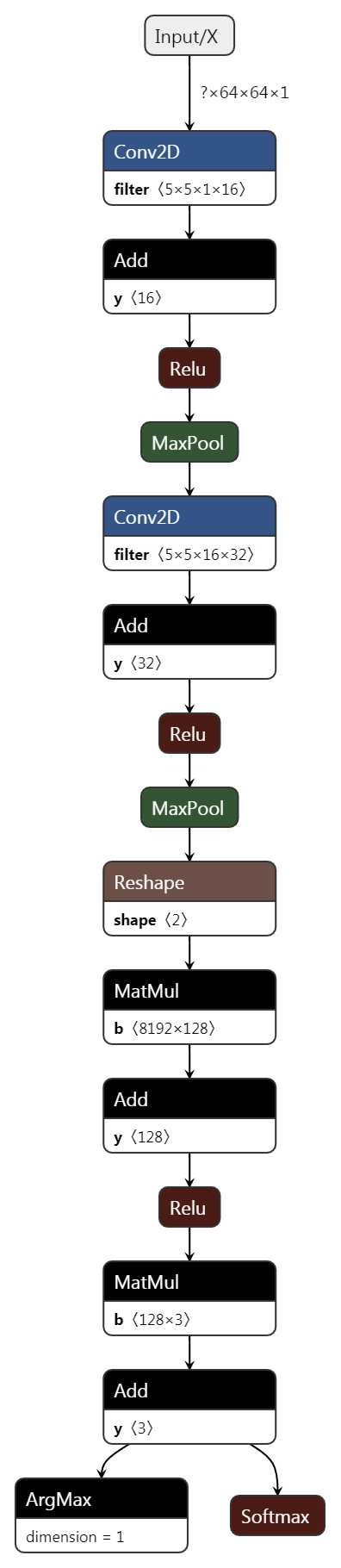

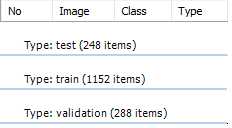

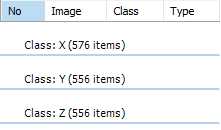

标签:com exp 开发者 检测 方法 import ESS epo ever 今天,我结合代码来详细介绍如何使用 SciSharp STACK 的 TensorFlow.NET 来训练CNN模型,该模型主要实现 图像的分类 ,可以直接移植该代码在 CPU 或 GPU 下使用,并针对你们自己本地的图像数据集进行训练和推理。TensorFlow.NET是基于 .NET Standard 框架的完整实现的TensorFlow,可以支持 SciSharp STACK:https://github.com/SciSharp TensorFlow.NET 是 SciSharp STACK 由于TensorFlow.NET在.NET平台的优秀性能,同时搭配SciSharp的NumSharp、SharpCV、Pandas.NET、Keras.NET、Matplotlib.Net等模块,可以完全脱离Python环境使用,目前已经被微软ML.NET官方的底层算法集成,并被谷歌写入TensorFlow官网教程推荐给全球开发者。 SciSharp 产品结构 微软 ML.NET底层集成算法 谷歌官方推荐.NET开发者使用 URL: https://www.tensorflow.org/versions/r2.0/api_docs 本文利用TensorFlow.NET构建简单的图像分类模型,针对工业现场的印刷字符进行单字符OCR识别,从工业相机获取原始大尺寸的图像,前期使用OpenCV进行图像预处理和字符分割,提取出单个字符的小图,送入TF进行推理,推理的结果按照顺序组合成完整的字符串,返回至主程序逻辑进行后续的生产线工序。 实际使用中,如果你们需要训练自己的图像,只需要把训练的文件夹按照规定的顺序替换成你们自己的图片即可。支持GPU或CPU方式,该项目的完整代码在GitHub如下: https://github.com/SciSharp/SciSharp-Stack-Examples/blob/master/src/TensorFlowNET.Examples/ImageProcessing/CnnInYourOwnData.cs 本项目的CNN模型主要由 2个卷积层&池化层 和 1个全连接层 组成,激活函数使用常见的Relu,是一个比较浅的卷积神经网络模型。其中超参数之一"学习率",采用了自定义的动态下降的学习率,后面会有详细说明。具体每一层的Shape参考下图: 为了模型测试的训练速度考虑,图像数据集主要节选了一小部分的OCR字符(X、Y、Z),数据集的特征如下: 分类数量:3 classes 【X/Y/Z】 图像尺寸:Width 64 × Height 64 图像通道:1 channel(灰度图) 数据集数量: train:X - 384pcs ; Y - 384pcs ; Z - 384pcs validation:X - 96pcs ; Y - 96pcs ; Z - 96pcs test:X - 96pcs ; Y - 96pcs ; Z - 96pcs 其它说明:数据集已经经过 随机 翻转/平移/缩放/镜像 等预处理进行增强 整体数据集情况如下图所示: .NET 框架:使用.NET Framework 4.7.2及以上,或者使用.NET CORE 2.2及以上 CPU 配置: Any CPU 或 X64 皆可 GPU 配置:需要自行配置好CUDA和环境变量,建议 CUDA v10.1,Cudnn v7.5 从NuGet安装必要的依赖项,主要是SciSharp相关的类库,如下图所示: 注意事项:尽量安装最新版本的类库,CV须使用 SciSharp 的 SharpCV 方便内部变量传递 引用命名空间,包括 NumSharp、Tensorflow 和 SharpCV ; ### 主逻辑: 准备数据 创建计算图 训练 预测 数据集地址:https://github.com/SciSharp/SciSharp-Stack-Examples/blob/master/data/data_CnnInYourOwnData.zip 数据集下载和解压代码 ( 部分封装的方法请参考 GitHub完整代码 ): 读取目录下的子文件夹名称,作为分类的字典,方便后面One-hot使用 从文件夹中读取train、validation、test的list,并随机打乱顺序。 读取目录 获得标签 随机乱序 Validation/Test数据集和标签一次性预先载入成NDArray格式。 构建CNN静态计算图,其中学习率每n轮Epoch进行1次递减。 Batch数据集的读取,采用了 SharpCV 
的cv2.imread,可以直接读取本地图像文件至NDArray,实现CV和Numpy的无缝对接; 使用.NET的异步线程安全队列BlockingCollection 在训练模型的时候,我们需要将样本从硬盘读取到内存之后,才能进行训练。我们在会话中运行多个线程,并加入队列管理器进行线程间的文件入队出队操作,并限制队列容量,主线程可以利用队列中的数据进行训练,另一个线程进行本地文件的IO读取,这样可以实现数据的读取和模型的训练是异步的,降低训练时间。 模型的保存,可以选择每轮训练都保存,或最佳训练模型保存 训练完成的模型对test数据集进行预测,并统计准确率 计算图中增加了一个提取预测结果Top-1的概率的节点,最后测试集预测的时候可以把详细的预测数据进行输出,方便实际工程中进行调试和优化。 本文主要是.NET下的TensorFlow在实际工业现场视觉检测项目中的应用,使用SciSharp的TensorFlow.NET构建了简单的CNN图像分类模型,该模型包含输入层、卷积与池化层、扁平化层、全连接层和输出层,这些层都是CNN分类模型的必要的层,针对工业现场的实际图像进行了分类,分类准确性较高。 完整代码可以直接用于大家自己的数据集进行训练,已经在工业现场经过大量测试,可以在GPU或CPU环境下运行,只需要更换tensorflow.dll文件即可实现训练环境的切换。 同时,训练完成的模型文件,可以使用 “CKPT+Meta” 或 冻结成“PB” 2种方式,进行现场的部署,模型部署和现场应用推理可以全部在.NET平台下进行,实现工业现场程序的无缝对接。摆脱了以往Python下 需要通过Flask搭建服务器进行数据通讯交互 的方式,现场部署应用时无需配置Python和TensorFlow的环境【无需对工业现场的原有PC升级安装一大堆环境】,整个过程全部使用传统的.NET的DLL引用的方式。 欢迎广大.NET开发者们加入TensorFlow.NET社区,SciSharp STACK QQ群:461855582 ,或有任何问题可以直接联系我的个人QQ:50705111 。 SciSharp STACK QQ群: 我的个人QQ: 在C#下使用TensorFlow.NET训练自己的数据集 标签:com exp 开发者 检测 方法 import ESS epo ever 在C#下使用TensorFlow.NET训练自己的数据集

.NET Framework 或 .NET CORE , TensorFlow.NET 为广大.NET开发者提供了完美的机器学习框架选择。什么是TensorFlow.NET?

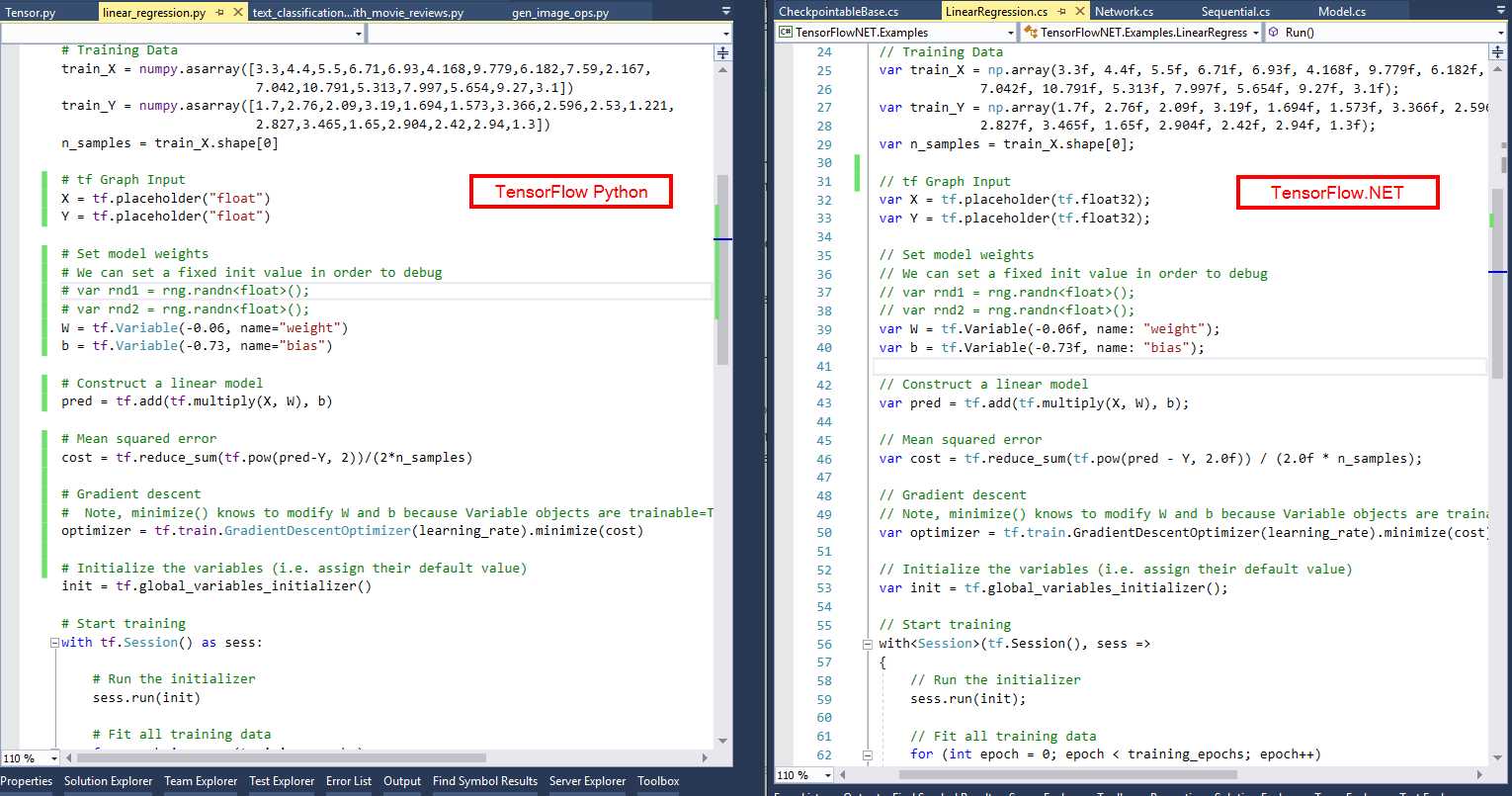

![]() 开源社区团队的贡献,其使命是打造一个完全属于.NET开发者自己的机器学习平台,特别对于C#开发人员来说,是一个“0”学习成本的机器学习平台,该平台集成了大量API和底层封装,力图使TensorFlow的Python代码风格和编程习惯可以无缝移植到.NET平台,下图是同样TF任务的Python实现和C#实现的语法相似度对比,从中读者基本可以略窥一二。

开源社区团队的贡献,其使命是打造一个完全属于.NET开发者自己的机器学习平台,特别对于C#开发人员来说,是一个“0”学习成本的机器学习平台,该平台集成了大量API和底层封装,力图使TensorFlow的Python代码风格和编程习惯可以无缝移植到.NET平台,下图是同样TF任务的Python实现和C#实现的语法相似度对比,从中读者基本可以略窥一二。

项目说明

模型介绍

数据集说明

代码说明

环境设置

类库和命名空间引用

```csharp
using NumSharp;
using NumSharp.Backends;
using NumSharp.Backends.Unmanaged;
using SharpCV;
using System;
using System.Collections;
using System.Collections.Generic;
using System.Diagnostics;
using System.IO;
using System.Linq;
using System.Runtime.CompilerServices;
using Tensorflow;
using static Tensorflow.Binding;
using static SharpCV.Binding;
using System.Collections.Concurrent;
using System.Threading.Tasks;
```

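Main logic structure

```csharp
public bool Run()
{
    PrepareData();   // prepare the data
    BuildGraph();    // build the static computation graph

    using (var sess = tf.Session())
    {
        Train(sess);
        Test(sess);
    }

    TestDataOutput(); // print per-image prediction details

    // The run counts as successful when test accuracy exceeds 98%
    return accuracy_test > 0.98;
}
```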

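Loading the dataset

Downloading and extracting the dataset

```csharp
// A github.com ".../blob/..." URL returns the HTML page for the file;
// the ".../raw/..." form returns the zip archive itself.
string url = "https://github.com/SciSharp/SciSharp-Stack-Examples/raw/master/data/data_CnnInYourOwnData.zip";
Directory.CreateDirectory(Name);
// Utility.Web.Download and Utility.Compress.UnZip are helpers from the example project (see GitHub)
Utility.Web.Download(url, Name, "data_CnnInYourOwnData.zip");
Utility.Compress.UnZip(Name + "\\data_CnnInYourOwnData.zip", Name);
```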
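To train on your own images, the folders only need the same layout that the code above relies on: `train`, `validation` and `test` folders, each holding one subfolder per class (here `X`, `Y`, `Z`). The sketch below counts the images per class in each split so you can check a replacement dataset before training. `DatasetLayout` and `CountImages` are hypothetical helpers, not part of the original project.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical helper: counts files per class folder for each split,
// assuming the layout <root>/<split>/<class>/<image file>.
public static class DatasetLayout
{
    public static readonly string[] Splits = { "train", "validation", "test" };

    public static Dictionary<string, Dictionary<string, int>> CountImages(string root)
    {
        var result = new Dictionary<string, Dictionary<string, int>>();
        foreach (var split in Splits)
        {
            string splitDir = Path.Combine(root, split);
            if (!Directory.Exists(splitDir))
                throw new DirectoryNotFoundException("Missing split folder: " + splitDir);

            // One subfolder per class; the folder name is the class label.
            result[split] = Directory.GetDirectories(splitDir)
                .ToDictionary(dir => Path.GetFileName(dir), dir => Directory.GetFiles(dir).Length);
        }
        return result;
    }
}
```

For the archive described in this article you would expect 384 images per class under `train` and 96 per class under `validation` and `test`.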

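Creating the label dictionary

```csharp
private void FillDictionaryLabel(string DirPath)
{
    // Each subfolder of DirPath is one class; its folder name becomes the class label
    string[] str_dir = Directory.GetDirectories(DirPath, "*", SearchOption.TopDirectoryOnly);
    int str_dir_num = str_dir.Length;
    if (str_dir_num > 0)
    {
        // The source listing is cut off at this point. The loop below is a reconstruction
        // that maps class index -> folder name (Int64 keys, as Dict_Label is used elsewhere).
        Dict_Label = new Dictionary<Int64, string>();
        for (int i = 0; i < str_dir_num; i++)
        {
            Dict_Label.Add(i, Path.GetFileName(str_dir[i]));
        }
    }
}
```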

Reading and shuffling the file lists

```csharp
// Collect every file under each split folder; each file's parent folder is its label
ArrayFileName_Train = Directory.GetFiles(Name + "\\train", "*.*", SearchOption.AllDirectories);
ArrayLabel_Train = GetLabelArray(ArrayFileName_Train);

ArrayFileName_Validation = Directory.GetFiles(Name + "\\validation", "*.*", SearchOption.AllDirectories);
ArrayLabel_Validation = GetLabelArray(ArrayFileName_Validation);

ArrayFileName_Test = Directory.GetFiles(Name + "\\test", "*.*", SearchOption.AllDirectories);
ArrayLabel_Test = GetLabelArray(ArrayFileName_Test);
```
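`Directory.GetFiles(..., "*.*", SearchOption.AllDirectories)` returns every file under a split folder, so a stray non-image file (for example a `Thumbs.db` left by Windows) would be picked up as a sample. A minimal sketch of filtering by extension follows; the helper name `GetImageFiles` and the accepted extensions are assumptions. JPEG only is assumed here because the validation and test images are decoded with `tf.image.decode_jpeg`.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

public static class ImageFiles
{
    // Assumed set of accepted extensions (case-insensitive); adjust to your dataset.
    private static readonly HashSet<string> Extensions =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { ".jpg", ".jpeg" };

    // Hypothetical helper: like Directory.GetFiles(dir, "*.*", AllDirectories),
    // but keeps only files whose extension is in the accepted set.
    public static string[] GetImageFiles(string dir) =>
        Directory.EnumerateFiles(dir, "*", SearchOption.AllDirectories)
                 .Where(f => Extensions.Contains(Path.GetExtension(f)))
                 .ToArray();
}
```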

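```csharp
// Map each file path to its class index, using the name of the file's parent folder
private Int64[] GetLabelArray(string[] FilesArray)
{
    Int64[] ArrayLabel = new Int64[FilesArray.Length];
    for (int i = 0; i < FilesArray.Length; i++)
    {
        string[] labels = FilesArray[i].Split('\\');
        string label = labels[labels.Length - 2]; // parent folder name, e.g. "X"
        ArrayLabel[i] = Dict_Label.Single(k => k.Value == label).Key;
    }
    return ArrayLabel;
}
```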
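GetLabelArray splits the path on a backslash, which ties it to Windows path separators. When running under .NET Core on Linux or macOS, the parent folder name can be read through `System.IO.Path` instead. A minimal sketch; `LabelPaths.LabelFromPath` is a hypothetical name.

```csharp
using System;
using System.IO;

public static class LabelPaths
{
    // Returns the name of the folder that directly contains the file,
    // e.g. ".../train/X/0001.jpg" -> "X", using the platform's separator rules.
    public static string LabelFromPath(string filePath)
    {
        string parent = Path.GetDirectoryName(filePath)
            ?? throw new ArgumentException("Path has no parent folder", nameof(filePath));
        return Path.GetFileName(parent);
    }
}
```

Inside GetLabelArray the lookup would then read `Dict_Label.Single(k => k.Value == LabelPaths.LabelFromPath(FilesArray[i])).Key`.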

```csharp
// Return shuffled copies of the file names and labels, keeping each file with its label
public (string[], Int64[]) ShuffleArray(int count, string[] images, Int64[] labels)
{
    var mylist = new List<int>();
    string[] new_images = new string[count];
    Int64[] new_labels = new Int64[count];
    Random r = new Random();

    // Pool of indices that have not been drawn yet
    for (int i = 0; i < count; i++)
    {
        mylist.Add(i);
    }

    // Draw a random remaining index for each output position
    for (int i = 0; i < count; i++)
    {
        int rand = r.Next(mylist.Count);
        new_images[i] = images[mylist[rand]];
        new_labels[i] = labels[mylist[rand]];
        mylist.RemoveAt(rand);
    }

    print("shuffle array list: " + count.ToString());
    return (new_images, new_labels);
}
```
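ShuffleArray is correct, but `RemoveAt` on the index pool makes it O(n²). A Fisher-Yates shuffle applies one random permutation to both arrays in place in O(n). This is a swapped-in technique rather than the article's code, sketched with a hypothetical `PairedShuffle` helper; passing a seeded `Random` makes runs reproducible.

```csharp
using System;

public static class PairedShuffle
{
    // Fisher-Yates: for i from n-1 down to 1, swap element i with a random j in [0, i].
    // The same swap is applied to both arrays so each file keeps its label.
    public static void Shuffle(string[] images, long[] labels, Random rng)
    {
        if (images.Length != labels.Length)
            throw new ArgumentException("images and labels must have the same length");

        for (int i = images.Length - 1; i > 0; i--)
        {
            int j = rng.Next(i + 1); // 0 <= j <= i
            (images[i], images[j]) = (images[j], images[i]);
            (labels[i], labels[j]) = (labels[j], labels[i]);
        }
    }
}
```

Unlike ShuffleArray it shuffles in place instead of returning new arrays, so a call would look like `PairedShuffle.Shuffle(ArrayFileName_Train, ArrayLabel_Train, rng);`.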

Preloading part of the dataset

```csharp
private void LoadImagesToNDArray()
{
    // Load labels: one-hot encode by picking rows of an identity matrix
    y_valid = np.eye(Dict_Label.Count)[new NDArray(ArrayLabel_Validation)];
    y_test = np.eye(Dict_Label.Count)[new NDArray(ArrayLabel_Test)];
    print("Load Labels To NDArray : OK!");

    // Load images into pre-allocated [N, img_h, img_w, n_channels] arrays
    x_valid = np.zeros(ArrayFileName_Validation.Length, img_h, img_w, n_channels);
    x_test = np.zeros(ArrayFileName_Test.Length, img_h, img_w, n_channels);
    LoadImage(ArrayFileName_Validation, x_valid, "validation");
    LoadImage(ArrayFileName_Test, x_test, "test");
    print("Load Images To NDArray : OK!");
}
```
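`np.eye(Dict_Label.Count)[new NDArray(labels)]` builds one-hot labels by using each class index to select a row of the identity matrix. The same idea in plain C# without NumSharp, as an illustrative sketch (`OneHot.Encode` is a hypothetical name): labels `[2, 0, 1]` with 3 classes become the rows `[0,0,1]`, `[1,0,0]`, `[0,1,0]`.

```csharp
using System;

public static class OneHot
{
    // Same result as np.eye(numClasses)[labels]: row i is identity row labels[i].
    public static float[,] Encode(long[] labels, int numClasses)
    {
        var result = new float[labels.Length, numClasses];
        for (int i = 0; i < labels.Length; i++)
        {
            long c = labels[i];
            if (c < 0 || c >= numClasses)
                throw new ArgumentOutOfRangeException(nameof(labels), "label " + c + " is out of range");
            result[i, (int)c] = 1f;
        }
        return result;
    }
}
```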

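```csharp
// Read each image file into slot i of the pre-allocated array b; c is only used for logging
private void LoadImage(string[] a, NDArray b, string c)
{
    for (int i = 0; i < a.Length; i++)
    {
        b[i] = ReadTensorFromImageFile(a[i]);
        Console.Write(".");
    }
    Console.WriteLine();
    Console.WriteLine("Load Images To NDArray: " + c);
}
```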

```csharp
// Decode one JPEG, resize it to img_h x img_w and normalize it, using a throwaway graph
private NDArray ReadTensorFromImageFile(string file_name)
{
    using (var graph = tf.Graph().as_default())
    {
        var file_reader = tf.read_file(file_name, "file_reader");
        var decodeJpeg = tf.image.decode_jpeg(file_reader, channels: n_channels, name: "DecodeJpeg");
        var cast = tf.cast(decodeJpeg, tf.float32);
        var dims_expander = tf.expand_dims(cast, 0); // add the batch dimension
        var resize = tf.constant(new int[] { img_h, img_w });
        var bilinear = tf.image.resize_bilinear(dims_expander, resize);
        var sub = tf.subtract(bilinear, new float[] { img_mean });
        var normalized = tf.divide(sub, new float[] { img_std }); // (x - mean) / std
        using (var sess = tf.Session(graph))
        {
            return sess.run(normalized);
        }
    }
}
```
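ReadTensorFromImageFile (and the `LoadImage` scope in the graph) casts pixels to float, resizes them to img_h × img_w, and then applies `(pixel - img_mean) / img_std`. The values of `img_mean` and `img_std` are not shown in this excerpt. The sketch below covers only the normalization step, not the bilinear resize; with hypothetical values mean 0 and std 255, 8-bit gray levels map into [0, 1].

```csharp
using System;

public static class PixelNormalization
{
    // Element-wise (x - mean) / std, the same arithmetic as tf.divide(tf.subtract(x, mean), std).
    public static float[] Normalize(byte[] pixels, float mean, float std)
    {
        if (std == 0f) throw new ArgumentException("std must be non-zero", nameof(std));
        var result = new float[pixels.Length];
        for (int i = 0; i < pixels.Length; i++)
            result[i] = (pixels[i] - mean) / std;
        return result;
    }
}
```

Because BuildGraph stores `img_mean` and `img_std` as named constants in the graph, an inference program can read them back and apply exactly the same normalization as training.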

Building the computation graph

```csharp
#region BuildGraph
public Graph BuildGraph()
{
    var graph = new Graph().as_default();

    tf_with(tf.name_scope("Input"), delegate
    {
        x = tf.placeholder(tf.float32, shape: (-1, img_h, img_w, n_channels), name: "X");
        y = tf.placeholder(tf.float32, shape: (-1, n_classes), name: "Y");
    });

    // 2 x (convolution + max pooling), then flatten and one fully connected layer
    var conv1 = conv_layer(x, filter_size1, num_filters1, stride1, name: "conv1");
    var pool1 = max_pool(conv1, ksize: 2, stride: 2, name: "pool1");
    var conv2 = conv_layer(pool1, filter_size2, num_filters2, stride2, name: "conv2");
    var pool2 = max_pool(conv2, ksize: 2, stride: 2, name: "pool2");
    var layer_flat = flatten_layer(pool2);
    var fc1 = fc_layer(layer_flat, h1, "FC1", use_relu: true);
    var output_logits = fc_layer(fc1, n_classes, "OUT", use_relu: false);

    // Some important parameters are saved with the graph so they are easy to load later
    var img_h_t = tf.constant(img_h, name: "img_h");
    var img_w_t = tf.constant(img_w, name: "img_w");
    var img_mean_t = tf.constant(img_mean, name: "img_mean");
    var img_std_t = tf.constant(img_std, name: "img_std");
    var channels_t = tf.constant(n_channels, name: "img_channels");

    // Learning rate decay: the rate is a variable that Train() updates with tf.assign
    gloabl_steps = tf.Variable(0, trainable: false);
    learning_rate = tf.Variable(learning_rate_base);

    // Graph nodes that preprocess the training images
    tf_with(tf.variable_scope("LoadImage"), delegate
    {
        decodeJpeg = tf.placeholder(tf.@byte, name: "DecodeJpeg");
        var cast = tf.cast(decodeJpeg, tf.float32);
        var dims_expander = tf.expand_dims(cast, 0);
        var resize = tf.constant(new int[] { img_h, img_w });
        var bilinear = tf.image.resize_bilinear(dims_expander, resize);
        var sub = tf.subtract(bilinear, new float[] { img_mean });
        normalized = tf.divide(sub, new float[] { img_std }, name: "normalized");
    });

    tf_with(tf.variable_scope("Train"), delegate
    {
        tf_with(tf.variable_scope("Loss"), delegate
        {
            loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels: y, logits: output_logits), name: "loss");
        });

        tf_with(tf.variable_scope("Optimizer"), delegate
        {
            optimizer = tf.train.AdamOptimizer(learning_rate: learning_rate, name: "Adam-op").minimize(loss, global_step: gloabl_steps);
        });

        tf_with(tf.variable_scope("Accuracy"), delegate
        {
            var correct_prediction = tf.equal(tf.argmax(output_logits, 1), tf.argmax(y, 1), name: "correct_pred");
            accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32), name: "accuracy");
        });

        tf_with(tf.variable_scope("Prediction"), delegate
        {
            cls_prediction = tf.argmax(output_logits, axis: 1, name: "predictions");
            // Softmax probabilities; the Top-1 value is read from this node at test time
            prob = tf.nn.softmax(output_logits, axis: 1, name: "prob");
        });
    });

    return graph;
}
#endregion
```
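The layer shapes follow from the 64 × 64 × 1 input and the two 2 × 2, stride-2 max-pooling layers. The filter sizes, filter counts, convolution strides and padding come from fields (`filter_size1`, `num_filters1`, `stride1`, ...) that this excerpt does not show, so the sketch below assumes stride-1 convolutions with TensorFlow's 'SAME' padding. Under that assumption, and with hypothetical 5 × 5 kernels and 32 filters in conv2, the spatial size goes 64 → 64 → 32 → 32 → 16, and FC1 receives 16 · 16 · 32 = 8192 values.

```csharp
using System;

public static class CnnShapes
{
    // Output size along one spatial dimension, using TensorFlow's padding rules:
    //   SAME  -> ceil(input / stride)
    //   VALID -> ceil((input - kernel + 1) / stride)
    public static int OutSize(int input, int kernel, int stride, bool samePadding)
    {
        int effective = samePadding ? input : input - kernel + 1;
        if (effective <= 0) throw new ArgumentException("kernel larger than input");
        return (effective + stride - 1) / stride; // integer ceiling division
    }

    // Flattened length after conv1 -> pool1 -> conv2 -> pool2 for a square input.
    // ASSUMPTION: stride-1 SAME convolutions and SAME pooling (not shown in the article).
    public static int FlattenedLength(int imageSize, int kernel1, int kernel2, int filters2)
    {
        int s = OutSize(imageSize, kernel1, 1, true); // conv1
        s = OutSize(s, 2, 2, true);                   // pool1: ksize 2, stride 2
        s = OutSize(s, kernel2, 1, true);             // conv2
        s = OutSize(s, 2, 2, true);                   // pool2: ksize 2, stride 2
        return s * s * filters2;
    }
}
```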

Training and saving the model

```csharp
#region Train
public void Train(Session sess)
{
    // Number of training iterations in each epoch
    var num_tr_iter = (ArrayLabel_Train.Length) / batch_size;

    var init = tf.global_variables_initializer();
    sess.run(init);

    var saver = tf.train.Saver(tf.global_variables(), max_to_keep: 10);

    path_model = Name + "\\MODEL";
    Directory.CreateDirectory(path_model);

    float loss_val = 100.0f;
    float accuracy_val = 0f;

    var sw = new Stopwatch();
    sw.Start();

    foreach (var epoch in range(epochs))
    {
        print($"Training epoch: {epoch + 1}");

        // Randomly shuffle the training data at the beginning of each epoch
        (ArrayFileName_Train, ArrayLabel_Train) = ShuffleArray(ArrayLabel_Train.Length, ArrayFileName_Train, ArrayLabel_Train);
        y_train = np.eye(Dict_Label.Count)[new NDArray(ArrayLabel_Train)];

        // Decay the learning rate every learning_rate_step epochs, never below learning_rate_min
        if (learning_rate_step != 0)
        {
            if ((epoch != 0) && (epoch % learning_rate_step == 0))
            {
                learning_rate_base = learning_rate_base * learning_rate_decay;
                if (learning_rate_base < learning_rate_min) { learning_rate_base = learning_rate_min; }
                sess.run(tf.assign(learning_rate, learning_rate_base));
            }
        }

        // Load local images asynchronously through a bounded queue to improve training efficiency
        var BlockC = new BlockingCollection<(NDArray c_x, NDArray c_y, int iter)>(TrainQueueCapa);
        var producer = Task.Run(() =>
        {
            try
            {
                foreach (var iteration in range(num_tr_iter))
                {
                    var start = iteration * batch_size;
                    var end = (iteration + 1) * batch_size;
                    (NDArray x_batch, NDArray y_batch) = GetNextBatch(sess, ArrayFileName_Train, y_train, start, end);
                    BlockC.Add((x_batch, y_batch, iteration));
                }
            }
            finally
            {
                // Always mark the queue complete so the consumer loop below can finish
                BlockC.CompleteAdding();
            }
        });

        foreach (var item in BlockC.GetConsumingEnumerable())
        {
            sess.run(optimizer, (x, item.c_x), (y, item.c_y));

            if (item.iter % display_freq == 0)
            {
                // Calculate and display the batch loss and accuracy
                var result = sess.run(new[] { loss, accuracy }, new FeedItem(x, item.c_x), new FeedItem(y, item.c_y));
                loss_val = result[0];
                accuracy_val = result[1];
                print("CNN:" + ($"iter {item.iter.ToString("000")}: Loss={loss_val.ToString("0.0000")}, Training Accuracy={accuracy_val.ToString("P")} {sw.ElapsedMilliseconds}ms"));
                sw.Restart();
            }
        }
        producer.Wait(); // surface any error raised while loading batches

        // Run validation after every epoch
        (loss_val, accuracy_val) = sess.run((loss, accuracy), (x, x_valid), (y, y_valid));
        print("CNN:" + "---------------------------------------------------------");
        print("CNN:" + $"global steps: {sess.run(gloabl_steps)}, learning rate: {sess.run(learning_rate)}, validation loss: {loss_val.ToString("0.0000")}, validation accuracy: {accuracy_val.ToString("P")}");
        print("CNN:" + "---------------------------------------------------------");

        if (SaverBest)
        {
            // Save only when validation accuracy improves
            if (accuracy_val > max_accuracy)
            {
                max_accuracy = accuracy_val;
                saver.save(sess, path_model + "\\CNN_Best");
                print("CKPT model saved.");
            }
        }
        else
        {
            // Save a checkpoint after every epoch
            saver.save(sess, path_model + string.Format("\\CNN_Epoch_{0}_Loss_{1}_Acc_{2}", epoch, loss_val, accuracy_val));
            print("CKPT model saved.");
        }
    }

    Write_Dictionary(path_model + "\\dic.txt", Dict_Label);
}
#endregion
```
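The training loop overlaps disk I/O with training: a background task fills a bounded BlockingCollection while the main thread consumes it with GetConsumingEnumerable. The stand-alone sketch below reproduces that producer/consumer pattern with plain values standing in for image batches; `BatchPipeline`, `loadBatch` and `trainStep` are hypothetical names. It calls CompleteAdding in a `finally` block and then waits on the producer, so a failed read ends the consumer loop and its exception is rethrown instead of being lost.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class BatchPipeline
{
    // A background task produces batches; the calling thread consumes them in order.
    // Returns the iteration indices in the order they were consumed.
    public static List<int> Run<TBatch>(int numBatches, int capacity,
        Func<int, TBatch> loadBatch, Action<TBatch, int> trainStep)
    {
        // Bounded capacity: Add blocks once 'capacity' batches are waiting,
        // which caps the memory held by pre-loaded batches.
        var queue = new BlockingCollection<(TBatch batch, int iter)>(capacity);

        Task producer = Task.Run(() =>
        {
            try
            {
                for (int i = 0; i < numBatches; i++)
                    queue.Add((loadBatch(i), i));
            }
            finally
            {
                queue.CompleteAdding(); // lets the foreach below terminate
            }
        });

        var consumed = new List<int>();
        foreach (var item in queue.GetConsumingEnumerable())
        {
            trainStep(item.batch, item.iter);
            consumed.Add(item.iter);
        }

        producer.Wait(); // rethrows (wrapped in AggregateException) any producer error
        return consumed;
    }
}
```

The queue capacity plays the same role as `TrainQueueCapa`: a larger value lets the loader run further ahead at the cost of holding more batches in memory.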

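```csharp
// Writes the label dictionary (class index -> folder name) to path.
// The body is not included in this listing; see the complete code on GitHub.
private void Write_Dictionary(string path, Dictionary<Int64, string> dictionary)
{
    // ...
}
```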
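The learning-rate decay in Train multiplies learning_rate_base by learning_rate_decay at every epoch that is a non-zero multiple of learning_rate_step and clamps the result at learning_rate_min. The sketch below mirrors that loop so you can preview the schedule before training; `StepDecay.RateForEpoch` is a hypothetical name, and the numbers in the test are illustrative only because the article does not list its hyperparameter values. For 0 < decay < 1 and a base rate at or above the minimum, the result equals max(base · decay^⌊epoch / step⌋, min).

```csharp
using System;

public static class StepDecay
{
    // Rate used during 'epoch' (0-based): multiplied by 'decay' once for every
    // non-zero multiple of 'step' reached so far, clamped at 'min' after each
    // multiplication. step == 0 disables decay, as in the training loop.
    public static float RateForEpoch(int epoch, float baseRate, float decay, int step, float min)
    {
        float lr = baseRate;
        if (step == 0) return lr;
        for (int e = 1; e <= epoch; e++)
        {
            if (e % step == 0)
            {
                lr *= decay;
                if (lr < min) lr = min;
            }
        }
        return lr;
    }
}
```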

Predicting on the test set

```csharp
public void Test(Session sess)
{
    // Loss and accuracy over the whole test set
    (loss_test, accuracy_test) = sess.run((loss, accuracy), (x, x_test), (y, y_test));
    print("CNN:" + "---------------------------------------------------------");
    print("CNN:" + $"Test loss: {loss_test.ToString("0.0000")}, test accuracy: {accuracy_test.ToString("P")}");
    print("CNN:" + "---------------------------------------------------------");

    // Predicted class and softmax probabilities for every test image
    (Test_Cls, Test_Data) = sess.run((cls_prediction, prob), (x, x_test));
}
```
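The `Accuracy` scope in the graph compares `argmax(output_logits, 1)` with `argmax(y, 1)` and averages the matches, which is the test accuracy that Test prints. The same calculation on plain arrays is sketched below as a hypothetical `Metrics` helper for checking results outside the graph; this version returns the first maximal index on ties.

```csharp
using System;

public static class Metrics
{
    // Index of the largest value in the given row (first index wins on ties).
    public static int ArgMax(float[,] m, int row)
    {
        int best = 0;
        for (int c = 1; c < m.GetLength(1); c++)
            if (m[row, c] > m[row, best]) best = c;
        return best;
    }

    // Fraction of rows whose predicted class matches the class of the one-hot label.
    public static float Accuracy(float[,] logits, float[,] oneHotLabels)
    {
        int n = logits.GetLength(0);
        if (n == 0 || oneHotLabels.GetLength(0) != n)
            throw new ArgumentException("logits and labels must have the same, non-zero number of rows");
        int correct = 0;
        for (int i = 0; i < n; i++)
            if (ArgMax(logits, i) == ArgMax(oneHotLabels, i)) correct++;
        return (float)correct / n;
    }
}
```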

```csharp
// Print one line per test image: OK/NG, true and predicted class, Top-1 probability, file name
private void TestDataOutput()
{
    for (int i = 0; i < ArrayLabel_Test.Length; i++)
    {
        Int64 real = ArrayLabel_Test[i];
        int predict = (int)(Test_Cls[i]);
        var probability = Test_Data[i, predict];
        string result = (real == predict) ? "OK" : "NG";
        string fileName = ArrayFileName_Test[i];
        string real_str = Dict_Label[real];
        string predict_str = Dict_Label[predict];
        print((i + 1).ToString() + "|" + "result:" + result + "|" + "real_str:" + real_str + "|"
            + "predict_str:" + predict_str + "|" + "probability:" + probability.GetSingle().ToString() + "|"
            + "fileName:" + fileName);
    }
}
```
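For each test image, TestDataOutput takes the predicted class from `cls_prediction` (argmax of the logits) and reads its probability from `prob` (softmax of the logits) at that class index. The Top-1 calculation in plain C# is sketched below with a hypothetical `Top1` helper. Subtracting the maximum logit before `Math.Exp` is the usual guard against overflow and does not change the softmax result.

```csharp
using System;
using System.Linq;

public static class Top1
{
    // Softmax over one row of logits, computed stably by subtracting the max first.
    public static double[] Softmax(double[] logits)
    {
        double max = logits.Max();
        double[] exps = logits.Select(v => Math.Exp(v - max)).ToArray();
        double sum = exps.Sum();
        return exps.Select(e => e / sum).ToArray();
    }

    // Returns the predicted class index and its probability (the Top-1 pair).
    public static (int cls, double probability) Predict(double[] logits)
    {
        double[] p = Softmax(logits);
        int best = 0;
        for (int i = 1; i < p.Length; i++)
            if (p[i] > p[best]) best = i;
        return (best, p[best]);
    }
}
```

A low Top-1 probability on an "OK" line is often a useful hint about which character images sit close to a class boundary.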



Article link: http://soscw.com/index.php/essay/43906.html