Python basics: visiting a website and saving the scraped data as a CSV file

2021-01-20 05:12

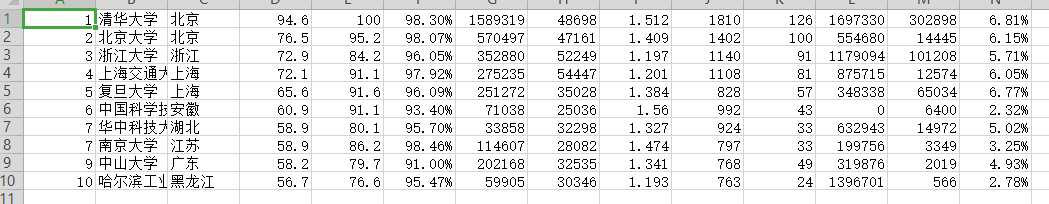

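Original article: https://www.cnblogs.com/slj-xt/p/12904617.html

1. Use the `get()` function of the requests library to visit the Bing homepage 20 times, print the returned status and the `text` content, and compute the length of the page content returned by the `text` and `content` attributes.

The code is as follows:

```python
import requests

def getHTMLText(url):
    """Fetch url once; return (status, text, content, len(text), len(content)), or "" on failure."""
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()  # raise HTTPError for 4xx/5xx status codes
        r.encoding = 'utf-8'  # decode r.text as UTF-8, whatever encoding the server declared
        return r.status_code, r.text, r.content, len(r.text), len(r.content)
    except requests.RequestException:
        return ""

url = "https://cn.bing.com/?toHttps=1&redig=731C98468AFA474D85AECB7DB98B95D9"
# The loop lives outside the function: a return inside the loop would stop after the first visit.
for i in range(20):  # visit the page 20 times
    result = getHTMLText(url)
    if not result:
        print(i + 1, "request failed")
        continue
    status, text, content, text_len, content_len = result
    print(i + 1, status, text_len, content_len)  # visit number, status, text length, content length
    print(text)
```

The output is too long to show in full; the original post included a screenshot of only part of it.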
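On the length comparison: `r.text` is the body decoded into a `str`, so `len(r.text)` counts characters, while `r.content` is the raw `bytes`, so `len(r.content)` counts bytes. In UTF-8 most Chinese characters take three bytes, so the two lengths usually differ on a Chinese page. The offline sketch below reproduces the effect on a made-up HTML snippet instead of a live response; the helper name `text_and_byte_lengths` is hypothetical.

```python
def text_and_byte_lengths(page_text, encoding="utf-8"):
    """Return (character count, byte count) of page_text in the given encoding."""
    page_bytes = page_text.encode(encoding)  # plays the role of r.content (raw bytes)
    return len(page_text), len(page_bytes)   # len(page_text) plays the role of len(r.text)

# A made-up snippet standing in for a real response body.
sample = "<title>必应</title>"
print(text_and_byte_lengths(sample))  # → (17, 21)
```

The two Chinese characters count once each in the `str` but contribute six bytes, which accounts for the gap of four.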

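2. Scrape the 2019 Best Chinese Universities Ranking (only the top ten schools are shown here) and save it as a CSV file.

The code is as follows. The first part fetches the page, collects the table rows, and prints the top entries:

```python
import csv
import os

import requests
from bs4 import BeautifulSoup

allUniv = []  # one list of cell strings per table row

def getHTMLText(url):
    try:
        r = requests.get(url, timeout=30)
        r.raise_for_status()  # raise HTTPError for 4xx/5xx status codes
        r.encoding = 'utf-8'
        return r.text
    except requests.RequestException:
        return ""

def fillUnivList(soup):
    data = soup.find_all('tr')
    for tr in data:
        ltd = tr.find_all('td')
        if len(ltd) == 0:  # skip rows without <td> cells, e.g. the header row
            continue
        # get_text(strip=True) always returns a string; td.string is None
        # whenever a cell has more than one child node
        singleUniv = [td.get_text(strip=True) for td in ltd]
        allUniv.append(singleUniv)

def printUnivList(num):
    print("{:^4}{:^10}{:^5}{:^8}{:^10}".format("排名", "学校名称", "省市", "总分", "培养规模"))
    for u in allUniv[:num]:  # slicing avoids an IndexError if fewer rows were parsed
        print("{:^4}{:^10}{:^5}{:^8}{:^10}".format(u[0], u[1], u[2], u[3], u[6]))
```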
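In `printUnivList`, `{:^10}` pads with ordinary half-width spaces, but a terminal usually draws each Chinese character about two columns wide, so the school-name column tends to drift out of line. A commonly used workaround, sketched here as an alternative rather than the author's code, is to fill Chinese columns with the full-width ideographic space `chr(12288)` (U+3000). The helper `format_row` and the sample values are hypothetical placeholders, not real ranking data.

```python
# Full-width ideographic space U+3000, about as wide as one Chinese character.
FULL_WIDTH_SPACE = chr(12288)

def format_row(rank, name, province, score):
    # {1:{4}^10} centers field 1 in a width of 10 and takes the fill
    # character from field 4 (the full-width space) instead of " ".
    template = "{0:^4}{1:{4}^10}{2:{4}^5}{3:^8}"
    return template.format(rank, name, province, score, FULL_WIDTH_SPACE)

# Placeholder rows, not real ranking data.
print(format_row("1", "示例大学", "北京", "0.0"))
print(format_row("2", "样例理工大学", "上海", "0.0"))
```

Only the Chinese columns use the full-width fill; the numeric columns keep normal spaces because digits are half-width.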

The second part writes the rows to a CSV file and runs everything from `main()`:

```python
def writercsv(save_road, num, title):
    # Write the header only when the file does not exist yet; otherwise just append rows.
    is_new = not os.path.isfile(save_road)
    # utf-8-sig writes a byte-order mark so Excel recognizes the Chinese text as UTF-8
    with open(save_road, 'a', newline='', encoding='utf-8-sig') as f:
        csv_write = csv.writer(f, dialect='excel')
        if is_new:
            csv_write.writerow(title)
        for u in allUniv[:num]:
            csv_write.writerow(u)

title = ["排名", "学校名称", "省市", "总分", "生源质量", "培养结果", "科研规模",
         "科研质量", "顶尖成果", "顶尖人才", "科技服务", "产学研究合作", "成果转化"]
save_road = r"F:\python\csvData.csv"  # raw string, so the backslashes are kept literally

def main():
    url = 'http://www.zuihaodaxue.cn/zuihaodaxuepaiming2019.html'
    html = getHTMLText(url)
    soup = BeautifulSoup(html, "html.parser")
    fillUnivList(soup)
    printUnivList(10)
    writercsv(save_road, 10, title)

if __name__ == "__main__":
    main()
```

The original post then showed screenshots of the program's printed output and of the opened CSV file.
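The `writercsv` function writes the header only when the CSV file does not exist yet and appends rows otherwise. The sketch below isolates that pattern so it can be tried without network access, using a temporary file and placeholder rows. The helper name `append_rows` is hypothetical, and the `utf-8-sig` encoding (UTF-8 with a byte-order mark, which helps Excel detect Chinese text) is an assumption rather than something the original post specifies.

```python
import csv
import os
import tempfile

def append_rows(path, header, rows):
    """Append rows to a CSV file, writing the header only if the file is new."""
    is_new = not os.path.isfile(path)
    # When appending to a non-empty file, Python's text layer does not
    # emit a second byte-order mark for utf-8-sig.
    with open(path, "a", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f, dialect="excel")
        if is_new:
            writer.writerow(header)
        writer.writerows(rows)

# Usage with a temporary file and placeholder rows (not real ranking data).
demo_path = os.path.join(tempfile.mkdtemp(), "demo.csv")
append_rows(demo_path, ["排名", "学校名称"], [["1", "示例大学"]])
append_rows(demo_path, ["排名", "学校名称"], [["2", "示例学院"]])

with open(demo_path, newline="", encoding="utf-8-sig") as f:
    print(list(csv.reader(f)))
```

Opening in append mode creates the file when it is missing, so a single `open` call covers both of the original's branches.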


Article link: http://soscw.com/index.php/essay/44385.html