Python第一条网络爬虫,爬取一个网页的内容

2021-01-23 04:13

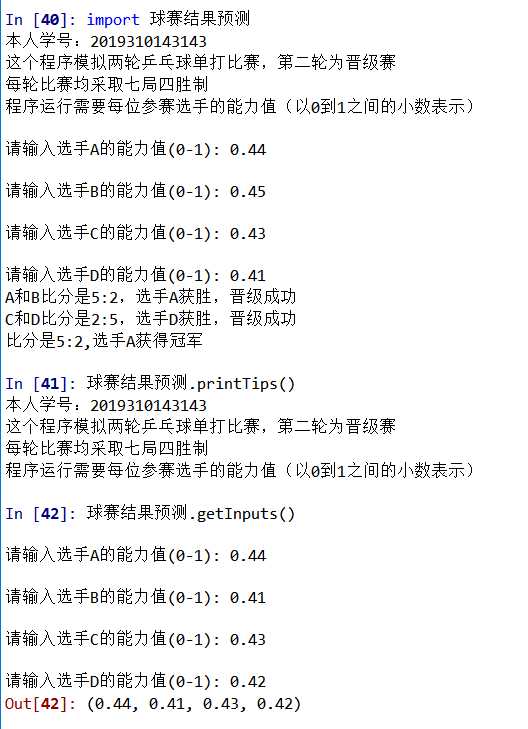

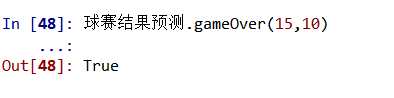

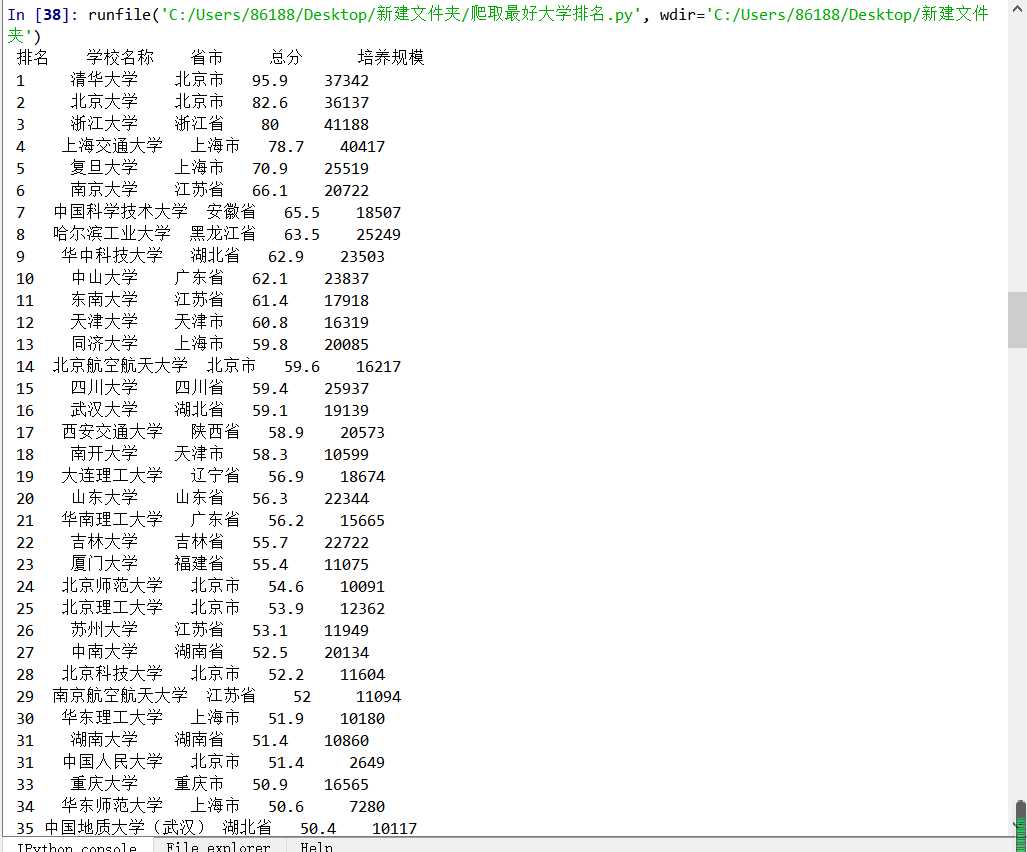

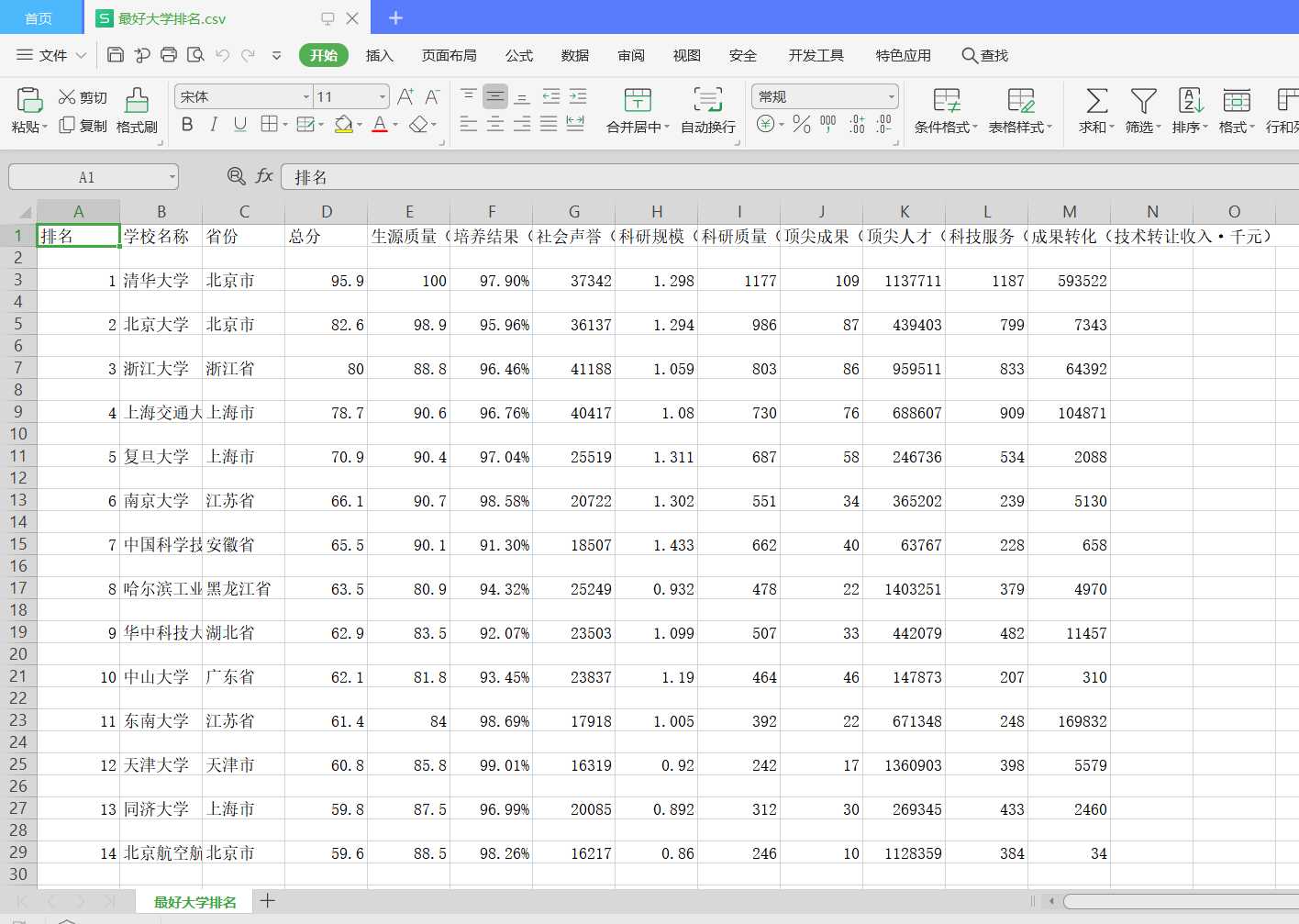

标签:python 服务 string idt pen 文件 end continue turn 三、HTML按要求打印网页。 四、爬取2016大学排名并存为CSV文件 Python第一条网络爬虫,爬取一个网页的内容 标签:python 服务 string idt pen 文件 end continue turn 原文地址:https://www.cnblogs.com/LSH1628340121/p/12885285.html一、球赛结果预测代码部分函数测试。

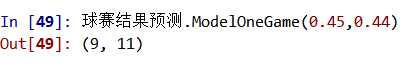

二、用requests库函数访问搜狗网页20次。 1 import requests

2 from bs4 import BeautifulSoup

3 def getHTMLText(self):

4 try:

5 r=requests.get(url,timeout=30)#如果状态不是200,引发异常

6 r.raise_for_status() #无论原来用什么编码,都改成utf-8

7 r.encoding =‘utf-8‘

8 soup=BeautifulSoup(r.text)

9 return r.text,r.status_code,len(r.text),r.encoding,len(soup.text)

10 except:

11 return ""

12 url="https://www.sogou.com"

13 print(getHTMLText(url))

14 for i in range(20):

15 print("第{}次访问".format(i+1))

16 print(getHTMLText(url))

1 DOCTYPE html>

2 html>

3 head>

4 meta charset="utf-8">

5 font size="10" color="purple">

6 title>我的第一个网页title>

7 xuehao>我的学号:2019310143143xuehao>

8 font>

9 head>

10 body>

11 p style="background-color:rgb(255,0,255)">

12 p>

13 font size="5" color="blue">

14 h1>欢迎来到你的三连我的心h1>

15 h2>好懒人就是我,我就是好懒人h2>

16 h3>此人很懒,无精彩内容可示h3>

17 h4>仅有鄙人陋见或许对你有用h4>

18 a href="https://home.cnblogs.com/u/LSH1628340121/">QAQ打开看看嘛QAQa>

19 p id="first">我的观众老爷们,给个三连。p>

20 font>

21 img src="F:\荷花.jpg" alt="荷花" width="900" height="800">

22 body>

23

24 table border="1">

25 tr>

26

27 td>点赞, 收藏,关注td>

28 tr>

29 tr>

30 td>投币1, 投币2td>

31 tr>

32 table>

33 html>

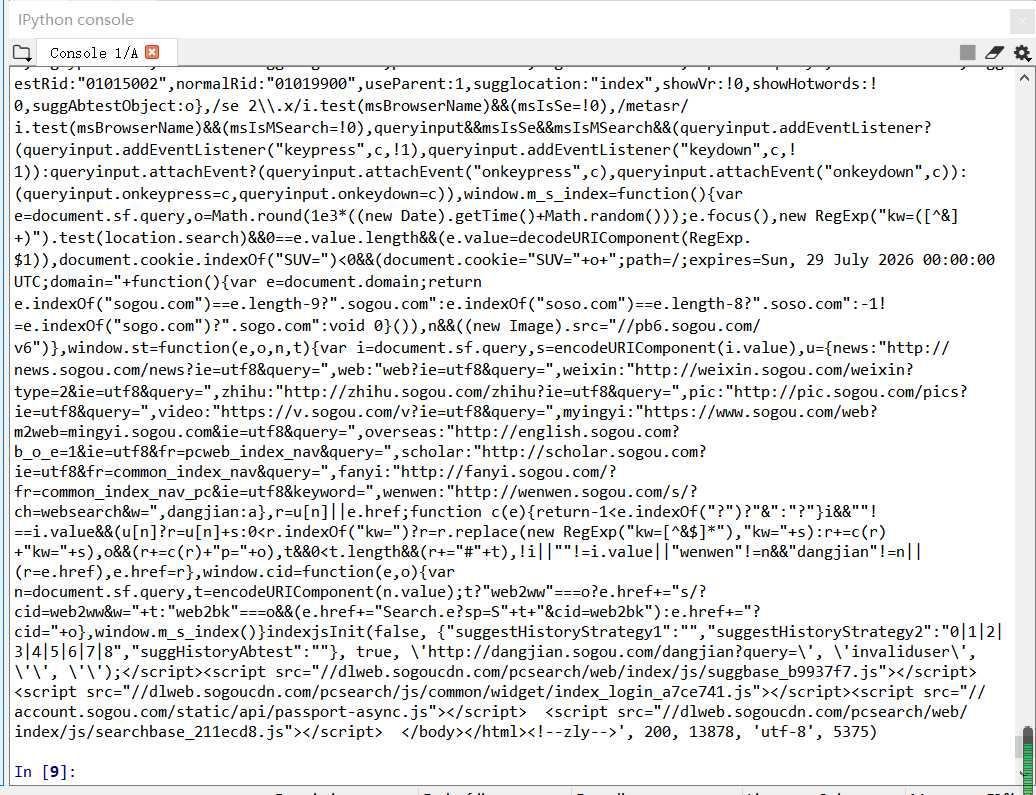

1 import requests

2 from bs4 import BeautifulSoup

3 import csv

4 allUniv=[]

5 def getHTMLText (url):

6 try:

7 r = requests.get(url,timeout=30)

8 r.raise_for_status()

9 r.encoding = ‘utf-8‘

10 return r.text

11 except:

12 return ""

13 def fillUnivList (soup):

14 data = soup.find_all(‘tr‘)

15 for tr in data:

16 ltd = tr.find_all(‘td‘)

17 if len(ltd)==0:

18 continue

19 singleUniv=[]

20 for td in ltd:

21 singleUniv.append(td.string)

22 allUniv.append(singleUniv)

23 write_csv(allUniv)

24 def printUnivList (num):

25 print("{:^4}{:^10}{:^5}{:^8}{:^10}". format("排名",26 "学校名称", "省市","总分","培养规模"))

27 for i in range (num):

28 u=allUniv[i]

29 print("{:^4}{:^10}{:^5}{:^8}{:^10}".format(u[0],30 u[1],u[2],u[3],u[6]))

31 return u

32 def write_csv(list):

33 name = [‘排名‘, ‘学校名称‘, ‘省份‘, ‘总分‘, ‘生源质量(新生高考成绩得分)‘, ‘培养结果(毕业生就业率)‘, ‘社会声誉(社会捐赠收入·千元)‘, ‘科研规模(论文数量·篇)‘,34 ‘科研质量(论文质量·FWCI)‘, ‘顶尖成果(高被引论文·篇)‘, ‘顶尖人才(高被引学者·人)‘, ‘科技服务(企业科研经费·千元)‘, ‘成果转化(技术转让收入·千元)‘]

35 with open(‘C:/Users/86188/Desktop/新建文件夹/最好大学排名.csv‘, ‘w‘) as f:

36 writer = csv.writer(f)

37 writer.writerow(name)

38 for row in list:

39 writer.writerow(row)

40 def main (num) :

41 url = ‘http://www.zuihaodaxue.cn/zuihaodaxuepaiming2016.html‘

42 html = getHTMLText (url)

43 soup = BeautifulSoup(html, "html.parser")

44 fillUnivList(soup)

45 printUnivList(num)

46 print("排名情况如上所示")

47 main(310)

上一篇:快速排序

文章标题:Python第一条网络爬虫,爬取一个网页的内容

文章链接:http://soscw.com/index.php/essay/45735.html