Scraping Jiangsu Province Budget Disclosures: File Download [JS Page Crawler]

2021-02-04 05:15

Tags: python, budget, Jiangsu Province, crawler

Fetching the JSON pages. I put this together for someone else and used it as a chance to learn how to scrape JS-rendered pages.

Original post: https://www.cnblogs.com/douzujun/p/13149482.html

import re, requests, json, os, time

from io import BytesIO

headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36"
}

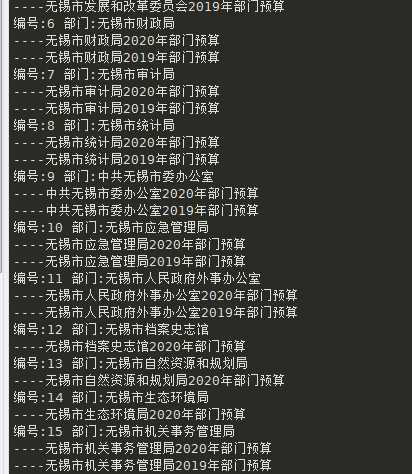

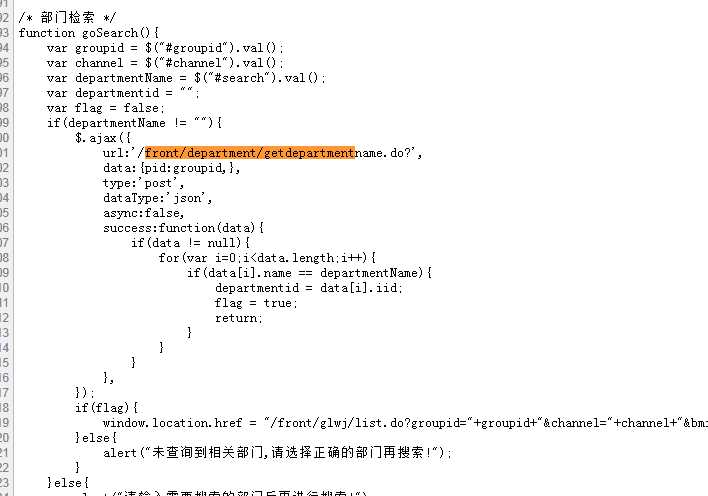

# Get all departments
def getDepartment(pid, startNum):
    url = "http://yjsgk.jsczt.cn/front/department/getdepartmentname.do?pid=" + str(pid)
    res = requests.get(url, headers=headers)
    res = res.content
    json_res = json.loads(res)
    for i in range(len(json_res)):

        if i < [...]

[...] ", "\n")

        file_content = file_content.replace("&nbsp;", " ")
        file_content = re.sub("<[^>]+>", "", file_content)  # strip any remaining HTML tags
        filepath = path + file_name + ".txt"
        with open(filepath, "w", encoding="utf-8") as f:
            f.write(file_content)

    else:
        # Attachments are present
        json_res = json.loads(res)
        for j_data in json_res:
            file_iid = j_data["iid"]
            file_name = j_data["file_oldname"]
            filepath = path + file_name
            # e.g. http://yjsgk.jsczt.cn/front/bmcontent/download.do?iid=4228
            file_url = "http://yjsgk.jsczt.cn/front/bmcontent/download.do?iid=" + str(file_iid)
            download(file_url, filepath)
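
The text branch above cleans the returned HTML with string replacements and a regex before saving it. As a minimal sketch of the same idea using only the standard library, the helper below turns an HTML fragment into plain text. The name `html_to_text` is hypothetical, and the choice of `<br>` and `</p>` as line-break markers is an assumption, since the tag the original replaced with `"\n"` is not visible in this copy.

```python
import html
import re


def html_to_text(raw: str) -> str:
    """Convert a small HTML fragment to plain text (hypothetical helper)."""
    # Assumption: <br> and </p> mark line breaks in the fragment.
    text = re.sub(r"<br\s*/?>|</p\s*>", "\n", raw, flags=re.IGNORECASE)
    # Drop every remaining tag, whatever its length.
    text = re.sub(r"<[^>]+>", "", text)
    # Decode entities such as &nbsp; and turn non-breaking spaces into spaces.
    text = html.unescape(text).replace("\xa0", " ")
    # Trim each line and discard the empty ones.
    lines = [line.strip() for line in text.splitlines()]
    return "\n".join(line for line in lines if line)
```

Decoding entities with `html.unescape` covers more than `&nbsp;`, which may or may not matter for the pages on this site.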

# Download
def download(url, filepath):
    res = requests.get(url, headers=headers)
    data = res.content
    with open(filepath, "wb") as f:
        f.write(data)
    time.sleep(1)  # throttle requests to avoid getting blocked
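
The attachment names come straight from the server (`file_oldname`) and are concatenated into a path, so a name containing `/` or `:` could break the write. The following is a rough sketch of a more defensive variant, not the author's method: `safe_filename` and `download_to` are hypothetical names, the character set treated as illegal is an assumption based on Windows file-name rules, and the timeout and chunk size are arbitrary.

```python
import os
import re

# Assumption: characters rejected by Windows file systems, plus control whitespace.
_ILLEGAL = re.compile(r'[\\/:*?"<>|\r\n\t]')


def safe_filename(name: str, fallback: str = "unnamed") -> str:
    """Replace characters that are unsafe in file names (hypothetical helper)."""
    cleaned = _ILLEGAL.sub("_", name).strip(" .")
    return cleaned or fallback


def download_to(url, directory, name, headers=None, timeout=30):
    """Stream url into directory/name and return the path that was written."""
    import requests  # third-party; the same library the script already uses

    os.makedirs(directory, exist_ok=True)
    filepath = os.path.join(directory, safe_filename(name))
    with requests.get(url, headers=headers, timeout=timeout, stream=True) as res:
        res.raise_for_status()  # fail loudly instead of saving an error page
        with open(filepath, "wb") as f:
            for chunk in res.iter_content(chunk_size=65536):
                f.write(chunk)
    return filepath
```

Streaming keeps memory use flat for large attachments, and `raise_for_status()` avoids silently writing an HTML error page under the attachment's name.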

# Create the directory
def createDir(path):
    if not os.path.exists(path):
        os.makedirs(path)

pid = 122

# Index to start from; 0 means download from the beginning
startNum = 0

getDepartment(pid, startNum)
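
`startNum` is described as the index to start from, with 0 meaning a full run. The loop body that uses it did not survive in this copy of the post, so what follows is only a sketch of one common way to skip entries that were already processed when resuming an interrupted crawl. `resume_from` is a hypothetical helper, not the author's code.

```python
def resume_from(items, start_num=0):
    """Yield (index, item) pairs, skipping every index below start_num."""
    for i, item in enumerate(items):
        if i < start_num:
            continue  # already handled in an earlier run
        yield i, item


if __name__ == "__main__":
    # Placeholder names; real entries would come from the department JSON list.
    departments = ["dept-a", "dept-b", "dept-c"]
    for i, dept in resume_from(departments, start_num=1):
        print(i, dept)
```

Printing the index next to each item makes it easy to see which value to pass as `start_num` if the run is interrupted.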


Article link: http://soscw.com/index.php/essay/50748.html