Building Harbor in Kubernetes and storing image files in MinIO object storage

2021-02-06 10:15

Preface: This document covers running Harbor in an on-premises environment with MinIO as its image storage. If your reaction is "isn't OSS good enough?", or you run a single-host Harbor, you can skip it; it won't be of much use to you. If you run a single-host Harbor connected to a MinIO cluster, see my other blog post.

Original post: https://www.cnblogs.com/guoyishen/p/13113501.html

Contents: 1. nfs-client-provisioner; 2. Install Helm 3; 3. Install nginx-ingress-controller; 4. Install MinIO; 5. Install Harbor in k8s; 6. Set up nginx layer-4 forwarding; 7. Push a test image.

Environment:

Application versions:

helm v3.2.3

kubernetes 1.14.3

nginx-ingress 1.39.1

harbor 2.0

nginx 1.15.3

MinIO RELEASE.2020-05-08T02-40-49Z

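### I won't cover how to build the Kubernetes cluster here. For simplicity, our Kubernetes shared storage uses NFS, so first I'll show how to use NFS as persistent storage for k8s.

## 1. nfs-client-provisioner

### Pay attention to which host runs each command: the nfs-server commands run on the 10.0.0.94 server, and most of the rest run on master1.

### 1. Install the NFS service on nfs-server

yum -y install nfs-utils rpcbind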

mkdir -p /nfs/data

chmod 777 /nfs/data

echo '/nfs/data *(rw,no_root_squash,sync)' > /etc/exports

exportfs -r

systemctl restart rpcbind && systemctl enable rpcbind

systemctl restart nfs && systemctl enable nfs

rpcinfo -p localhost

showmount -e 10.0.0.94
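To confirm the export before moving on, a small helper can check the `showmount -e` output for the expected path. This is a minimal sketch: the function name `nfs_path_exported` is hypothetical, and it assumes showmount's usual format of a header line followed by one `<path> <clients>` line per export.

```shell
# Hypothetical helper: read `showmount -e` output on stdin and succeed only
# if the path given as $1 is listed as an export (header line is skipped).
nfs_path_exported() {
    awk -v p="$1" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}
```

Usage on master1: `showmount -e 10.0.0.94 | nfs_path_exported /nfs/data && echo "export OK"`.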

### 2. Install nfs-client on the other servers

yum install -y nfs-utils

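### 3. Install nfs-client-provisioner on k8s-master1 for dynamic persistent storage. nfs-client-provisioner is a simple external NFS provisioner for Kubernetes; it does not provide NFS itself and relies on an existing NFS server for the storage.

cd /usr/local/src && mkdir nfs-client-provisioner && cd nfs-client-provisioner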

### Note: in deployment.yaml, the IP must be the nfs-server's address and the PATH must be the path exported in the nfs-server's /etc/exports

cat > deployment.yaml

cat > rbac.yaml

cat > StorageClass.yaml

kubectl apply -f deployment.yaml

kubectl apply -f rbac.yaml

kubectl apply -f StorageClass.yaml
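The StorageClass matters later, because values.yaml refers to it by name (`managed-nfs-storage`). As a hedged sketch, here is a hypothetical helper that prints a minimal StorageClass manifest. The provisioner string is an assumption: it must equal the `PROVISIONER_NAME` environment variable set in your deployment.yaml.

```shell
# Hypothetical helper: print a minimal StorageClass manifest.
# $1 = StorageClass name, $2 = provisioner name (assumed; must match
# PROVISIONER_NAME in deployment.yaml).
render_storageclass() {
    cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: $1
provisioner: $2
EOF
}
```

Usage: `render_storageclass managed-nfs-storage <your-provisioner-name> | kubectl apply -f -`. If your deployment.yaml was based on the upstream nfs-client example, the provisioner name may be `fuseim.pri/ifs`, but check your own file.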

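### Wait a moment, then check that nfs-client-provisioner is healthy. Output like the following means it is working; if it isn't, go back over the steps above for problems.

kubectl get pods -n kube-system | grep nfs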

nfs-client-provisioner-7778496f89-kthnj 1/1 Running 0 169m
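Instead of eyeballing the output, the "wait a moment" step can be scripted. A minimal sketch, assuming the default `kubectl get pods` columns (NAME READY STATUS RESTARTS AGE); `pods_ready` is a hypothetical name.

```shell
# Hypothetical helper: read `kubectl get pods` output on stdin and succeed only
# if every pod whose name starts with $1 is Running with all containers ready.
# Fails when no pod matches at all.
pods_ready() {
    awk -v prefix="$1" '
        index($1, prefix) == 1 {
            seen = 1
            split($2, r, "/")
            if ($3 != "Running" || r[1] != r[2]) bad = 1
        }
        END { exit (!seen || bad) }'
}
```

Usage: `until kubectl get pods -n kube-system | pods_ready nfs-client-provisioner; do sleep 5; done` blocks until the provisioner pod is up.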

## 2. Install Helm 3

cd /usr/local/src && wget https://get.helm.sh/helm-v3.2.3-linux-amd64.tar.gz && \
tar xf helm-v3.2.3-linux-amd64.tar.gz && cp linux-amd64/helm /usr/bin/ && helm version
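The later `helm install harbor harbor-helm/` uses Helm 3 syntax (release name as a positional argument), so it is worth making sure the `helm` on the PATH really is v3. A minimal sketch; `is_helm3` is a hypothetical name and it assumes the `vX.Y.Z` string that `helm version --short` prints.

```shell
# Hypothetical helper: succeed if the version text on stdin reports a Helm 3
# release, e.g. "v3.2.3+g8f83204" from `helm version --short`.
is_helm3() {
    grep -Eq '(^|[^0-9A-Za-z])v3\.[0-9]+'
}
```

Usage: `helm version --short | is_helm3 || echo "helm on PATH is not v3"`.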

## 3. Install nginx-ingress-controller

helm repo add stable http://mirror.azure.cn/kubernetes/charts

helm pull stable/nginx-ingress && \
docker pull fungitive/defaultbackend-amd64 && \
docker tag fungitive/defaultbackend-amd64 k8s.gcr.io/defaultbackend-amd64:1.5 && \
helm template guoys nginx-ingress-*.tgz | kubectl apply -f -

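## 4. Install MinIO

### 1. Install MinIO Server on the 4 prepared servers. The official recommendation is at least 4 servers, each with a dedicated disk for MinIO data.

cd /usr/local/src && wget https://dl.min.io/server/minio/release/linux-amd64/minio && \
chmod +x minio && cp minio /usr/bin

### 'EOF' is quoted so the shell writes $ENDPOINTS literally and systemd expands it from minio.conf

cat > /etc/systemd/system/minio.service << 'EOF'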

[Unit]

Description=Minio

Documentation=https://docs.minio.io

Wants=network-online.target

After=network-online.target

AssertFileIsExecutable=/usr/bin/minio

[Service]

EnvironmentFile=-/etc/minio/minio.conf

ExecStart=/usr/bin/minio server $ENDPOINTS

# Let systemd restart this service always

Restart=always

# Specifies the maximum file descriptor number that can be opened by this process

LimitNOFILE=65536

# Disable timeout logic and wait until process is stopped

TimeoutStopSec=infinity

SendSIGKILL=no

[Install]

WantedBy=multi-user.target

EOF

mkdir -p /etc/minio

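### The IP addresses below must match your own machines (or use domain names); the path suffix is the MinIO storage path

cat > /etc/minio/minio.conf << EOF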

MINIO_ACCESS_KEY=guoxy

MINIO_SECRET_KEY=guoxy321export

ENDPOINTS="http://10.0.0.91/minio http://10.0.0.92/minio http://10.0.0.93/minio http://10.0.0.94/minio"

EOF

systemctl daemon-reload && systemctl start minio && systemctl enable minio
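The same ENDPOINTS value goes into minio.conf on all four servers, so generating it from the node list avoids typos. A minimal sketch; `minio_endpoints` is a hypothetical name, and the warning simply mirrors the "at least 4 servers" recommendation above.

```shell
# Hypothetical helper: build the ENDPOINTS value for minio.conf.
# $1 = data path on every node (e.g. /minio); remaining args = node addresses.
# Prints a warning on stderr when fewer than 4 nodes are given.
minio_endpoints() (
    path=$1
    shift
    if [ "$#" -lt 4 ]; then
        echo "warning: only $# node(s); at least 4 are recommended" >&2
    fi
    out=""
    for host in "$@"; do
        out="$out${out:+ }http://$host$path"
    done
    printf '%s\n' "$out"
)
```

Usage: `echo "ENDPOINTS=\"$(minio_endpoints /minio 10.0.0.91 10.0.0.92 10.0.0.93 10.0.0.94)\""` prints the line used in minio.conf.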

### 2. Install the mc command on k8s-master1 and create the harbor bucket

### mc config host add takes a single server URL; any node of the distributed cluster will do

cd /usr/local/src && wget https://dl.min.io/client/mc/release/linux-amd64/mc && \
chmod +x mc && cp mc /usr/bin/ && \
mc config host add minio http://10.0.0.91:9000 guoxy guoxy321export && \
mc mb minio/harbor
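The bucket name given to `mc mb` must also be the `bucket:` value in values.yaml, and it has to follow S3 naming rules. A hedged sketch that checks only the basic rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit); `valid_bucket_name` is a hypothetical name and other rules, such as rejecting IP-address-like names, are not checked.

```shell
# Hypothetical helper: succeed if $1 passes the basic S3 bucket-naming rules.
# Not a complete validator (e.g. IP-address-like names are not rejected).
valid_bucket_name() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$'
}
```

Usage: `valid_bucket_name harbor && mc mb minio/harbor`.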

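## 5. Install Harbor in k8s

### 1. First create a secret in k8s holding the TLS certificate Harbor will use. If you don't have a certificate, you can request one from Let's Encrypt.

kubectl create secret tls guofire.xyz --key privkey.pem --cert fullchain.pem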
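The one secret serves both the core host (harbor.guofire.xyz) and the notary host (notary.guofire.xyz), so the certificate must cover both, for example with a wildcard. A minimal sketch of the matching rule, where `*` stands for exactly one leftmost label; `cert_covers_host` is a hypothetical name and it compares names case-sensitively.

```shell
# Hypothetical helper: succeed if certificate name $1 (e.g. "*.guofire.xyz"
# or an exact host name) covers host $2. A wildcard replaces exactly one label.
cert_covers_host() (
    pattern=$1
    host=$2
    case $pattern in
        '*.'*)
            suffix=${pattern#?}                # drop the leading "*", keep ".domain"
            label=${host%"$suffix"}            # what remains before the suffix
            [ "$label" != "$host" ] || exit 1  # host must end with the suffix
            [ -n "$label" ] || exit 1          # and have a label in front of it
            case $label in
                *.*) exit 1 ;;                 # only one label may replace "*"
            esac
            exit 0
            ;;
        *)
            [ "$pattern" = "$host" ]
            ;;
    esac
)
```

The names in your certificate appear under "Subject Alternative Name" in `openssl x509 -in fullchain.pem -noout -text`; then, for example, `cert_covers_host '*.guofire.xyz' notary.guofire.xyz`.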

### 2. Clone harbor-helm

cd /usr/local/src && git clone -b 1.4.0 https://github.com/goharbor/harbor-helm

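### 3. Edit harbor-helm/values.yaml. The file is long, so only the settings that need to change are shown

vim harbor-helm/values.yaml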

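### secretName is the name of the secret created above; core is the domain used to access Harbor
expose:
  tls:
    secretName: "guofire.xyz"
  ingress:
    hosts:
      core: harbor.guofire.xyz
      notary: notary.guofire.xyz
externalURL: https://harbor.guofire.xyz
### NFS persistent storage below
persistence:
  persistentVolumeClaim:
    registry:
      storageClass: "managed-nfs-storage"
      subPath: "registry"
    chartmuseum:
      storageClass: "managed-nfs-storage"
      subPath: "chartmuseum"
    jobservice:
      storageClass: "managed-nfs-storage"
      subPath: "jobservice"
    database:
      storageClass: "managed-nfs-storage"
      subPath: "database"
    redis:
      storageClass: "managed-nfs-storage"
      subPath: "redis"
    trivy:
      storageClass: "managed-nfs-storage"
      subPath: "trivy"
  ### Everything from here down is the most important part. regionendpoint can be the address and port of an nginx proxy in front of MinIO; here I only used one of the MinIO servers
  ### accesskey/secretkey must be the MinIO credentials from /etc/minio/minio.conf
  imageChartStorage:
    disableredirect: true
    type: s3
    filesystem:
      rootdirectory: /storage
      #maxthreads: 100
    s3:
      region: us-west-1
      bucket: harbor
      accesskey: guoxy
      secretkey: guoxy321export
      regionendpoint: http://10.0.0.92:9000
      encrypt: false
      secure: false
      v4auth: true
      chunksize: "5242880"
      rootdirectory: /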

redirect:

disabled: false

maintenance:

uploadpurging:

enabled: false

delete:

enabled: true
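The s3 `accesskey`/`secretkey` in values.yaml have to be the same pair MinIO runs with (`MINIO_ACCESS_KEY`/`MINIO_SECRET_KEY` in /etc/minio/minio.conf), otherwise the registry cannot authenticate to the bucket. A deliberately crude sketch that compares them; the function names are hypothetical, the YAML lookup ignores nesting and takes the first `accesskey:`/`secretkey:` line, and quoted YAML values would not compare equal.

```shell
# Hypothetical helpers: crude KEY=VALUE and "key: value" lookups (first match).
conf_value() {   # $1 = file, $2 = key
    sed -n "s/^$2=//p" "$1" | head -n 1
}
yaml_value() {   # $1 = file, $2 = key (nesting is ignored)
    sed -n "s/^[[:space:]]*$2:[[:space:]]*//p" "$1" | head -n 1
}
# Succeed only if both keys in values.yaml match minio.conf.
check_minio_creds() {   # $1 = minio.conf, $2 = values.yaml
    [ "$(conf_value "$1" MINIO_ACCESS_KEY)" = "$(yaml_value "$2" accesskey)" ] &&
    [ "$(conf_value "$1" MINIO_SECRET_KEY)" = "$(yaml_value "$2" secretkey)" ]
}
```

Usage on a MinIO node (with values.yaml copied over): `check_minio_creds /etc/minio/minio.conf values.yaml || echo "credentials differ"`.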

### 4. Install Harbor in k8s with Helm

helm install harbor harbor-helm/

### 5. Finally, wait 3 to 5 minutes and check whether the Harbor components are healthy

kubectl get deployments

### Output similar to the following basically means Harbor has started correctly

NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
harbor-harbor-chartmuseum          1/1     1            1           13h
harbor-harbor-clair                1/1     1            1           13h
harbor-harbor-core                 1/1     1            1           13h
harbor-harbor-jobservice           1/1     1            1           13h
harbor-harbor-notary-server        1/1     1            1           13h
harbor-harbor-notary-signer        1/1     1            1           13h
harbor-harbor-portal               1/1     1            1           13h
harbor-harbor-registry             1/1     1            1           13h
zy-nginx-ingress-controller        1/1     1            1           32h
zy-nginx-ingress-default-backend   1/1     1            1           32h
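With ten or so deployments it is easy to miss one that is stuck at `0/1`. A minimal sketch that lists the unready ones, assuming the default columns shown above; `unready_deployments` is a hypothetical name.

```shell
# Hypothetical helper: read `kubectl get deployments` output on stdin, print the
# names whose READY column is not "n/n", and exit non-zero if any were printed.
unready_deployments() {
    awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) { print $1; bad = 1 } }
         END { exit bad }'
}
```

Usage: `kubectl get deployments | unready_deployments && echo "all deployments ready"`.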

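## 6. Set up nginx layer-4 forwarding; otherwise Harbor cannot be reached through nginx-ingress

### 1. nginx-ingress uses a LoadBalancer Service by default, which does not work in an on-premises environment, so change it to NodePort

kubectl edit svc guoys-nginx-ingress-controller

### Change the value of .spec.type to NodePort and save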

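### 2. Check the NodePorts of nginx-ingress-controller and note the ones mapped to ports 80 and 443

kubectl get svc | grep 'ingress-controller'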

guoys-nginx-ingress-controller NodePort 10.200.248.214
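The PORT(S) column lists each mapping as `port:nodePort/protocol`, comma-separated. A minimal sketch that pulls out the NodePort for a given service port; `nodeport_for` is a hypothetical name.

```shell
# Hypothetical helper: $1 = PORT(S) value such as "80:32492/TCP,443:30071/TCP",
# $2 = service port. Prints the matching NodePort (nothing if there is none).
nodeport_for() {
    printf '%s\n' "$1" | tr ',' '\n' | awk -F'[:/]' -v p="$2" '$1 == p { print $2; exit }'
}
```

Usage: `nodeport_for "$(kubectl get svc guoys-nginx-ingress-controller --no-headers | awk '{print $5}')" 443`, where `$5` is the PORT(S) column of the default `kubectl get svc` output.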

### 3. Install the nginx layer-4 proxy

yum install -y gcc make

mkdir /apps

cd /usr/local/src/

wget http://nginx.org/download/nginx-1.15.3.tar.gz

tar xf nginx-1.15.3.tar.gz

cd nginx-1.15.3

./configure --with-stream --without-http --prefix=/apps/nginx --without-http_uwsgi_module --without-http_scgi_module --without-http_fastcgi_module

make && make install

### The ports in the upstream blocks below must match the NodePorts from step 2. 'EOF' is quoted so the shell does not expand the nginx $variables

cat > /apps/nginx/conf/nginx.conf << 'EOF'
worker_processes 1;

events {
    worker_connections 1024;
}

stream {
    log_format tcp '$remote_addr [$time_local] '
                   '$protocol $status $bytes_sent $bytes_received '
                   '$session_time "$upstream_addr" '
                   '"$upstream_bytes_sent" "$upstream_bytes_received" "$upstream_connect_time"';

    upstream https_default_backend {
        server 10.0.0.91:30071;
        server 10.0.0.92:30071;
        server 10.0.0.93:30071;
    }

    upstream http_backend {
        server 10.0.0.91:32492;
        server 10.0.0.92:32492;
        server 10.0.0.93:32492;
    }

    server {
        listen 443;
        proxy_pass https_default_backend;
        access_log logs/access.log tcp;
        error_log logs/error.log;
    }

    server {
        listen 80;
        proxy_pass http_backend;
    }
}
EOF
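Because each upstream just repeats one NodePort across the k8s nodes, the blocks can be generated instead of hand-edited whenever the NodePorts change. A minimal sketch; `render_upstream` is a hypothetical name.

```shell
# Hypothetical helper: print an nginx stream upstream block.
# $1 = upstream name, $2 = NodePort, remaining args = node IPs.
render_upstream() (
    name=$1
    port=$2
    shift 2
    printf 'upstream %s {\n' "$name"
    for ip in "$@"; do
        printf '    server %s:%s;\n' "$ip" "$port"
    done
    printf '}\n'
)
```

Usage: `render_upstream https_default_backend 30071 10.0.0.91 10.0.0.92 10.0.0.93` prints the HTTPS upstream used in the config above.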

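### Test the configuration and start nginx

/apps/nginx/sbin/nginx -t

/apps/nginx/sbin/nginx

### Start nginx at boot; on CentOS 7, /etc/rc.d/rc.local only runs if it is executable

echo '/apps/nginx/sbin/nginx' >> /etc/rc.local

chmod +x /etc/rc.d/rc.local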
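As an alternative to rc.local (not the author's method), a systemd unit lets systemd start, reload and restart the proxy. A hedged sketch that writes such a unit for the `/apps/nginx` prefix used above; `write_nginx_unit` is a hypothetical name and it assumes nginx's default pid file location, `<prefix>/logs/nginx.pid`.

```shell
# Hypothetical helper: write a systemd unit for the nginx built above
# (prefix /apps/nginx) to the file given as $1.
write_nginx_unit() {
    cat > "$1" <<'EOF'
[Unit]
Description=nginx layer-4 proxy for nginx-ingress
After=network-online.target
Wants=network-online.target

[Service]
Type=forking
PIDFile=/apps/nginx/logs/nginx.pid
ExecStartPre=/apps/nginx/sbin/nginx -t
ExecStart=/apps/nginx/sbin/nginx
ExecReload=/apps/nginx/sbin/nginx -s reload
ExecStop=/apps/nginx/sbin/nginx -s quit

[Install]
WantedBy=multi-user.target
EOF
}
```

Usage: `write_nginx_unit /etc/systemd/system/nginx.service && systemctl daemon-reload && systemctl enable nginx`. Use either this or the rc.local line, not both, and stop a manually started nginx (`/apps/nginx/sbin/nginx -s quit`) before `systemctl start nginx`, since two instances cannot bind ports 80 and 443.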

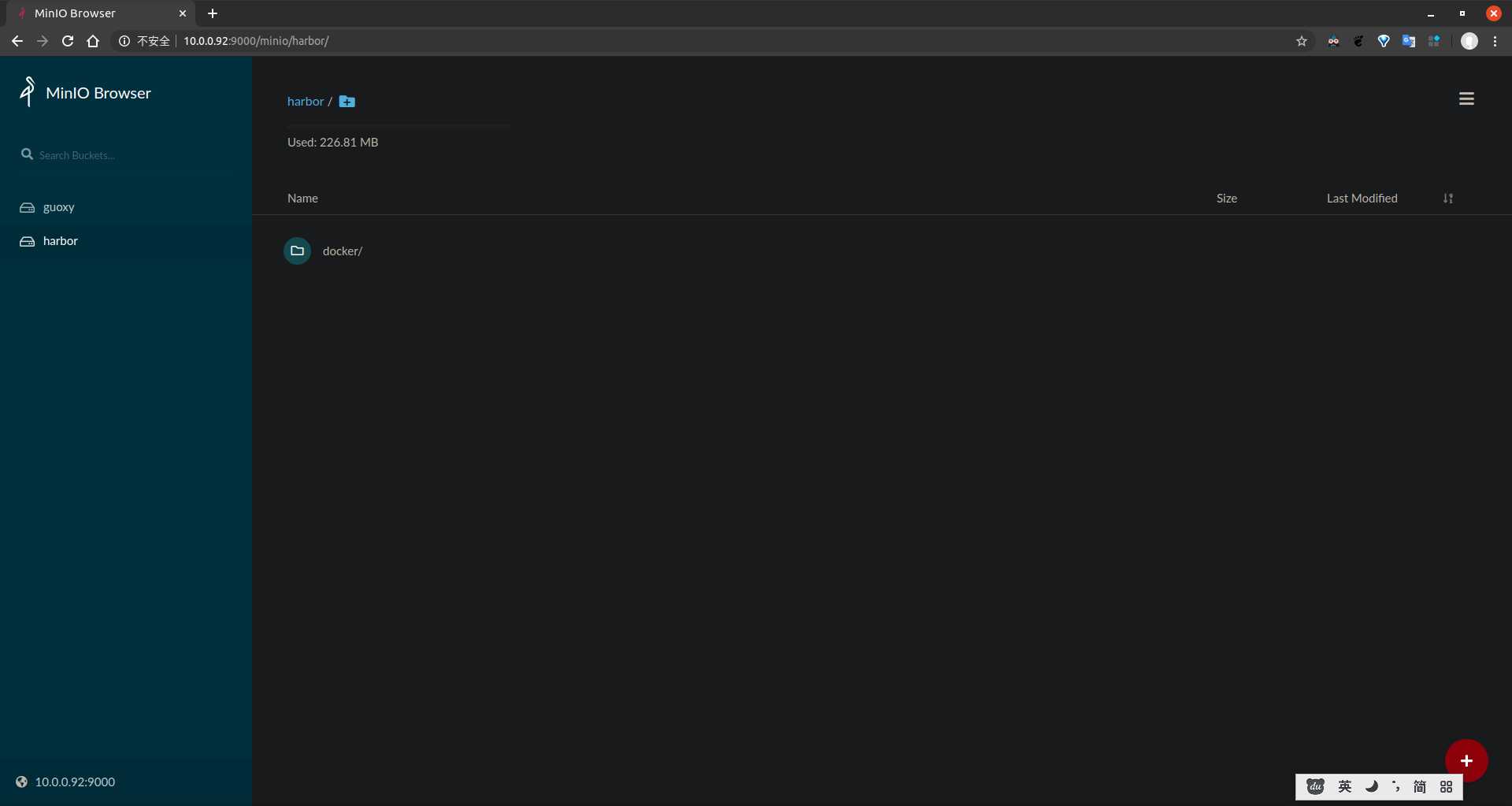

## 7. Finally, test by pushing an image to Harbor. Then check whether a docker directory exists in MinIO's harbor bucket; if it does, the setup works.
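The final check can be scripted too. A minimal sketch, assuming mc's usual listing format where each line ends with the object or prefix name; `bucket_has_docker_dir` is a hypothetical name.

```shell
# Hypothetical helper: read `mc ls minio/harbor` output on stdin and succeed
# if a top-level "docker/" prefix is listed.
bucket_has_docker_dir() {
    awk '$NF == "docker/" { found = 1 } END { exit !found }'
}
```

Usage, with busybox as an example image pushed to Harbor's default `library` project: `docker login harbor.guofire.xyz`, then `docker tag busybox harbor.guofire.xyz/library/busybox:latest && docker push harbor.guofire.xyz/library/busybox:latest`, and finally `mc ls minio/harbor | bucket_has_docker_dir && echo "images are stored in MinIO"`.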
