Kubernetes High-Availability Cluster Installation (Binary Installation, v1.20.2)

2021-03-01 17:28

Original article: https://www.cnblogs.com/zhugq02/p/14401161.html

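1. The five Kubernetes components

Three components on the master nodes

kube-apiserver

The single entry point to the whole cluster. It provides authentication, authorization, access control, and API registration and discovery.

kube-controller-manager

The controller manager. It maintains cluster state (failure detection, automatic scaling, rolling updates, and so on) and drives resources toward their desired state.

kube-scheduler

The scheduler. It places Pods on suitable nodes according to scheduling policy, in two phases: filtering (predicates) and scoring (priorities).

Two components on the worker nodes

kubelet

An agent that runs on every node in the cluster. The kubelet uses several mechanisms to make sure containers are running and healthy. It does not manage containers that were not created by Kubernetes. It receives the desired state of a Pod (replica count, image, network, and so on) and calls the container runtime to reach that state.

The kubelet periodically reports node status to the apiserver, which the scheduler uses as the basis for its decisions. It also cleans up unused images and containers so that they do not waste disk space.

kube-proxy

kube-proxy is a network proxy that runs on every node and is one of the components that implement the Service resource. It connects the Pod network with the cluster (Service) network. kube-proxy on each node watches the apiserver (which is backed by etcd) for Service and endpoint changes and updates the node's forwarding rules accordingly.

Service traffic can be forwarded in three modes: userspace (deprecated, very poor performance), iptables (the default; the rule set becomes complex and slower to evaluate as the number of Services grows), and ipvs (good performance, clear forwarding model).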

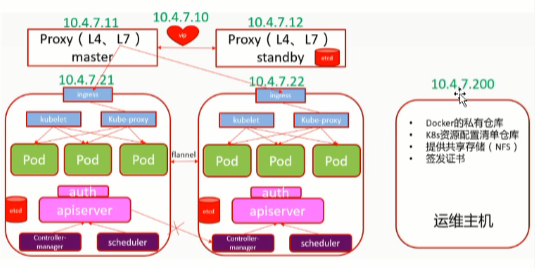

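2. Cluster architecture

3. Building the cluster

3.1 Basic machine configuration

Perform the following configuration on all 6 machines.

3.1.1 Set the hostname

Set the hostnames to km1, km2, kn1, and kn2, matching the /etc/hosts entries.

3.1.2 Configure the hosts file

Edit /etc/hosts on each machine: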

cat >> /etc/hosts << EOF
10.252.4.11 km1
10.252.4.12 km2
10.252.4.14 kn1
10.252.4.15 kn2
EOF
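Because `cat >>` appends unconditionally, running the step twice duplicates the entries. The following is a minimal sketch of an idempotent variant. The helper name `add_host_entry` and the scratch file are illustrative assumptions and not part of the original procedure.

```shell
# Append "IP NAME" to a hosts file only if NAME is not already present.
# add_host_entry is a hypothetical helper; on a real machine the first
# argument would be /etc/hosts (requires root).
add_host_entry() {
    hosts_file="$1"; ip="$2"; name="$3"
    # -w matches NAME as a whole word, so km1 does not match km10
    if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Demo against a scratch file instead of the real /etc/hosts
demo_hosts=$(mktemp)
for entry in "10.252.4.11 km1" "10.252.4.12 km2" "10.252.4.14 kn1" "10.252.4.15 kn2"; do
    set -- $entry                      # split "IP NAME" into $1 and $2
    add_host_entry "$demo_hosts" "$1" "$2"
done
add_host_entry "$demo_hosts" 10.252.4.11 km1   # second call adds nothing
cat "$demo_hosts"
```

The check is by host name only, so an entry whose IP has changed would still need to be edited by hand.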

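3.1.3 Disable the firewall and SELinux

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

3.1.4 Disable swap

swapoff -a

To disable swap permanently, edit /etc/fstab and comment out the swap line.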
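Commenting out the swap line can also be scripted. The sketch below is one possible approach, not the author's method: `comment_swap_lines` is a hypothetical filter that reads fstab content on stdin and prints the edited content, so the real file is never touched until the result has been reviewed. The device names in the demo are made up.

```shell
# Comment out every active swap entry in fstab-formatted input.
# comment_swap_lines is a hypothetical helper (stdin -> stdout).
comment_swap_lines() {
    # Leave existing comment lines alone; prefix "#" to lines that
    # contain a whitespace-delimited "swap" field.
    sed -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/'
}

# Demo on sample content
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' |
    comment_swap_lines
```

On a real node this could be used as `comment_swap_lines < /etc/fstab > /tmp/fstab.new`, followed by a manual diff before copying the file back.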

3.1.5 Time synchronization

yum install -y chrony

systemctl start chronyd

systemctl enable chronyd

chronyc sources

3.1.6 Modify kernel parameters

cat > /etc/sysctl.d/k8s.conf

3.1.7 Load the IPVS modules

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

lsmod | grep ip_vs

lsmod | grep nf_conntrack_ipv4

yum install -y ipvsadm
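The kernel-parameter step above writes /etc/sysctl.d/k8s.conf, but the body of that file is not reproduced in this article. As a rough sketch only, the three keys below are the ones commonly set for Kubernetes networking. They are an assumption, not the author's actual file, and `write_k8s_sysctl` is a hypothetical helper.

```shell
# Write commonly used Kubernetes kernel parameters to a sysctl drop-in.
# ASSUMPTION: these keys are typical values, not taken from the original article.
write_k8s_sysctl() {
    target="$1"
    cat > "$target" << 'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}

# Demo: write to a scratch file. On a real node the target would be
# /etc/sysctl.d/k8s.conf, applied afterwards with "sysctl --system" as root.
demo_sysctl=$(mktemp)
write_k8s_sysctl "$demo_sysctl"
cat "$demo_sysctl"
```

The `net.bridge.*` keys only exist once the `br_netfilter` module is loaded, so `modprobe br_netfilter` would normally come before applying the file.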

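3.2 Configure the working directory

Every machine needs certificate files, component configuration files, and component service unit files. km1 is used to generate all of them centrally and then distribute them to the other machines. Run the following on km1:

[root@km1 ~]# mkdir -p /data/work

Note: this directory is where the configuration and certificate files are generated. All file-generation steps below are run inside it.

Distribute the SSH key to the other five machines so that km1 can log in to them without a password. Repeat the ssh-copy-id step for each remaining host:

[root@km1 ~]# ssh-keygen -t rsa -b 2048

[root@km1 ~]# ssh-copy-id -i .ssh/id_rsa.pub km2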

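3.3 Deploy the etcd cluster

3.3.1 Prepare the etcd directories

[root@km1 ~]# mkdir -p /etc/etcd # directory for configuration files

[root@km1 ~]# mkdir -p /etc/etcd/ssl # directory for certificate files

3.3.2 Create the etcd certificates

Download the tools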

[root@km1 ~]# cd /data/work/

[root@km1 work]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@km1 work]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@km1 work]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd

Configure the tools

[root@km1 work]# chmod +x cfssl*

[root@km1 work]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@km1 work]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@km1 work]# mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

Configure the CA certificate signing request file

[root@km1 work]# cat ca-csr.json

{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "beijing",
      "O": "k8s",
      "OU": "system"
    }
  ],
  "ca": {
    "expiry": "175200h"
  }
}

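Note:

CN: Common Name. kube-apiserver extracts this field from a certificate and uses it as the user name of the request. Browsers use the same field to verify that a website is legitimate.

O: Organization. kube-apiserver extracts this field from a certificate and uses it as the group that the requesting user belongs to.

Create the CA certificate

[root@km1 work]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca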
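To make the CN and O mapping concrete, the sketch below pulls both fields out of a subject string. It is an illustration only: `subject_field` is a hypothetical helper, and the sample subject is simply assembled from the names in ca-csr.json rather than read from a real certificate.

```shell
# Extract one attribute (CN, O, OU, ...) from a certificate subject string.
# subject_field is a hypothetical helper for illustration.
subject_field() {
    # $1: attribute name, $2: subject with comma-separated attributes
    printf '%s\n' "$2" | tr ',' '\n' |
        sed -n "s/^[[:space:]]*$1[[:space:]]*=[[:space:]]*//p" | head -n 1
}

# Sample subject assembled from the fields in ca-csr.json
subject='C = CN, ST = Beijing, L = beijing, O = k8s, OU = system, CN = kubernetes'
echo "user name (CN): $(subject_field CN "$subject")"
echo "group (O):      $(subject_field O "$subject")"
```

For a real certificate, `openssl x509 -in ca.pem -noout -subject` prints the subject line. Its exact layout differs between OpenSSL versions, so the parsing above may need adjusting.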

Configure the CA signing policy

[root@km1 work]# cat ca-config.json

{
  "signing": {
    "default": {
      "expiry": "175200h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "175200h"
      }
    }
  }
}

Configure the etcd certificate signing request file

[root@km1 work]# vim etcd-csr.json

{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "10.252.4.11",
    "10.252.4.12",
    "10.252.4.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [{
    "C": "CN",
    "ST": "Beijing",
    "L": "Beijing",
    "O": "k8s",
    "OU": "system"
  }]
}

Generate the certificate

[root@km1 work]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

[root@km1 work]# ls etcd*.pem

3.3.3 Deploy the etcd cluster

[root@km1 work]# wget https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

[root@km1 work]# tar -xf etcd-v3.4.13-linux-amd64.tar.gz

[root@km1 work]# cp -p etcd-v3.4.13-linux-amd64/etcd* /usr/local/bin/

[root@km1 work]# rsync -vaz etcd-v3.4.13-linux-amd64/etcd* kn2:/usr/local/bin/

Create the configuration file

[root@km1 work]# vim etcd.conf

#[Member]
ETCD_NAME="etcd1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://10.252.4.11:2380"
ETCD_LISTEN_CLIENT_URLS="https://10.252.4.11:2379,http://127.0.0.1:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.252.4.11:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.252.4.11:2379"
ETCD_INITIAL_CLUSTER="etcd1=https://10.252.4.11:2380,etcd2=https://10.252.4.12:2380,etcd3=https://10.252.4.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"

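Note:

ETCD_NAME: member name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: address to listen on for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS: address to listen on for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the rest of the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of all cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining the cluster; new means a new cluster, existing means joining an existing cluster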
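Only ETCD_NAME and the member's own IP differ between the three nodes. The following sketch generates a per-member file from those two values instead of editing copies by hand. `render_etcd_conf` is a hypothetical helper; the member names and addresses are the ones used in this article.

```shell
# Print an etcd.conf for one member.
# render_etcd_conf is a hypothetical helper: $1 = member name, $2 = member IP.
render_etcd_conf() {
    name="$1"; ip="$2"
    cat << EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379,http://127.0.0.1:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="etcd1=https://10.252.4.11:2380,etcd2=https://10.252.4.12:2380,etcd3=https://10.252.4.13:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# The member list stays the same; only name and own IP change
render_etcd_conf etcd2 10.252.4.12
```

The output can be redirected to a file per node, for example `render_etcd_conf etcd2 10.252.4.12 > etcd.conf.km2`, where the file name is just a suggestion.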

Create the service unit file

Option 1: start with a configuration file

[root@km1 work]# vim etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=-/etc/etcd/etcd.conf
WorkingDirectory=/var/lib/etcd/
ExecStart=/usr/local/bin/etcd \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-client-cert-auth \
  --client-cert-auth
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Sync the files to each node

[root@km1 work]# cp ca*.pem etcd*.pem /etc/etcd/ssl/

[root@km1 work]# cp etcd.conf /etc/etcd/

[root@km1 work]# cp etcd.service /usr/lib/systemd/system/

[root@km1 work]# scp ca*.pem etcd*.pem km2:/etc/etcd/ssl/

[root@km1 work]# scp etcd.conf km2:/etc/etcd/

[root@km1 work]# scp etcd.service km2:/usr/lib/systemd/system/

[root@km1 work]# scp ca*.pem etcd*.pem kn:/etc/etcd/ssl/

[root@km1 work]# scp etcd.conf kn:/etc/etcd/

[root@km1 work]# scp etcd.service kn:/usr/lib/systemd/system/

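Note: on km2 and kn, change the etcd name and IP addresses in the configuration file, and create the directory /var/lib/etcd/default.etcd.

[root@km1 work]# mkdir -p /var/lib/etcd/default.etcd

Start the etcd cluster

[root@km1 work]# systemctl daemon-reload

[root@km1 work]# systemctl start etcd.service

[root@km1 work]# systemctl status etcd

Note: start all three nodes at the same time.

Check the cluster status

etcdctl member list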
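Because the members serve clients over TLS, a health check against all three usually passes the certificates explicitly. The sketch below assumes the certificate paths used earlier in this article. `etcd_endpoints` is a hypothetical helper, and the etcdctl call is skipped on machines where the binary or the certificate is missing.

```shell
# Build the --endpoints value from a list of member IPs.
# etcd_endpoints is a hypothetical helper.
etcd_endpoints() {
    joined=""
    for ip in "$@"; do
        joined="${joined:+$joined,}https://$ip:2379"
    done
    printf '%s\n' "$joined"
}

ENDPOINTS=$(etcd_endpoints 10.252.4.11 10.252.4.12 10.252.4.13)
echo "$ENDPOINTS"

# Run the health check only where etcdctl and the client certificate exist
if command -v etcdctl >/dev/null 2>&1 && [ -r /etc/etcd/ssl/etcd.pem ]; then
    ETCDCTL_API=3 etcdctl \
        --cacert=/etc/etcd/ssl/ca.pem \
        --cert=/etc/etcd/ssl/etcd.pem \
        --key=/etc/etcd/ssl/etcd-key.pem \
        --endpoints="$ENDPOINTS" \
        endpoint health
fi
```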

3.4 Deploy the Kubernetes components

3.4.1 Download the packages

[root@km1 work]# wget https://dl.k8s.io/v1.20.1/kubernetes-server-linux-amd64.tar.gz

[root@km1 work]# tar -xf kubernetes-server-linux-amd64.tar.gz

[root@km1 work]# cd kubernetes/server/bin/

[root@km1 bin]# cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

[root@km1 bin]# scp kube-apiserver kube-controller-manager kube-scheduler kubectl km2:/usr/local/bin/

[root@km1 bin]# scp kubelet kube-proxy kn:/usr/local/bin/

[root@km1 bin]# cd /data/work/

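3.4.2 Create the working directories

[root@km1 work]# mkdir -p /etc/kubernetes/ # configuration files for the Kubernetes components

[root@km1 work]# mkdir -p /etc/kubernetes/ssl # certificate files for the Kubernetes components

[root@km1 work]# mkdir /var/log/kubernetes # log files for the Kubernetes components

3.4.3 Deploy the API server

Create the CSR file

[root@km1 work]# vim kube-apiserver-csr.json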

{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.252.4.11",
    "10.252.4.12",
    "10.252.4.13",
    "10.252.4.10",
    "10.255.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "k8s",
      "OU": "system"
    }
  ]
}
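The hosts list above contains 10.255.0.1 next to the node addresses. That is the first usable address of the Service range 10.255.0.0/16 that this article later passes to kube-apiserver through --service-cluster-ip-range. As a small sketch of the arithmetic, the hypothetical helper `first_service_ip` computes network address plus one for an IPv4 CIDR.

```shell
# Print the first usable address of an IPv4 CIDR (network address + 1).
# first_service_ip is a hypothetical helper.
first_service_ip() {
    cidr="$1"
    net="${cidr%/*}"       # part before the slash, e.g. 10.255.0.0
    bits="${cidr#*/}"      # prefix length, e.g. 16
    # Split the dotted quad into $1..$4
    old_ifs=$IFS; IFS=.; set -- $net; IFS=$old_ifs
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    first=$(( (addr & mask) + 1 ))
    printf '%d.%d.%d.%d\n' \
        $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
        $(( (first >> 8) & 255 ))  $(( first & 255 ))
}

first_service_ip 10.255.0.0/16
```

If the Service range is changed, the corresponding entry in the hosts list has to change with it, otherwise in-cluster clients that reach the API server through the `kubernetes` Service will fail certificate verification.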

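Note:

If the hosts field is not empty, it must list the IPs or domain names that are authorized to use this certificate.

Because this certificate is used by the Kubernetes master cluster, include the IPs of all master nodes as well as the first IP of the Service network (normally the first address of the range given to kube-apiserver by --service-cluster-ip-range, for example 10.255.0.1).

Generate the certificate and the token file

[root@km1 work]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver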
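The token file created in the next step follows the static token file layout `token,user,uid,"groups"`. The sketch below shows how one such line is assembled. `make_bootstrap_token` is a hypothetical helper, and it uses `od -t x1` (byte-wise hex) rather than the `-t x` form in the article, which gives the same length of output.

```shell
# Generate a 32-character hexadecimal token from 16 random bytes.
# make_bootstrap_token is a hypothetical helper.
make_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

token=$(make_bootstrap_token)
# Columns of a static token file line: token,user,uid,"group"
printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
```

The same token value later has to be supplied to the kubelets for bootstrapping, so it should be generated once and then reused rather than regenerated per node.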

[root@km1 work]# cat > token.csv << EOF
$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

Create the configuration file

[root@km1 work]# vim kube-apiserver.conf

# --service-account-signing-key-file and --service-account-issuer are required from v1.20 onward.
KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \
  --anonymous-auth=false \
  --bind-address=10.252.4.11 \
  --secure-port=6443 \
  --advertise-address=10.252.4.11 \
  --insecure-port=0 \
  --authorization-mode=Node,RBAC \
  --runtime-config=api/all=true \
  --enable-bootstrap-token-auth \
  --service-cluster-ip-range=10.255.0.0/16 \
  --token-auth-file=/etc/kubernetes/token.csv \
  --service-node-port-range=30000-50000 \
  --tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \
  --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --client-ca-file=/etc/kubernetes/ssl/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \
  --kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \
  --service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \
  --etcd-cafile=/etc/etcd/ssl/ca.pem \
  --etcd-certfile=/etc/etcd/ssl/etcd.pem \
  --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
  --etcd-servers=https://10.252.4.11:2379,https://10.252.4.12:2379,https://10.252.4.13:2379 \
  --enable-swagger-ui=true \
  --allow-privileged=true \
  --apiserver-count=2 \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/var/log/kube-apiserver-audit.log \
  --event-ttl=1h \
  --alsologtostderr=true \
  --logtostderr=false \
  --log-dir=/var/log/kubernetes \
  --v=4"

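Note:

--logtostderr: log to standard error instead of files (set to false here so that logs go to --log-dir)

--v: log verbosity level

--log-dir: log directory

--etcd-servers: addresses of the etcd cluster

--bind-address: address to listen on

--secure-port: HTTPS port

--advertise-address: address advertised to the rest of the cluster

--allow-privileged: allow privileged containers

--service-cluster-ip-range: virtual IP range for Services

--enable-admission-plugins: admission control plugins

--authorization-mode: authorization modes; enables RBAC and Node authorization

--enable-bootstrap-token-auth: enable the TLS bootstrap mechanism

--token-auth-file: bootstrap token file

--service-node-port-range: port range allocated to NodePort Services

--kubelet-client-xxx: client certificate the apiserver uses to access the kubelet

--tls-xxx-file: HTTPS certificate of the apiserver

--etcd-xxxfile: certificates for connecting to the etcd cluster

--audit-log-xxx: audit log settings

Create the service unit file

[root@km1 work]# vim kube-apiserver.service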

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=etcd.service
Wants=etcd.service

[Service]
EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf
ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

Sync the files to each node

[root@km1 work]# cp ca*.pem kube-apiserver*.pem /etc/kubernetes/ssl/

[root@km1 work]# cp token.csv kube-apiserver.conf /etc/kubernetes/

[root@km1 work]# cp kube-apiserver.service /usr/lib/systemd/system/

[root@km1 work]# scp ca*.pem kube-apiserver*.pem km2:/etc/kubernetes/ssl/

[root@km1 work]# scp token.csv kube-apiserver.conf km2:/etc/kubernetes/

[root@km1 work]# scp kube-apiserver.service km2:/usr/lib/systemd/system/
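The copy of kube-apiserver.conf on km2 needs its own address in --bind-address and --advertise-address, while --etcd-servers must keep listing every etcd member. A blanket search-and-replace of 10.252.4.11 would break the latter. The sketch below rewrites only the two address flags. `retarget_apiserver_conf` is a hypothetical helper that reads the file on stdin and writes the result to stdout.

```shell
# Rewrite only the --bind-address and --advertise-address values.
# retarget_apiserver_conf is a hypothetical helper: $1 = new node IP.
retarget_apiserver_conf() {
    new_ip="$1"
    sed -E "s/(--(bind|advertise)-address=)[0-9.]+/\1${new_ip}/g"
}

# Demo on a shortened option string; the etcd endpoint is left untouched
printf '%s\n' '--bind-address=10.252.4.11 --advertise-address=10.252.4.11 --etcd-servers=https://10.252.4.11:2379' |
    retarget_apiserver_conf 10.252.4.12
```

A possible use is `retarget_apiserver_conf 10.252.4.12 < kube-apiserver.conf > kube-apiserver.conf.km2`, with the output file name chosen freely, before copying the result to km2.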

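Note: in the configuration files on km1 and km2, change the IP addresses to the actual local IP of each node.

Start the service

[root@km1 work]# systemctl daemon-reload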

[root@km1 work]# systemctl start kube-apiserver

[root@km1 work]# systemctl status kube-apiserver

[root@km1 work]# systemctl enable kube-apiserver

[root@km1 work]# netstat -nltup|grep kube-api

3.4.4 Deploy the layer-4 reverse proxy

Install NGINX and keepalived on each km node:

[root@km1 work]# yum install nginx keepalived -y

[root@km1 work]# vi /etc/nginx/nginx.conf

stream {
    upstream kube-apiserver {
        server 10.252.4.11:6443 max_fails=3 fail_timeout=30s;
        server 10.252.4.12:6443 max_fails=3 fail_timeout=30s;
    }
    server {
        listen 7443;
        proxy_connect_timeout 2s;
        proxy_timeout 900s;
        proxy_pass kube-apiserver;
    }
}

[root@km1 work]# nginx -t

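Health-check script: `vi /etc/keepalived/check_port.sh`

#!/bin/bash
# Exit non-zero when nothing is listening on the given TCP port.
CHK_PORT=$1
if [ -n "$CHK_PORT" ]; then
    # Count listening sockets whose local address ends in :$CHK_PORT
    PORT_PROCESS=$(ss -lnt | grep -c ":$CHK_PORT ")
    if [ "$PORT_PROCESS" -eq 0 ]; then
        echo "Port $CHK_PORT Is Not Used,End."
        exit 1
    fi
else
    echo "Check Port Cant Be Empty!"
    exit 1
fi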

[root@km1 work]# chmod +x /etc/keepalived/check_port.sh
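When matching a port in `ss -lnt` output, it helps to compare the port column exactly, so that a check for 7443 is not satisfied by a listener on 17443 or 74430. The sketch below does this with awk. `port_is_listening` is a hypothetical helper that reads `ss -lnt` style text on stdin, which also makes it easy to try on sample output.

```shell
# Succeed if `ss -lnt` style input on stdin shows a listener on exactly PORT.
# port_is_listening is a hypothetical helper: $1 = port number.
port_is_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" {
            n = split($4, parts, ":")      # column 4 is Local Address:Port
            if (parts[n] == port) found = 1
        }
        END { exit(found ? 0 : 1) }
    '
}

# Sample output with listeners on 17443 and 22, but none on 7443
sample='State  Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0      128    0.0.0.0:17443      0.0.0.0:*
LISTEN 0      128    [::]:22            [::]:*'

if printf '%s\n' "$sample" | port_is_listening 7443; then
    echo "7443 is listening"
else
    echo "7443 is not listening"
fi
```

On a live node the equivalent call would be `ss -lnt | port_is_listening 7443`.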

Configuration files

keepalived master:

[root@km1 work]# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
    router_id 10.252.4.11
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_port.sh 7443"
    interval 2
    weight -20
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 251
    priority 100
    advert_int 1
    mcast_src_ip 10.252.4.11
    nopreempt
    authentication {
        auth_type PASS
        auth_pass 11111111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        10.252.4.10
    }
}

keepalived backup:

! Configuration File for keepalived
global_defs {
    router_id 10.252.4.12
}
vrrp_script chk_nginx {
    script "/etc/keepalived/check_port.sh 7443"
    interval 2
    weight -20
}
vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 251
    mcast_src_ip 10.252.4.12
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 11111111
    }
    track_script {
        chk_nginx
    }
    virtual_ipaddress {
        10.252.4.10
    }
}

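nopreempt: non-preemptive mode. A recovered higher-priority node does not take the VIP back from the node that currently holds it. The keepalived documentation states that this option only takes effect when the instance's initial state is BACKUP.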
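The failover in the two configurations above depends on simple arithmetic: when chk_nginx fails on km1, the weight of -20 lowers its priority from 100 to 80, which is below the 90 configured on km2. The sketch below models only that simple case (one tracked script with a negative weight). `effective_priority` is a hypothetical helper, not a keepalived command.

```shell
# Effective VRRP priority after applying a vrrp_script weight.
# effective_priority is a hypothetical helper:
#   $1 = configured priority, $2 = script weight, $3 = "yes" if the check passes
effective_priority() {
    base="$1"; weight="$2"; check_ok="$3"
    if [ "$check_ok" = "yes" ]; then
        echo "$base"
    else
        echo $(( base + weight ))     # a negative weight lowers the priority
    fi
}

master=$(effective_priority 100 -20 no)    # nginx port check fails on km1
backup=$(effective_priority 90 -20 yes)    # nginx port check passes on km2
echo "km1=$master km2=$backup"
if [ "$backup" -gt "$master" ]; then
    echo "km2 takes over the VIP 10.252.4.10"
fi
```

If the weight were smaller in magnitude than the gap between the two priorities (for example -5), km1 would keep the VIP even with NGINX down, so the three numbers have to be chosen together.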

Start the proxy and verify

systemctl start nginx keepalived

systemctl enable nginx keepalived

netstat -lntup|grep nginx

ip add
