Using WCF with .NET Core

2021-03-04 14:27

Recently I was going through the motions upgrading an ASP.NET Core 2.0 website to 2.2. Overall the process was fairly straightforward, minus some gotchas. We were attempting to switch the website from targeting the full framework (net47) to target netcoreapp2.2 but that caused a cascade of problems. One such problem was WCF. Today we’ll discuss using WCF with .NET Core and some of the gotchas you may run into.

Code from today’s post can be located on my GitHub.

From whence we came

Windows Communication Foundation (WCF) is a framework that shipped with .NET Framework 3.0 in 2006. It expanded greatly on the ASMX services from older .NET Framework versions and is meant to replace them. Part of the power introduced with WCF is its ability to be hosted anywhere (console application, Windows Service, etc.), whereas ASMX has to run inside a web application.

WCF is a massive framework/toolset in and of itself and the learning curve can be steep. It is a powerful framework, however, so climbing that curve is worth it.

Using the WCF Web Service Reference

As with most things Microsoft, there is an easy way and a hard way. We’re going to start off with the easy way. Look no farther than their own documentation. This is going to generate all the proxy classes and “configuration” for you. I put “configuration” in quotations on purpose.

Running svcutil (or the Visual Studio equivalent via Add Service Reference) will generate default configuration so the client can load based on configuration. To make a really long story short this ultimately is how it pools the channel connections so it doesn’t have to continually open and close connections and have awful performance. I don’t want to spill the beans yet but trust that we’ll come back to this.

When you add a Connected Service for netcore using the tools it will mimic configuration. Read that again. It will mimic configuration. Go ahead, do it, pull open the Reference.cs file and tell me where you see it actually loading based on configuration. Oh, you can’t find it? Yeah, me either. You know why? The full framework client is heavily married to the XML-based configuration in system.serviceModel, and the netcore WCF client libraries don’t read it.

Here is what a configuration *might* look like if you generated a service reference in a full framework application:
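Something along these lines, for example. This is a hand-written sketch, not output from the tooling: the binding name, endpoint name, and contract namespace are placeholders, and the address is borrowed from the EmployeeService settings shown later in this post.

```xml
<configuration>
  <system.serviceModel>
    <bindings>
      <netTcpBinding>
        <!-- Placeholder binding name; the tooling normally derives one from the endpoint -->
        <binding name="NetTcpBinding_IEmployeeService" />
      </netTcpBinding>
    </bindings>
    <client>
      <!-- address, contract, and name are assumptions for illustration -->
      <endpoint address="net.tcp://localhost:9118/EmployeeService/"
                binding="netTcpBinding"
                bindingConfiguration="NetTcpBinding_IEmployeeService"
                contract="EmployeeServiceReference.IEmployeeService"
                name="NetTcpBinding_IEmployeeService" />
    </client>
  </system.serviceModel>
</configuration>
```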

Thing is… adding the service reference this way doesn’t give you much leeway if you have multiple environments like we did in our migration. We have five test environments, one “staging” or “training” environment, and then live. We needed a way to have a configuration based deploy. Let’s look at that next.

Using ClientBase

Anybody who’s been around the track a couple of times with WCF will hopefully know about ClientBase. Automatically generated service references use this under the hood. Visual Studio hides this away from you a bit in full framework since it hides the files. However, the netcore version has Reference.cs right there for you to look at. Either way, it’s available in the filesystem on both.

ClientBase has a lot of magic under the hood. I previously mentioned that it will handle connection pooling on the channel. If you dig into ClientBase with the configuration-based pathway you’ll see that it caches ChannelFactory. That’s only half of it. ChannelFactory also has some caching/pooling. I invite you to read more about that here. If you do not go the configuration-based route then it does not cache your ChannelFactory and you lose connection pooling. Say that with me again.

Let’s sidestep that issue for a minute because honestly it didn’t come up for me until I was well into a pretty sweet path for “configuration-based” setup on our WCF clients in netcore. Man, was I in for a major letdown late into the party.

ClientBase

For today’s demo I’m going to assume I have a service called IEmployeeService hosted from a full framework application. I place all my models and contracts in a netstandard shared library. I have another netstandard library that has the client proxies and some “proxy factories” that I came up with while trying to figure out how to get this all working.

    [ServiceContract]
    public interface IEmployeeService
    {
        [OperationContract]
        EmployeeResponse Get(EmployeeRequest request);

        [OperationContract]
        Employee UpdateEmployee(Employee employee);

        [OperationContract]
        DeleteEmployeeResponse Delete(DeleteEmployeeRequest request);
    }

Let me take a quick aside. My particular situation has a lot of legacy code that is tightly coupled to these proxies. I really didn’t want to reinvent the wheel or break things out at this time so it seemed “easier” to have these ServiceFacades (as we called them) use an IClientProxyFactory I made up instead of a direct client reference. Hopefully as I continue this will make sense. Suffice it to say that if you *don’t* have that problem then you might not have gone the same direction I did. I did have that problem. So I did.

Anyway, so I’m going to create my own client proxy called EmployeeClient and have it inherit ClientBase. I’m also going to have it implement IEmployeeService so all the contracts match exactly.

    public class EmployeeClient : ClientBase<IEmployeeService>, IEmployeeService
    {
        // The parameterless constructor makes ClientBase look up the endpoint in configuration.
        public EmployeeClient()
            : base() { }

        public DeleteEmployeeResponse Delete(DeleteEmployeeRequest request)
        {
            return Channel.Delete(request);
        }

        public EmployeeResponse Get(EmployeeRequest request)
        {
            return Channel.Get(request);
        }

        public Employee UpdateEmployee(Employee employee)
        {
            return Channel.UpdateEmployee(employee);
        }
    }

Assuming I was in a full framework library with the app.config stuff posted above, this would now work. I’m not though, right? That’s why you’re here.

Running this in a netcore application that targets netcore will throw an exception because it cannot load the configuration. Running in a netcore application that targets full framework and has an app.config will work because it can load the configuration.
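One way around that exception is to skip the configuration lookup and hand ClientBase a binding and an address in code. Here is a minimal sketch of that idea, assuming the System.ServiceModel.Primitives and System.ServiceModel.NetTcp packages are referenced. The contract is trimmed to a single operation, the DTOs are empty placeholders, and the names ManualEmployeeClient and ManualClientDemo are made up for this example.

```csharp
using System.ServiceModel;
using System.ServiceModel.Channels;

// Placeholder DTOs so the sketch compiles on its own; the real ones live in the shared library.
public class EmployeeRequest { }
public class EmployeeResponse { }

// Trimmed copy of the contract with a single operation.
[ServiceContract]
public interface IEmployeeService
{
    [OperationContract]
    EmployeeResponse Get(EmployeeRequest request);
}

// Hypothetical client: same idea as EmployeeClient, but with no parameterless constructor.
public class ManualEmployeeClient : ClientBase<IEmployeeService>, IEmployeeService
{
    // ClientBase<T> has a (Binding, EndpointAddress) constructor that needs no config file.
    public ManualEmployeeClient(Binding binding, EndpointAddress remoteAddress)
        : base(binding, remoteAddress) { }

    public EmployeeResponse Get(EmployeeRequest request) => Channel.Get(request);
}

public static class ManualClientDemo
{
    public static ManualEmployeeClient Create()
    {
        // Hard-coded here for brevity; the rest of the post is about making these configurable.
        var binding = new NetTcpBinding(SecurityMode.None);
        var address = new EndpointAddress("net.tcp://localhost:9118/EmployeeService/");
        return new ManualEmployeeClient(binding, address);
    }
}
```

Constructing the client does not open a connection, so the snippet can be exercised without a running service. The catch, as discussed further down, is that this path gives up the ChannelFactory caching that the configuration-based constructor provides.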

Adding our own flavor of configuration

I got some initial inspiration from this StackOverflow question. Taking his basis and then extending on it, I gotta admit, I think it was pretty genius. I decided I would mimic system.serviceModel inside my appsettings.json and even set up the ability to have default configuration values for a fallback. We had something like 12 services and I wanted this to be “easy” to configure.

In my appsettings.json, then, I added a “Services” entry which consisted of two trees. “BasicHttp” and “NetTcp”. Now clearly this could have more since there are multiple other types of bindings. In my case, these are the two I use. Within each tree then comes the service configurations themselves as well as a “Default” entry. Here’s an example:

    "Services": {
      "BasicHttp": {
        "TestFileService": {
          "address": "http://localhost:9104/TestFileService/"
        },
        "DefaultFileService": {
          "transferMode": "Streamed"
        }
      },
      "NetTcp": {
        "EmployeeService": {
          "address": "net.tcp://localhost:9118/EmployeeService/",
          "maxItemsInObjectGraph": 750000
        },
        "Default": {
          "dnsIdentity": "localhost",
          "closeTimeout": "00:10:00",
          "openTimeout": "00:10:00",
          "receiveTimeout": "00:10:00",
          "sendTimeout": "00:10:00",
          "maxBufferSize": 20524888,
          "maxReceivedMessageSize": 20524888,
          "maxDepth": 150,
          "maxStringContentLength": 20400320,
          "maxArrayLength": 20524888,
          "maxBytesPerRead": 16384,
          "maxNameTableCharCount": 524888,
          "securityMode": "None"
        }
      }
    }

I set up some models to load those configuration values and got them into my injection container. Next I needed a way to have something load the appropriate configuration and give me the values, whether a specific override or the default (if any). I decided to set up some interfaces called IClientProxySettings and IBasicHttpClientProxySettings. These would ultimately be what is injected into an IClientProxyFactory which I previously mentioned.

Ultimately the premise is that if our IClientProxySettings is disabled then we will fall back to configuration-based loading of the WCF client. For that I created a concrete class for use in my full framework projects called DefaultClientProxySettings which has the Enabled property set to false. The magic for my netcore applications, however, was in the ClientProxySettings and BasicHttpProxySettings concretes I created.

ClientProxySettings

Let’s look at ClientProxySettings. Its constructor is going to take a “key” and an IOptionsSnapshot<ServiceSettings>, which is what loads our settings from the appsettings.json file. Overall it is pretty straightforward. If the specific configuration has a value, return it, otherwise return the default.

    public class ClientProxySettings : IClientProxySettings
    {
        private ServiceSettings _coreSettings;
        private ServiceSettings _defaultSettings;

        public ClientProxySettings(string configurationKey, Microsoft.Extensions.Options.IOptionsSnapshot<ServiceSettings> settingsSnapshot)
        {
            // gets the named (service-specific) value
            CoreSettings = settingsSnapshot.Get(configurationKey);

            // gets the default value
            DefaultSettings = settingsSnapshot.Value;
        }

        public bool Enabled { get => true; }

        public string Address { get => CoreSettings.Address /*Settings.Address*/; }

        public string DnsIdentity { get => Logic.StringTool.SelectStringValue(CoreSettings.DnsIdentity, DefaultSettings.DnsIdentity); }

        public TimeSpan? CloseTimeout { get => CoreSettings.CloseTimeout ?? DefaultSettings.CloseTimeout; }

        public TimeSpan? OpenTimeout { get => CoreSettings.OpenTimeout ?? DefaultSettings.OpenTimeout; }

        public TimeSpan? ReceiveTimeout { get => CoreSettings.ReceiveTimeout ?? DefaultSettings.ReceiveTimeout; }

        public TimeSpan? SendTimeout { get => CoreSettings.SendTimeout ?? DefaultSettings.SendTimeout; }

        public long? MaxBufferPoolSize { get => CoreSettings.MaxBufferPoolSize ?? DefaultSettings.MaxBufferPoolSize; }

        public int? MaxBufferSize { get => CoreSettings.MaxBufferSize ?? DefaultSettings.MaxBufferSize; }

        public int? MaxItemsInObjectGraph { get => CoreSettings.MaxItemsInObjectGraph ?? DefaultSettings.MaxItemsInObjectGraph; }

        public long? MaxReceivedMessageSize { get => CoreSettings.MaxReceivedMessageSize ?? DefaultSettings.MaxReceivedMessageSize; }

        public int? MaxArrayLength { get => CoreSettings.MaxArrayLength ?? DefaultSettings.MaxArrayLength; }

        public int? MaxBytesPerRead { get => CoreSettings.MaxBytesPerRead ?? DefaultSettings.MaxBytesPerRead; }

        public int? MaxDepth { get => CoreSettings.MaxDepth ?? DefaultSettings.MaxDepth; }

        public int? MaxNameTableCharCount { get => CoreSettings.MaxNameTableCharCount ?? DefaultSettings.MaxNameTableCharCount; }

        public int? MaxStringContentLength { get => CoreSettings.MaxStringContentLength ?? DefaultSettings.MaxStringContentLength; }

        public string SecurityMode { get => Logic.StringTool.SelectStringValue(CoreSettings.SecurityMode, DefaultSettings.SecurityMode); }

        public TransferMode? TransferMode { get => CoreSettings.TransferMode ?? DefaultSettings.TransferMode; }

        protected ServiceSettings DefaultSettings { get => _defaultSettings; set => _defaultSettings = value; }

        protected ServiceSettings CoreSettings { get => _coreSettings; set => _coreSettings = value; }
    }

How did I register that into my DI container though? Some creative usage of named registrations, that’s how. I’d say look at the demo code for that.
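For readers who don’t want to open the demo code, here is one way the options side of that could be wired up. This is an assumption about the approach, not a copy of the demo project: it uses the named options support in Microsoft.Extensions.Options so that `Get("EmployeeService")` returns the service-specific section and `Value` returns the “Default” section. The ServiceSettings class is cut down to three properties, an in-memory dictionary stands in for appsettings.json, and NamedOptionsDemo is a made-up name.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Options;

// Trimmed-down stand-in for the ServiceSettings model.
public class ServiceSettings
{
    public string Address { get; set; }
    public TimeSpan? SendTimeout { get; set; }
    public int? MaxItemsInObjectGraph { get; set; }
}

public static class NamedOptionsDemo
{
    public static IServiceProvider Build()
    {
        // In-memory stand-in for the "Services:NetTcp" tree of appsettings.json.
        var config = new ConfigurationBuilder()
            .AddInMemoryCollection(new Dictionary<string, string>
            {
                ["Services:NetTcp:EmployeeService:address"] = "net.tcp://localhost:9118/EmployeeService/",
                ["Services:NetTcp:EmployeeService:maxItemsInObjectGraph"] = "750000",
                ["Services:NetTcp:Default:sendTimeout"] = "00:10:00",
            })
            .Build();

        var netTcp = config.GetSection("Services:NetTcp");
        var services = new ServiceCollection();

        // Unnamed registration: this is what IOptionsSnapshot<T>.Value returns.
        services.Configure<ServiceSettings>(netTcp.GetSection("Default"));

        // Named registration: this is what IOptionsSnapshot<T>.Get("EmployeeService") returns.
        services.Configure<ServiceSettings>("EmployeeService", netTcp.GetSection("EmployeeService"));

        return services.BuildServiceProvider();
    }
}
```

Because the unnamed registration only applies to the default name, the named instance keeps nulls for anything the service section doesn’t set, which is exactly what the `??` fallbacks in ClientProxySettings rely on. IOptionsSnapshot is registered as scoped, so resolve it from a scope (in ASP.NET Core, the request scope) rather than from the root provider.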

We’ve gone over the basics of how I set up all the configuration to get here, how about we look at this IClientProxyFactory I keep mentioning.

IClientProxyFactory

For our demo I’m calling it IEmployeeClientProxyFactory and that is ultimately what we’ll inject anywhere we want to get access to the WCF client proxy. This makes it so we can share code between full framework and netcore projects as necessary. Overall the factory is pretty basic. What I’m showing you and what my project has are not quite the same thing. This post contains an aggregate of the things I tried before I made the discoveries about the lack of pooling and caching. But let’s pretend for a second I didn’t say that.

My interface has one single method on it. Get. It will return to me a client. Given my particular demo circumstances (one where I’m creating my own channel factory) I had to create an interface instead of returning the EmployeeClient directly. I called that IEmployeeClient.

Overall the way this ProxyFactory works is that I can request a client based on the enum. Possible values are Default (or null), ChannelFactory, or ManualBindings. My full framework client can pass null to get the default client based on configuration. If I try to do that in a netcore application it’ll throw an exception and the demo has one instance that demonstrates that.

I’ve built some extension methods that convert an IClientProxySettings into a System.ServiceModel.Channels.Binding instance as well as into an EndpointAddress. If you refer back to the WCF generated client for netcore in Reference.cs you’ll see that they are manually building those as well using all default values minus a hard-coded address for the service itself. Again, I went an alternate route because I need this to be configurable for our many test/staging sites.
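To give a feel for what such extension methods can look like, here is a rough sketch for the NetTcp case. It is not the code from the demo project: ProxySettingsSketch is a hypothetical class carrying only a handful of the IClientProxySettings values, the method names are invented, and keeping WCF’s own defaults for anything left unset is my assumption. It needs the System.ServiceModel.Primitives and System.ServiceModel.NetTcp packages.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Channels;

// Hypothetical, cut-down stand-in for IClientProxySettings.
public class ProxySettingsSketch
{
    public string Address { get; set; }
    public string DnsIdentity { get; set; }
    public TimeSpan? SendTimeout { get; set; }
    public long? MaxReceivedMessageSize { get; set; }
    public int? MaxStringContentLength { get; set; }
    public string SecurityMode { get; set; }
}

public static class ProxySettingsSketchExtensions
{
    public static Binding ToNetTcpBinding(this ProxySettingsSketch settings)
    {
        // Use the configured security mode when it parses; otherwise keep the binding's default.
        var binding = Enum.TryParse(settings.SecurityMode, true, out SecurityMode mode)
            ? new NetTcpBinding(mode)
            : new NetTcpBinding();

        // Only override WCF's defaults when a value was actually configured.
        if (settings.SendTimeout.HasValue)
            binding.SendTimeout = settings.SendTimeout.Value;
        if (settings.MaxReceivedMessageSize.HasValue)
            binding.MaxReceivedMessageSize = settings.MaxReceivedMessageSize.Value;
        if (settings.MaxStringContentLength.HasValue)
            binding.ReaderQuotas.MaxStringContentLength = settings.MaxStringContentLength.Value;

        return binding;
    }

    public static EndpointAddress ToEndpointAddress(this ProxySettingsSketch settings)
    {
        var uri = new Uri(settings.Address);

        // Attach a DNS identity only when one is configured.
        return string.IsNullOrEmpty(settings.DnsIdentity)
            ? new EndpointAddress(uri)
            : new EndpointAddress(uri, new DnsEndpointIdentity(settings.DnsIdentity));
    }
}
```

The remaining timeouts and reader quotas follow the same “set it only if configured” pattern, and a BasicHttp variant would do the same against BasicHttpBinding.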

Demo

I decided the best way to demonstrate how I’m using WCF with .NET Core is by creating two demo applications. One is a full framework client and the other is a netcore client. The full framework one runs a single demo whilst the netcore one runs four demos.

These demos are in no way conclusive to demonstrate performance issues which I previously alluded to and will talk about more in a moment. They are simple and will only demonstrate *how* to get the WCF client working in netcore.

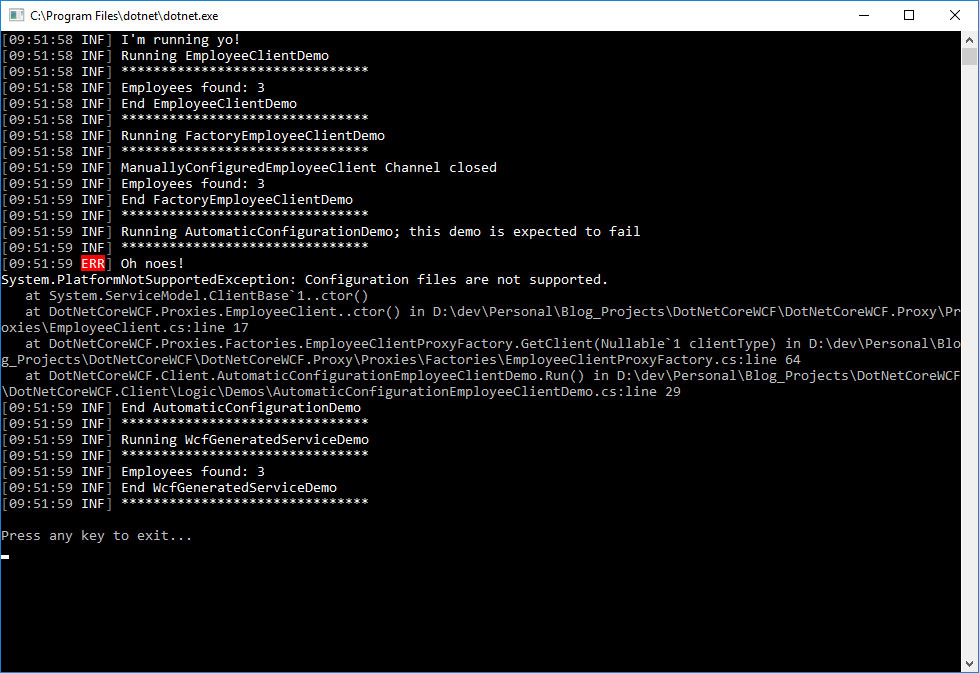

In the screenshot you’ll see the netcore client runs four different demos. The first one uses manual bindings where I pass the binding and service address into ClientBase. The second is where I build and provide a ChannelFactory into the client. The third is attempting to use automatic configuration which doesn’t work in netcore. The fourth is using the service reference built using the tools in Visual Studio.

My Findings

I found in my own implementation that performance started to degrade once I was hitting 50+ simultaneous connections on our test server using k6. I was running all the same tests I’d previously generated when we were first deploying the site and running into some bottlenecks (read about it on my post about Throttling Requests in .NET Core web applications in the “history” section). Each of these simultaneous requests was hitting multiple services multiple times to load a plethora of information.

Anyway, my previous tests on the homepage averaged 1600+ requests successfully served in under 10s (the average was 2.2s). This time around, however, I’m only getting ~500 requests served and some of them take as long as 20s to serve up.

I’m pretty flabbergasted. The upgrade to dotnetcore 2.2 is supposed to be faster, among other things. I tried running the site both in-process (new to Core 2.2) and out-of-process which has been the only way up to this point. My results weren’t different enough to conclusively say it had anything to do with the hosting model.

Our goal

Let’s segue for a brief moment and point out the major reason we wanted to perform the upgrade to netcoreapp2.2. Aside from the fact the older version is no longer supported, we also wanted to get some performance gains by going in-proc. The fact that our results were not only *not* faster, but were instead 1/3 as performant, was really a kick in the gut. I spent a lot of time and effort trying to get everything converted and working properly.

Tracking it down

I started digging. A lot. I threw some System.Diagnostics.Stopwatch instances around a bunch of different operations and ultimately tracked it down to my WCF client calls. It dawned on me that they probably weren’t pooling connections so after some massive Google-Fu I learned about how ClientBase works and learned that my particular approach wasn’t going to support the caching and pooling.
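The stopwatch approach is nothing fancy. A small helper along these lines (the Timing and Measure names are hypothetical, not from the project) is enough to wrap a suspect call and log how long it took:

```csharp
using System;
using System.Diagnostics;

public static class Timing
{
    // Runs the operation, reports the elapsed milliseconds through the callback,
    // and returns the operation's result. The report fires even if the operation throws.
    public static T Measure<T>(string label, Func<T> operation, Action<string, long> report)
    {
        var stopwatch = Stopwatch.StartNew();
        try
        {
            return operation();
        }
        finally
        {
            stopwatch.Stop();
            report(label, stopwatch.ElapsedMilliseconds);
        }
    }
}
```

Wrapping a service call then looks like `Timing.Measure("EmployeeService.Get", () => client.Get(request), (label, ms) => Console.WriteLine($"{label}: {ms} ms"))`, where `client` and `request` are whatever proxy and request object you already have.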

At a crossroads, I presented all my findings to the other senior on my team and we ultimately decided that while I *could* recreate all the caching and pooling in our own instance, we already had plans on migrating off of WCF into microservice architecture using RabbitMQ so we wouldn’t spend the time. For kicks and giggles, here is a list of links to the source code for those that want to go down that route:

- ClientBase

- ServiceChannelFactory

- ChannelFactoryRefCache

- ConfigurationEndpointTrait

- ServiceEndpoint
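If you do go down that route, a common first step is to keep one long-lived ChannelFactory per endpoint and create channels from it, instead of building a new factory for every client. The sketch below shows only that first step and is not something we shipped: it does not reproduce the caching inside ClientBase, it ignores faulted factories, and the ChannelFactoryCache and IPingService names are invented for the example. It assumes the System.ServiceModel.Primitives and System.ServiceModel.NetTcp packages.

```csharp
using System;
using System.Collections.Concurrent;
using System.ServiceModel;
using System.ServiceModel.Channels;

// Hypothetical contract used only to make the sketch self-contained.
[ServiceContract]
public interface IPingService
{
    [OperationContract]
    string Ping(string message);
}

// Hypothetical helper: one ChannelFactory<TChannel> per configuration key.
public static class ChannelFactoryCache<TChannel>
{
    private static readonly ConcurrentDictionary<string, Lazy<ChannelFactory<TChannel>>> Factories =
        new ConcurrentDictionary<string, Lazy<ChannelFactory<TChannel>>>();

    public static ChannelFactory<TChannel> GetOrAdd(
        string key, Func<Binding> createBinding, Func<EndpointAddress> createAddress)
    {
        // Lazy<T> makes sure the factory is built once even if two threads race on the same key.
        return Factories.GetOrAdd(
            key,
            _ => new Lazy<ChannelFactory<TChannel>>(
                () => new ChannelFactory<TChannel>(createBinding(), createAddress()))).Value;
    }
}
```

A caller would ask the cache for the factory, call `CreateChannel()` on it for each unit of work, and close that channel (cast to IClientChannel) when done, leaving the factory itself alive for the lifetime of the application.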

Ultimately we decided we’d keep the app targeting full framework but as a netcore app. Hopefully we can get around to the RabbitMQ migration sooner rather than later so we can switch it over the rest of the way.

By the way, if you’ve read other posts here you might have seen the one about Throttling requests in .NET Core Web Applications. We had to introduce a throttler mechanism on the same project I’ve referred to in this post and it looks like the reasons there were twofold: first, one of those service calls was extremely chatty (and we ended up caching most of those results on the web application itself), but second, it would most definitely point to the overall bottleneck of our WCF services in general.

Conclusion

I learned that while you *can* consume WCF services in a netcore application you need to be really careful about doing so if it is highly integral to your application. If it can be a performance bottleneck in high-usage scenarios then you’re probably better off running your core application in full framework mode. The tradeoff, of course, is that you lose certain aspects of the core framework by doing so and more importantly you lose future enhancements in that path.

**Update 10/5/2019** – With the recent release of .NET Core 3.0 you’ll be stuck on earlier versions if you want to continue using WCF with .NET Core. See Migrating WCF to gRPC using .NET Core for an alternative.

Demo code from today’s post can be located on my GitHub.
