Solving spark-submit's "There is insufficient memory for the Java Runtime Environment to continue." (a stubborn old problem): failed; error='Cannot allocate memory' (errno=12)

2021-03-05 01:28

Q: The first submission of the wordcount example worked fine. Submitting it a second time failed with the error below; the full output is pasted here.

In short: 1. As suggested online, check memory with free -m. The machine has 3757 MB in total, 3467 MB of it is already in use, and only 128 MB is available, which is certainly not enough to run the job. 2. Of the remedies listed in the answer, the second one is used here.

Reference: https://blog.csdn.net/jingzi123456789/article/details/83545355

Original article: https://www.cnblogs.com/wanpi/p/14335590.html

21/01/27 14:55:48 INFO spark.SecurityManager: Changing modify acls groups to:

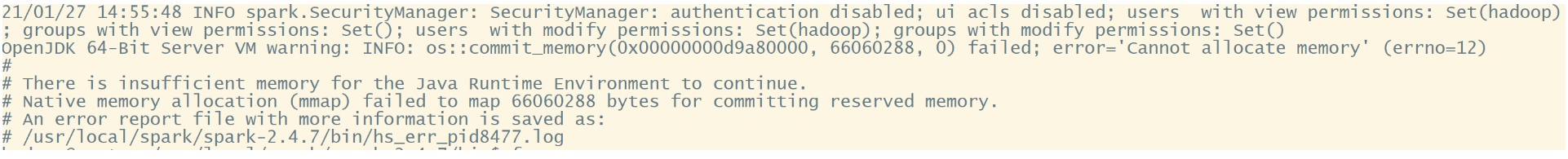

21/01/27 14:55:48 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory(0x00000000d9a80000, 66060288, 0) failed; error='Cannot allocate memory' (errno=12)

#

# There is insufficient memory for the Java Runtime Environment to continue.

# Native memory allocation (mmap) failed to map 66060288 bytes for committing reserved memory.

# An error report file with more information is saved as:

# /usr/local/spark/spark-2.4.7/bin/hs_err_pid8477.log
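The size in the error message is given in bytes, which makes it hard to judge at a glance. A minimal sketch of the conversion (the helper name `bytes_to_mib` is made up for illustration):

```shell
#!/bin/sh
# Convert a byte count to whole MiB using integer shell arithmetic.
bytes_to_mib() {
    echo $(( $1 / 1024 / 1024 ))
}

# 66060288 is the size reported by os::commit_memory in the log above.
bytes_to_mib 66060288   # prints 63
```

The JVM was asking for only about 63 MiB when it failed, which suggests the machine as a whole was short of memory rather than the job requesting an unusually large heap.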

A: Solution

hadoop@master:/usr/local/spark/spark-2.4.7/bin$ free -m

total used free shared buff/cache available

Mem: 3757 3467 201 2 87 128

Swap:
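The same check can be scripted before submitting a job. This is only a sketch: the function names and the 1024 MB threshold are assumptions for illustration, not values taken from Spark or from the original article.

```shell
#!/bin/sh
# Read `free -m` output on stdin and print the "available" column of the
# Mem: row (column 7, counting the "Mem:" label as column 1).
available_mb() {
    awk '/^Mem:/ { print $7 }'
}

# Succeed only if the available MB ($1) is at least the required MB ($2).
enough_memory() {
    [ "$1" -ge "$2" ]
}

# Run the check on the live system if `free` is installed.
if command -v free >/dev/null 2>&1; then
    avail=$(free -m | available_mb)
    required=1024   # hypothetical threshold, adjust to your job
    if [ -n "$avail" ] && enough_memory "$avail" "$required"; then
        echo "OK: ${avail} MB available"
    else
        echo "Low memory: ${avail:-unknown} MB available, want ${required} MB"
    fi
fi
```

Fed the output shown above, `available_mb` would return 128, well below any reasonable threshold for starting another JVM.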

2.1. Reduce the JVM memory configured for the services.

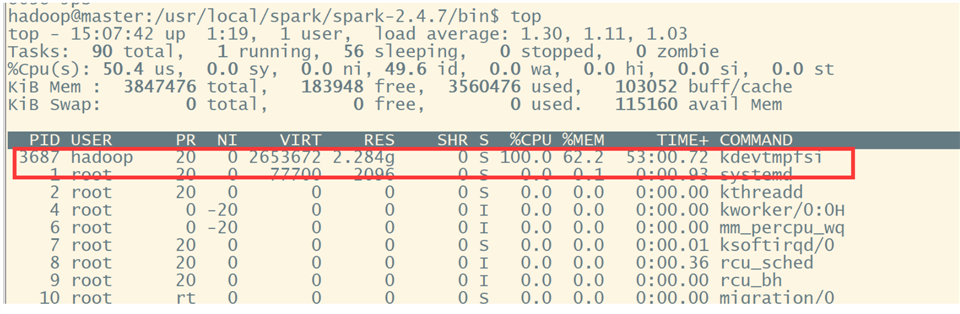

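2.2. Shut down unnecessary processes that use a lot of memory. (On Linux, use the top command to list all processes, see which ones take up too much memory, and selectively kill them to free it. Note: first make sure which processes are really not needed.)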

2.3. Add more memory.

hadoop@master:/usr/local/spark/spark-2.4.7/bin$ top

top - 15:07:42 up 1:19, 1 user, load average: 1.30, 1.11, 1.03

Tasks: 90 total, 1 running, 56 sleeping, 0 stopped, 0 zombie

%Cpu(s): 50.4 us, 0.0 sy, 0.0 ni, 49.6 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 3847476 total, 183948 free, 3560476 used, 103052 buff/cache

KiB Swap: 0 total, 0 free, 0 used. 115160 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

3687 hadoop 20 0 2653672 2.284g 0 S 100.0 62.2 53:00.72 kdevtmpfsi

1 root 20 0 77700 2096 0 S 0.0 0.1 0:00.93 systemd

2 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kthreadd
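Picking the largest consumer out of top by eye works, but it can also be done non-interactively. The sketch below assumes top's default column layout as shown above (PID in column 1, %MEM in column 10, COMMAND in column 12); the function name is hypothetical.

```shell
#!/bin/sh
# Read process rows in top's default layout on stdin and print
# "PID %MEM COMMAND" for the row with the highest %MEM.
# Header lines are skipped because their first field is not a number.
biggest_mem_row() {
    awk '$1 ~ /^[0-9]+$/ && $10 + 0 > max {
             max = $10 + 0
             row = $1 " " $10 " " $12
         }
         END { if (row != "") print row }'
}

# Usage on a live system (batch mode, one iteration):
#   top -b -n 1 | biggest_mem_row
```

For the three rows listed above, this would report PID 3687 (kdevtmpfsi) at 62.2% of memory.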

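The culprit is found: PID 3687 (kdevtmpfsi) is using 62.2% of memory. Kill that high-memory process, then submit the job again and it runs!

hadoop@master:/usr/local/spark/spark-2.4.7/bin$ kill -9 3687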
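kill -9 sends SIGKILL, which gives the process no chance to clean up. When the process is one you may want to stop gracefully, a gentler variant is to try SIGTERM first and fall back to SIGKILL only if it does not exit. A minimal sketch, with a hypothetical function name and a default grace period chosen arbitrarily:

```shell
#!/bin/sh
# Ask a process to exit with SIGTERM, wait up to $2 seconds (default 5),
# then fall back to SIGKILL if it is still present.
stop_process() {
    pid=$1
    grace=${2:-5}
    kill -TERM "$pid" 2>/dev/null || return 0    # nothing to stop
    while [ "$grace" -gt 0 ]; do
        kill -0 "$pid" 2>/dev/null || return 0   # process has exited
        sleep 1
        grace=$((grace - 1))
    done
    kill -KILL "$pid" 2>/dev/null || true        # last resort
    return 0
}

# Usage: stop_process 3687 10
```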

hadoop@master:/usr/local/spark/spark-2.4.7/bin$ top

top - 15:08:07 up 1:20, 1 user, load average: 1.04, 1.06, 1.02

Tasks: 91 total, 1 running, 56 sleeping, 0 stopped, 0 zombie

%Cpu(s): 0.2 us, 0.0 sy, 0.0 ni, 99.8 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem : 3847476 total, 2578636 free, 1161816 used, 107024 buff/cache

KiB Swap: 0 total, 0 free, 0 used. 2511832 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

744 root 10 -10 146756 8344 0 S 0.3 0.2 0:15.89 AliYunDun

4113 hadoop 20 0 3540084 391160 8308 S 0.3 10.2 0:11.74 java

1 root 20 0 77700 2096 0 S 0.0 0.1 0:00.93 systemd

2 root 20 0 0 0 0 S 0.0 0.0 0:00.00 kthreadd

4 root 0 -20 0 0 0 I 0.0 0.0 0:00.00 kworker/0:0H
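Remedy 2.1 was not needed here, but on a small machine it can be combined with the fix above by capping the memory that spark-submit requests. The sketch below only prints a command line for review. `--driver-memory`, `--executor-memory` and `--class` are standard spark-submit options, while the sizes, class name and jar path are hypothetical placeholders.

```shell
#!/bin/sh
# Hypothetical values: adjust the sizes, class and jar to your own job.
DRIVER_MEM=512m
EXECUTOR_MEM=512m
APP_CLASS=WordCount              # hypothetical main class
APP_JAR=/path/to/wordcount.jar   # hypothetical jar path

# Print the spark-submit command line with explicit memory caps.
build_submit_cmd() {
    echo "spark-submit --driver-memory $DRIVER_MEM --executor-memory $EXECUTOR_MEM --class $APP_CLASS $APP_JAR"
}

build_submit_cmd
```

Printing the command rather than running it keeps the sketch safe to try on a machine without Spark; copy the printed line into the terminal once the values look right.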
