Textual Entailment (Natural Language Inference) - AllenNLP

2020-12-13 15:16

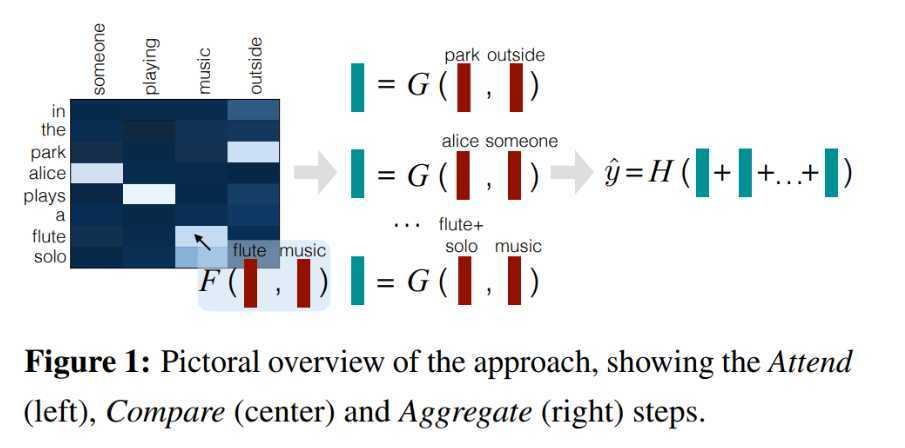

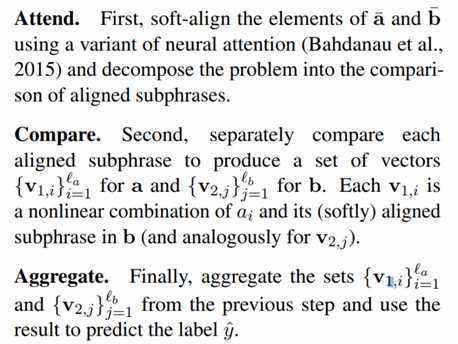

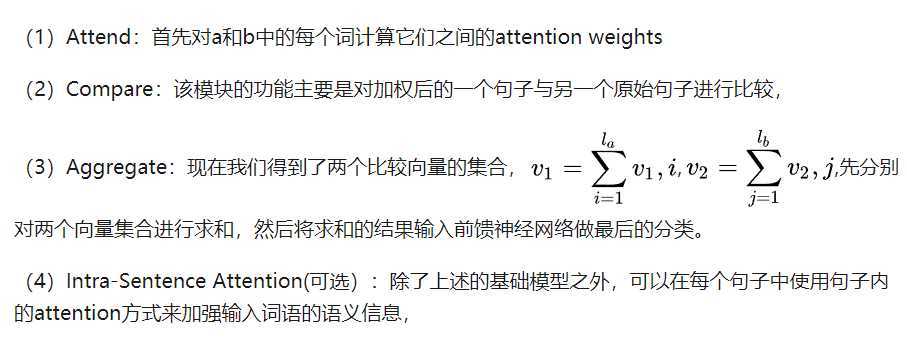

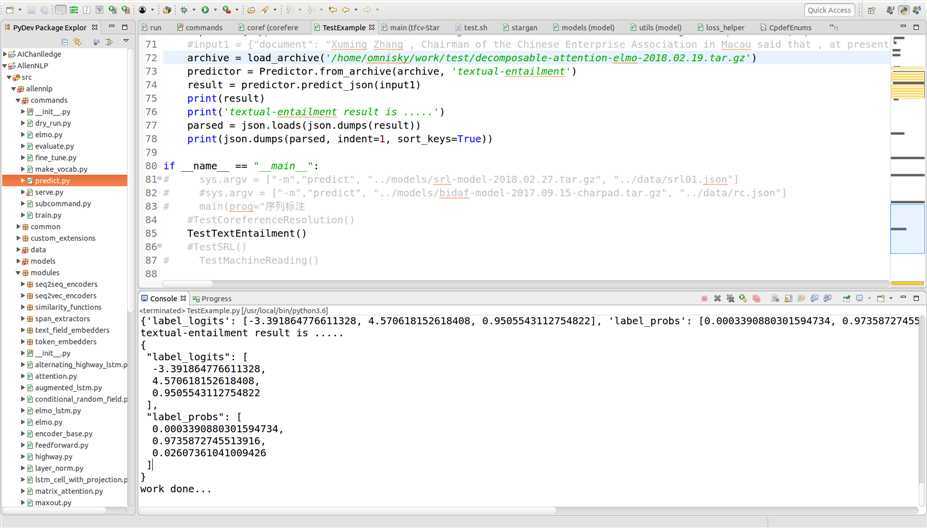

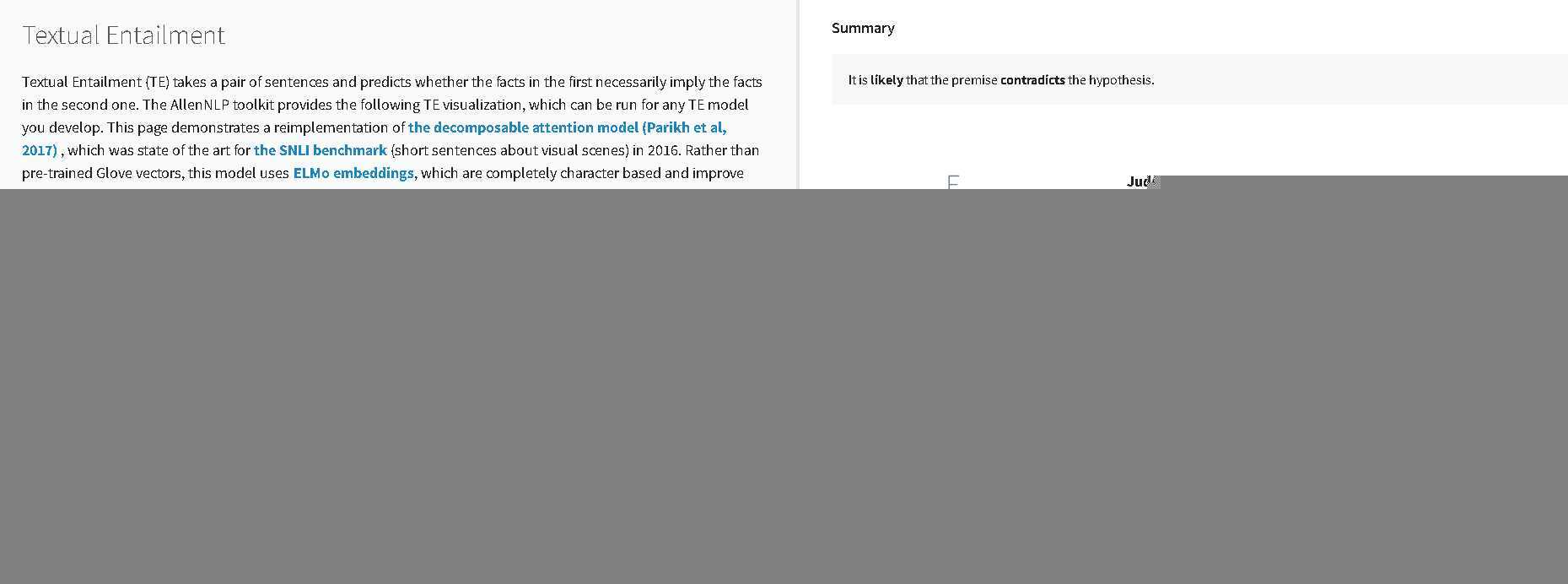

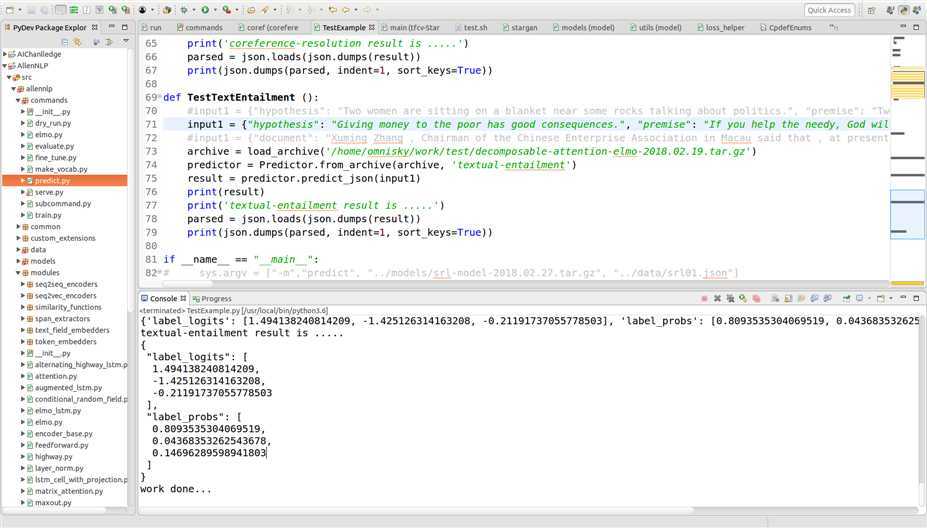

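Natural language inference (NLI) is one of the higher-level tasks in NLP, and it covers a lot of ground: machine reading, question answering, and dialogue are all, at heart, forms of natural language inference. While looking through the AllenNLP package recently, I came across a module for the textual entailment task. The task is set up as follows: given a premise text, infer how a hypothesis text relates to that premise. The relationship is usually classified as entailment or contradiction. Entailment means the hypothesis can be inferred from the premise; contradiction means the hypothesis contradicts the premise. Datasets such as SNLI add a third label, neutral, for pairs where the premise neither entails nor contradicts the hypothesis. The result of textual entailment is a probability for each of these relations.

Textual Entailment

AllenNLP integrates a paper written by authors at Google and published at EMNLP 2016: "A Decomposable Attention Model for Natural Language Inference".

Trying out the paper

Test example 1:

Premise: Two women are wandering along the shore drinking iced tea.

Hypothesis: Two women are sitting on a blanket near some rocks talking about politics.

The test result and its visualization are shown in the accompanying screenshots.

Test example 2:

Premise: If you help the needy, God will reward you.

Hypothesis: Giving money to the poor has good consequences.

The test result is shown in the accompanying screenshots.

Original article: https://www.cnblogs.com/shona/p/11577273.html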
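To make the "probability for each relation" output concrete, here is a minimal sketch of how raw model scores could be turned into per-label probabilities and a predicted label. It assumes three labels (entailment, contradiction, and the neutral label used by SNLI-style datasets) in an order chosen purely for this example, and the scores are invented for illustration; they are not outputs of the AllenNLP model.

```python
import math

# Label order is an assumption made for this sketch; a real model defines its own.
LABELS = ["entailment", "contradiction", "neutral"]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return (best_label, {label: probability}) for one premise/hypothesis pair."""
    probs = softmax(logits)
    by_label = dict(zip(LABELS, probs))
    best = max(by_label, key=by_label.get)
    return best, by_label


# Made-up scores for illustration only; NOT produced by the AllenNLP model.
example_logits = [0.2, 2.5, 1.0]
label, probs = classify(example_logits)

print(label)
for name, p in probs.items():
    print(f"{name}: {p:.3f}")
```

The predicted label is simply the relation with the highest probability, which is why a demo can show both a single verdict and the full distribution behind it.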

Textual Entailment (TE) models take a pair of sentences and predict whether the facts in the first necessarily imply the facts in the second. The AllenNLP TE model is a re-implementation of the decomposable attention model (Parikh et al., 2016), a widely used TE baseline that was state-of-the-art on the SNLI dataset in late 2016. The AllenNLP TE model achieves an accuracy of 86.4% on the SNLI 1.0 test set, a 2% improvement over most publicly available implementations and a score similar to that of the original paper. Rather than pre-trained GloVe vectors, this model uses ELMo embeddings, which are entirely character-based and account for the 2% improvement.
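The paragraph above names the decomposable attention model without describing it. As a rough illustration of the first ("attend") step from the paper, the sketch below soft-aligns every premise token with the hypothesis tokens, and vice versa, using dot-product scores and a softmax. It is a simplification under stated assumptions: the paper passes token vectors through a learned feed-forward network before scoring, which is omitted here; the 2-d "embeddings" are invented toy values; and this is not AllenNLP's implementation.

```python
import math


def softmax(scores):
    """Normalize a list of scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def weighted_sum(weights, vectors):
    """Combine vectors into one vector using the given weights."""
    dim = len(vectors[0])
    return [sum(w * vec[k] for w, vec in zip(weights, vectors)) for k in range(dim)]


def attend(premise, hypothesis):
    """Soft-align premise tokens to the hypothesis and vice versa.

    Simplification: raw vectors are scored directly, without the learned
    feed-forward network used in the paper.
    """
    # scores[i][j]: similarity between premise token i and hypothesis token j
    scores = [[dot(a, b) for b in hypothesis] for a in premise]
    # beta[i]: summary of the hypothesis as seen from premise token i
    beta = [weighted_sum(softmax(row), hypothesis) for row in scores]
    # alpha[j]: summary of the premise as seen from hypothesis token j
    columns = [list(col) for col in zip(*scores)]
    alpha = [weighted_sum(softmax(col), premise) for col in columns]
    return beta, alpha


# Toy 2-d token vectors, invented for this sketch (hypothetical values).
premise = [[1.0, 0.0], [0.0, 1.0]]
hypothesis = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
beta, alpha = attend(premise, hypothesis)
print(beta)
print(alpha)
```

In the paper, each token is then compared with its aligned summary, and the comparison vectors are summed and fed to a classifier that produces the final label scores.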

Article link: http://soscw.com/essay/34961.html